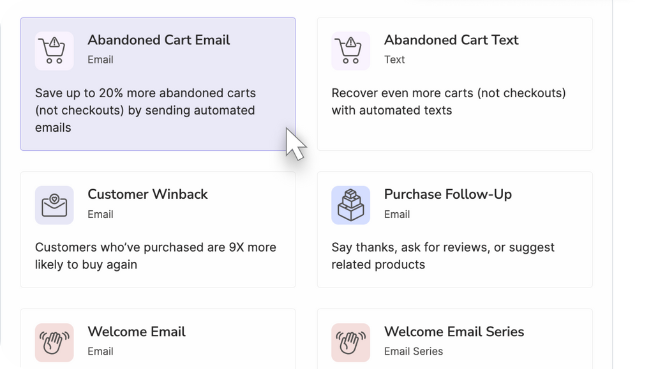

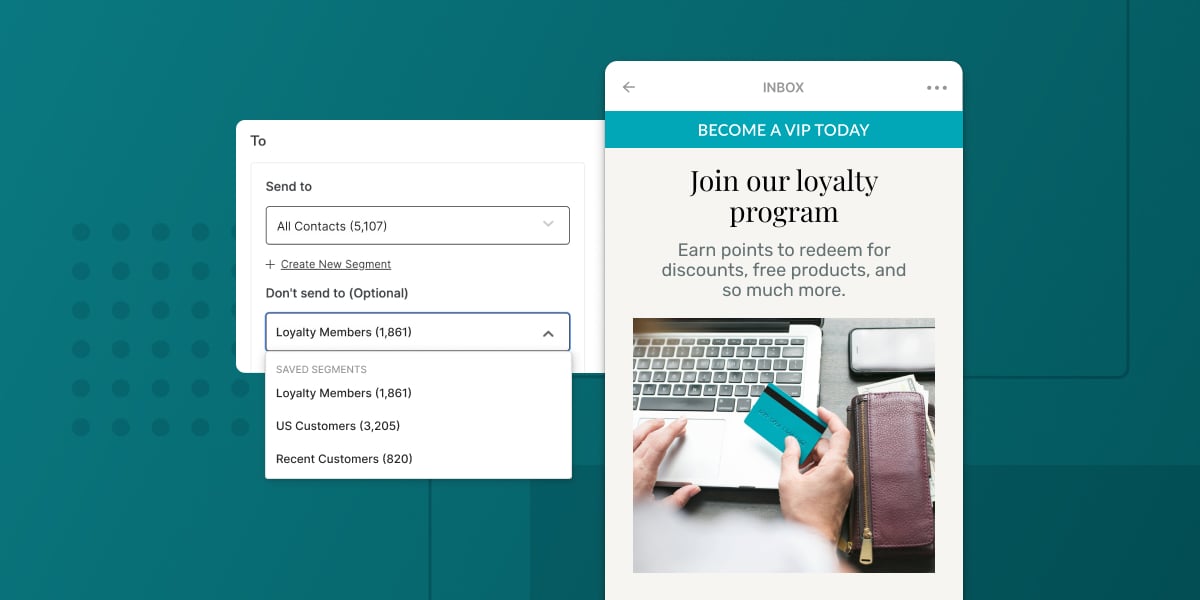

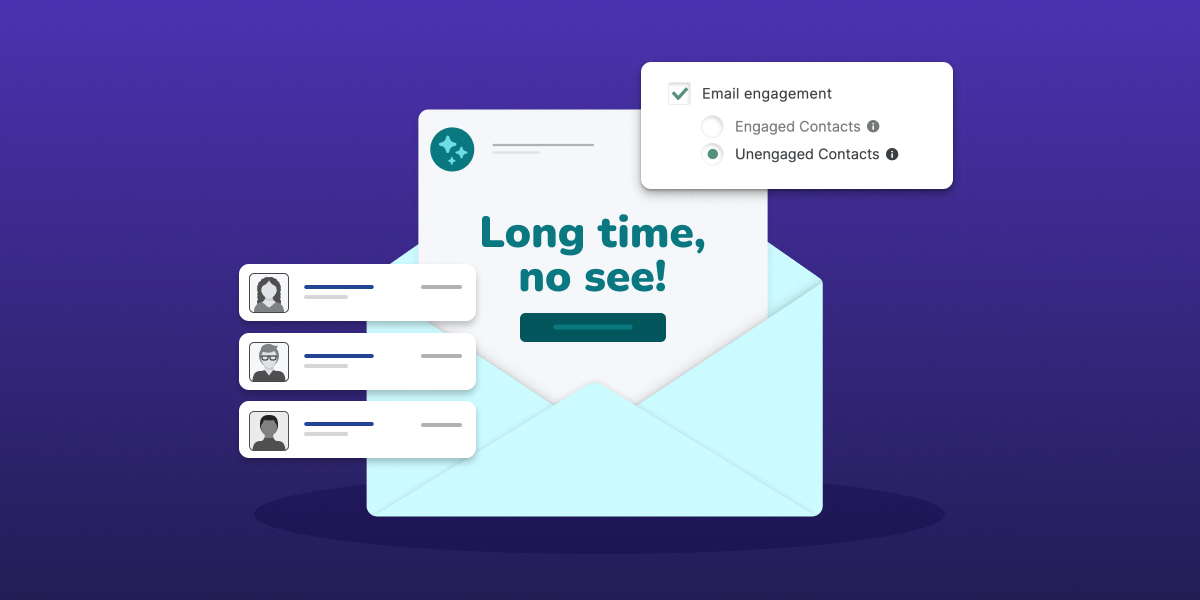

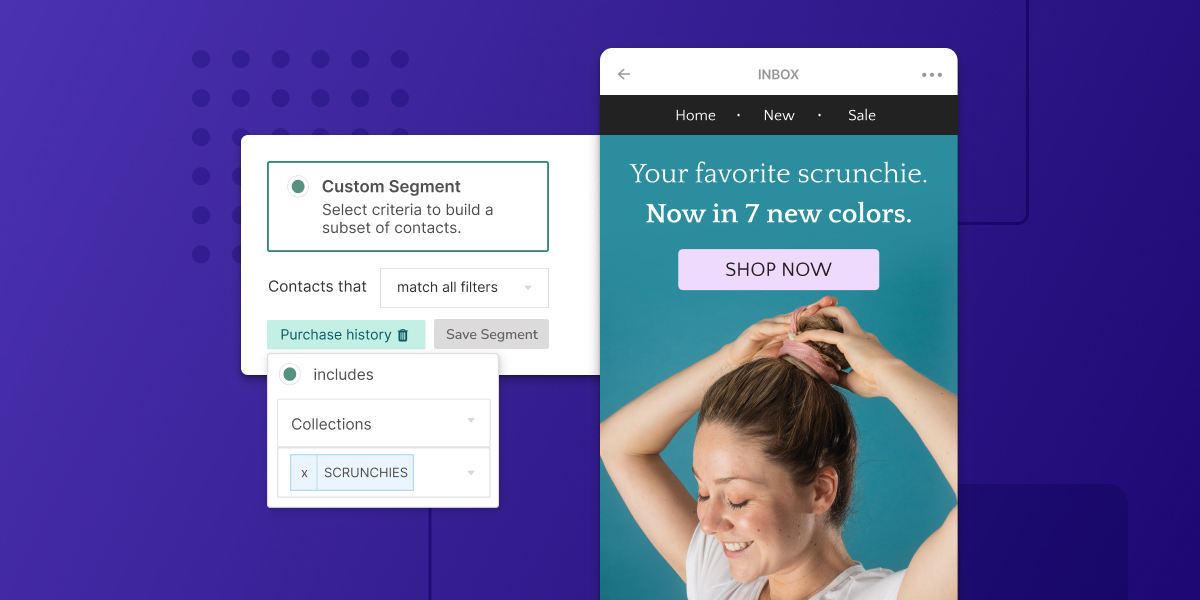

Email Marketing

Email marketing tactics to help you grow sales for your store.

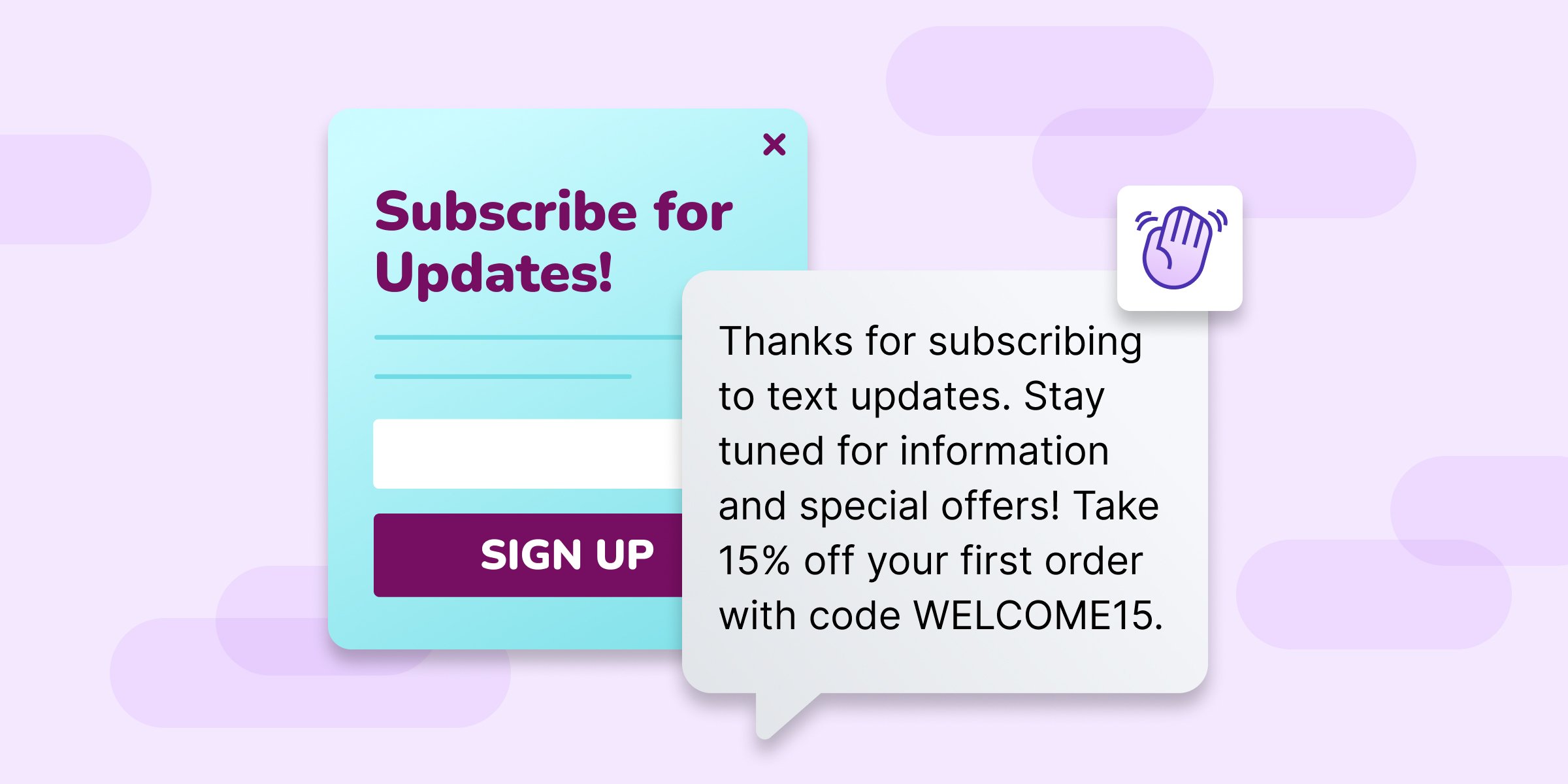

SMS Marketing

Sell to shoppers on the devices they use most – their smartphones.

List Growth

Proven strategies to help you turn browsers into subscribers.

Case Studies

Real stories from real Privy merchants.

Get great ecommerce marketing advice straight to your inbox.

We're also on YouTube!

It’s easier than ever to build an online store, but it’s harder than ever to grow it. We're here to help.

Dive into best practices and tips to create a welcome discount popup that'll make shoppers excited to sign up for your list!

Our streamlined, user-friendly editor is designed to make you feel like a pro. Check it out.

Check out our podcast 🎙️

Featuring tons of bite-sized expert advice to help you grow your store from $0 to $1M in sales.

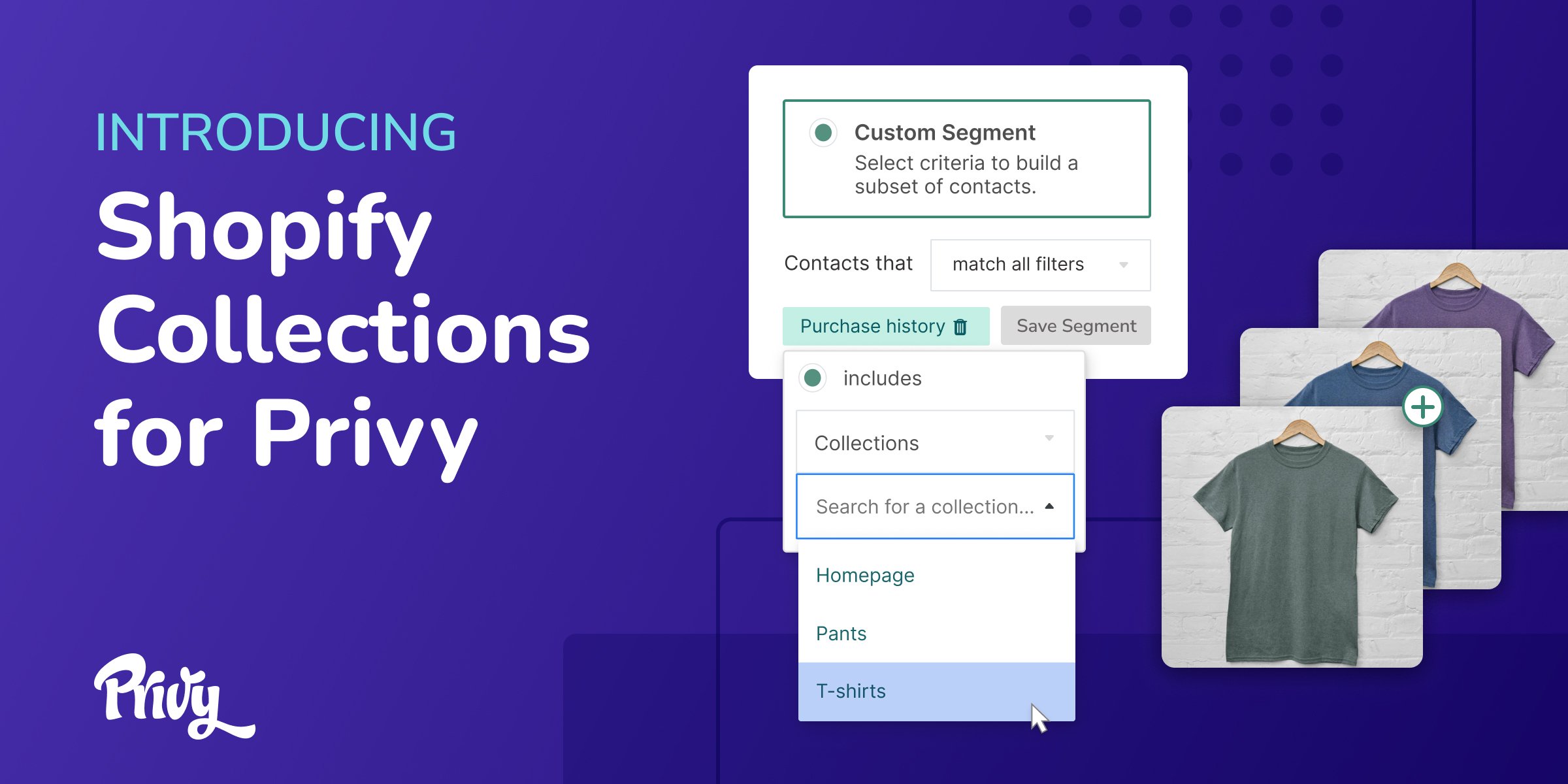

Product News

Keep tabs on product releases and other updates from the Privy team.

Recent Articles

Try Privy Free for 15 Days

Grow your email and SMS lists with our pre-built templates, and start sending money-making emails. Automatically syncs with Shopify.
