All-in-One Ecommerce Marketing Platform

Privy is the Fastest Way To Grow Sales With Email & SMS

Get the ecommerce marketing platform built for stores that need to grow sales now. Grow your contact lists, save abandoned carts, send money-making emails & texts, and more – all in one place.

Over 18,500 five-star Shopify reviews

What can Privy do for your online store?

Get access to all the Email, Conversion, and SMS tools you need to increase sales at each stage of your customers' journey.

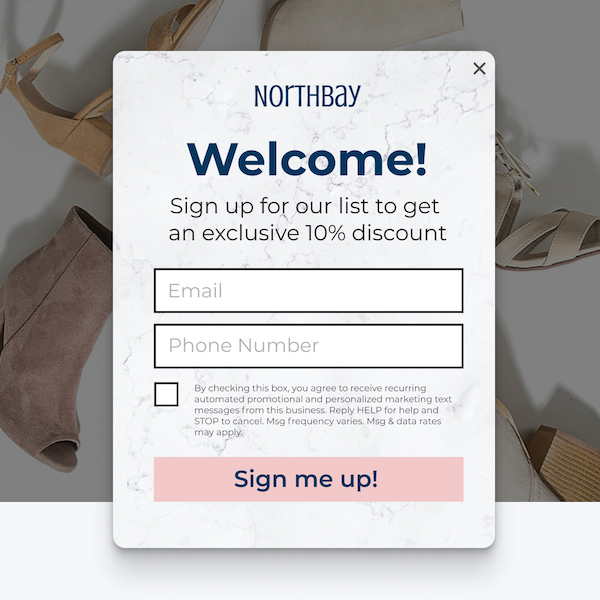

Grow your email and SMS lists faster

Turn casual browsers into loyal subscribers with customizable popups, flyouts, and other high-converting displays.

Welcome incentives

Incentivize new visitors to buy from your Shopify store. Offer a first-time discount in exchange for an email or phone number.

Interactive spin-to-wins

Signing up for your email or SMS list should be fun. Add an interactive spin-to-win wheel and customize your prizes however you want.

Mobile-optimized popups

Whether someone shops on a desktop, smartphone, or tablet, Privy popups won't get in the way of their experience.

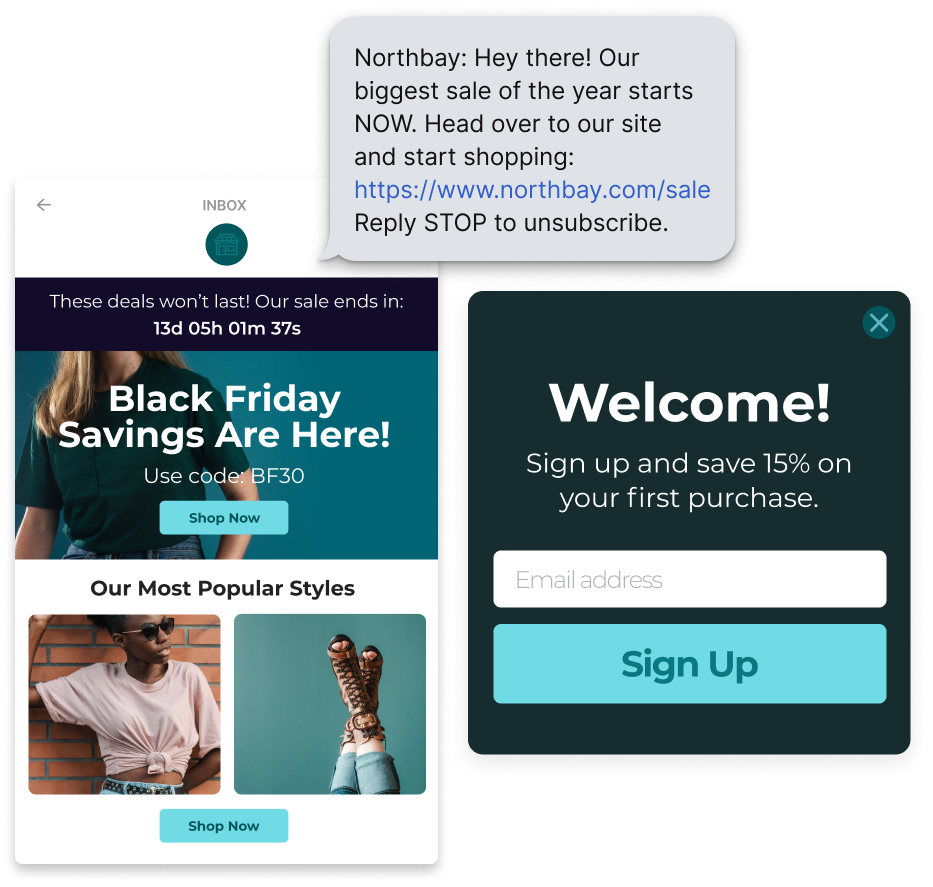

Let your subscribers be the first to know

Communication is key if you want to retain customers. Privy gives you all the tools you need to stay in front of them.

Welcome email series

Add a first-time coupon offer, tell your brand story, and create a connection that will keep your email contacts engaged.

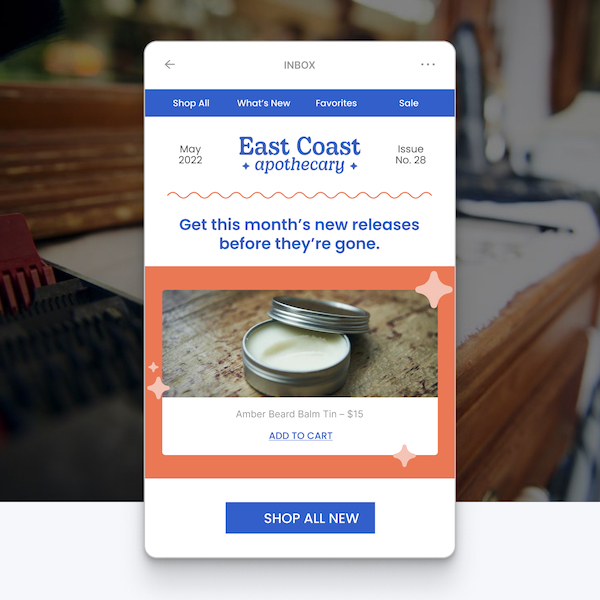

Brand newsletters

Announce new products, seasonal sales, limited-time offers, and other money-making promotions with newsletter emails.

Broadcast text messaging

Send marketing and sales texts to your SMS subscribers. Broadcast text is the perfect complement to your email newsletter.

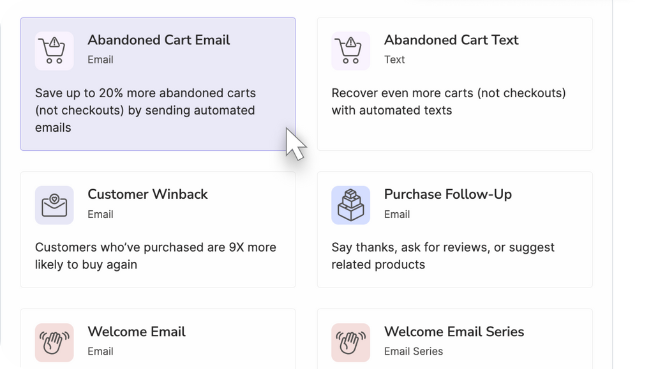

Get shoppers to buy more from your store

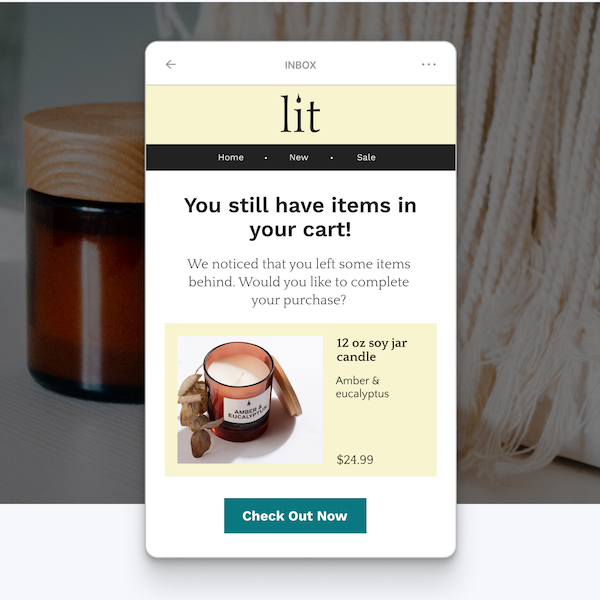

Give customers the nudge they need to add more items to their carts and finish their purchases.

Abandoned cart texts & emails

Notify shoppers on your email or SMS list when they've left items in their carts. Automatically send emails or texts after a time delay you choose.

Cart-saver campaigns

Stop cart abandonment before it happens. Cart-saver popups show when someone is about to leave your site. Reveal a coupon code in exchange for their contact info.

Cross-sell campaigns

Launch product recommendation popups at checkout that are personalized to the items in each shopper's cart.

Announce free shipping

Add a free shipping bar to the top of your website and show your shoppers exactly how much they need to spend in order to receive free shipping.

Add a countdown timer

Create urgency on your website with a countdown timer. Shoppers will know they only have a limited time to take advantage of your offer.

Turn one-time buyers into repeat customers

Your store can't live on one-time purchases. Make buying a habit with emails and texts that keep customers coming back for more.

Customer win-back emails

Set up reminders and time-delayed offers to make sure your best customers never go too long without purchasing. Add a limited-time discount to sweeten the deal.

Post-purchase follow-ups

Keep customers informed every step of the way with post-purchase follow-up emails and texts. Ask customers for feedback on their purchase, plus a star rating and review on your website.

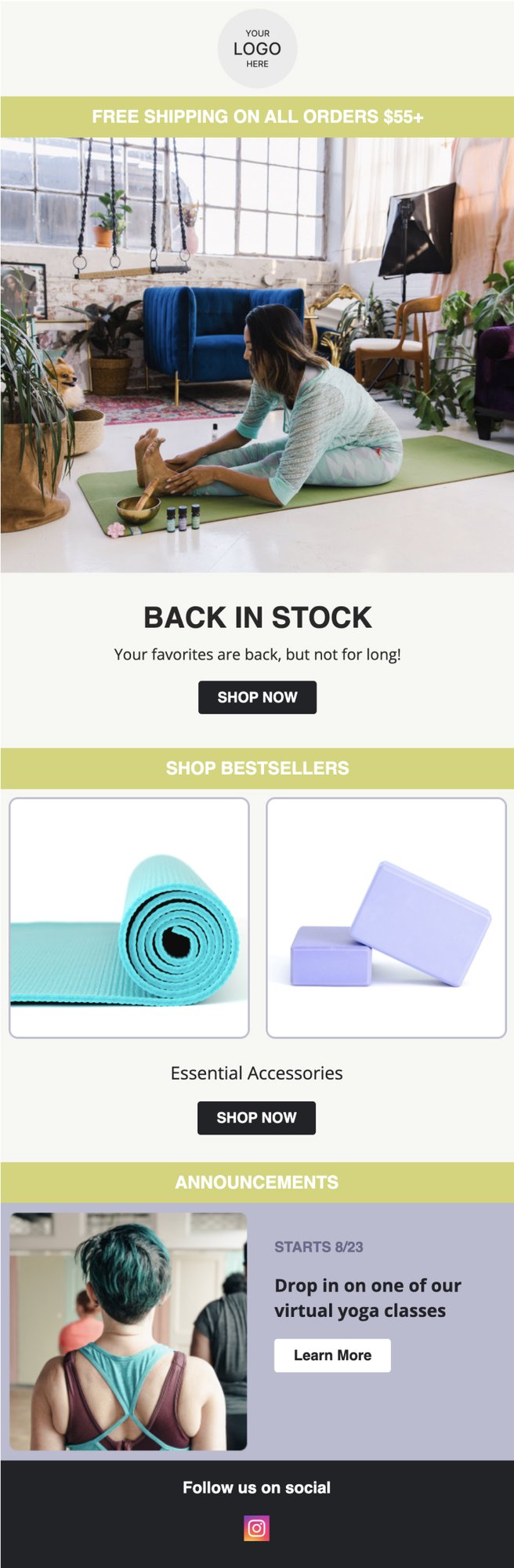

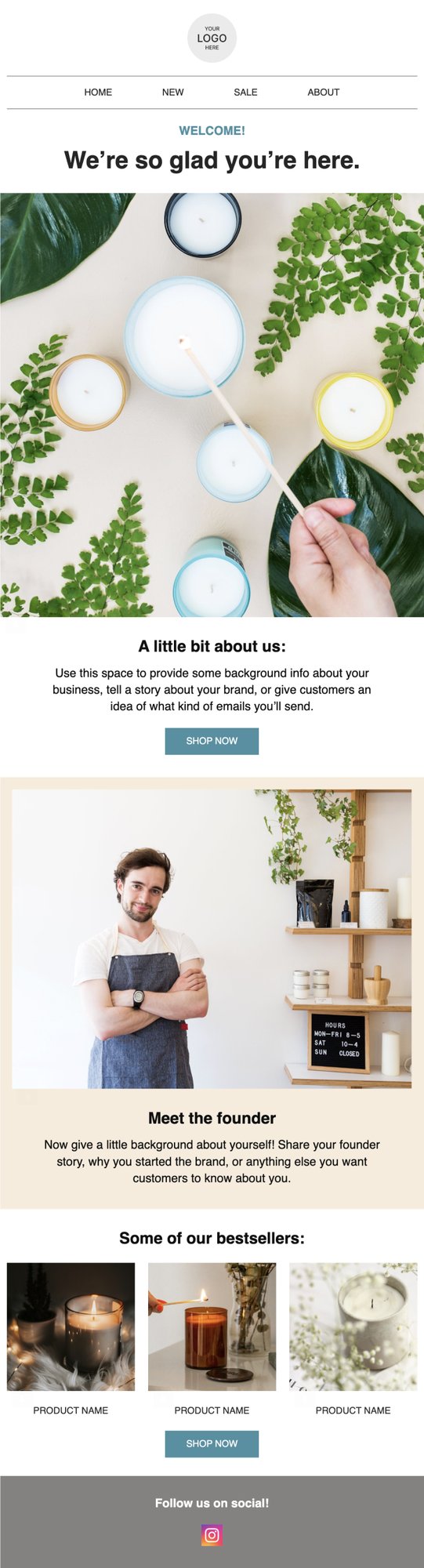

Build beautiful emails in a snap with Privy's library of customizable email templates

Choose from a variety of our pre-built email templates, and customize them to fit your brand with Privy's drag-and-drop editor.

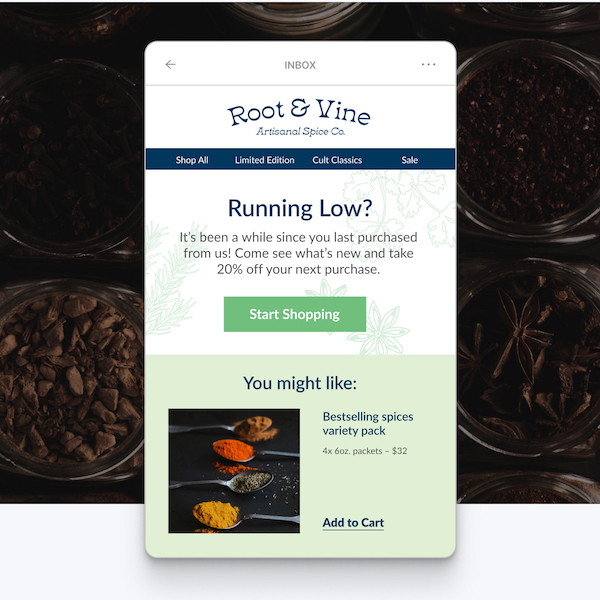

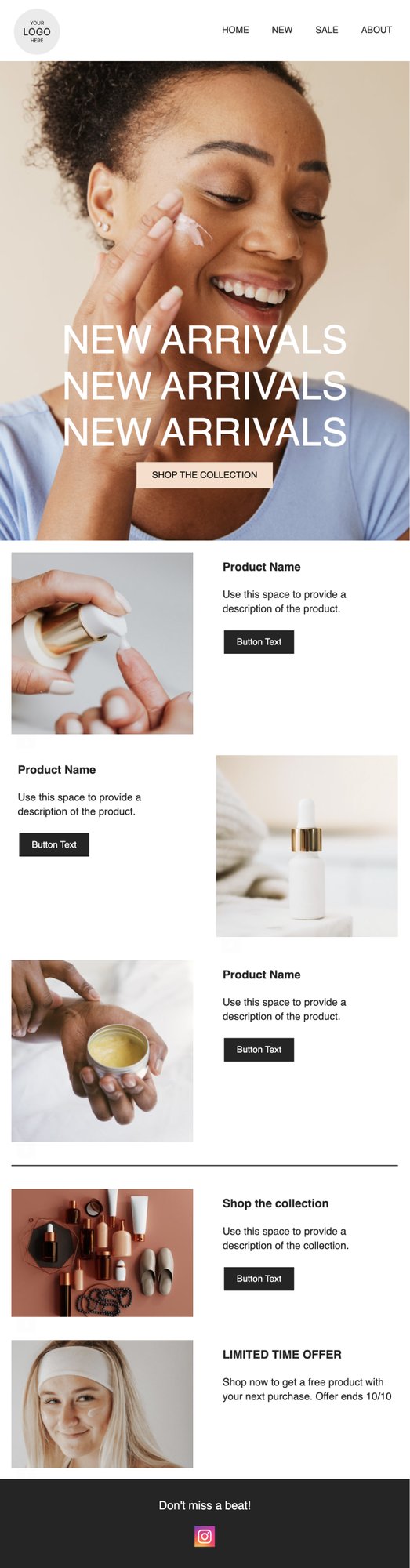

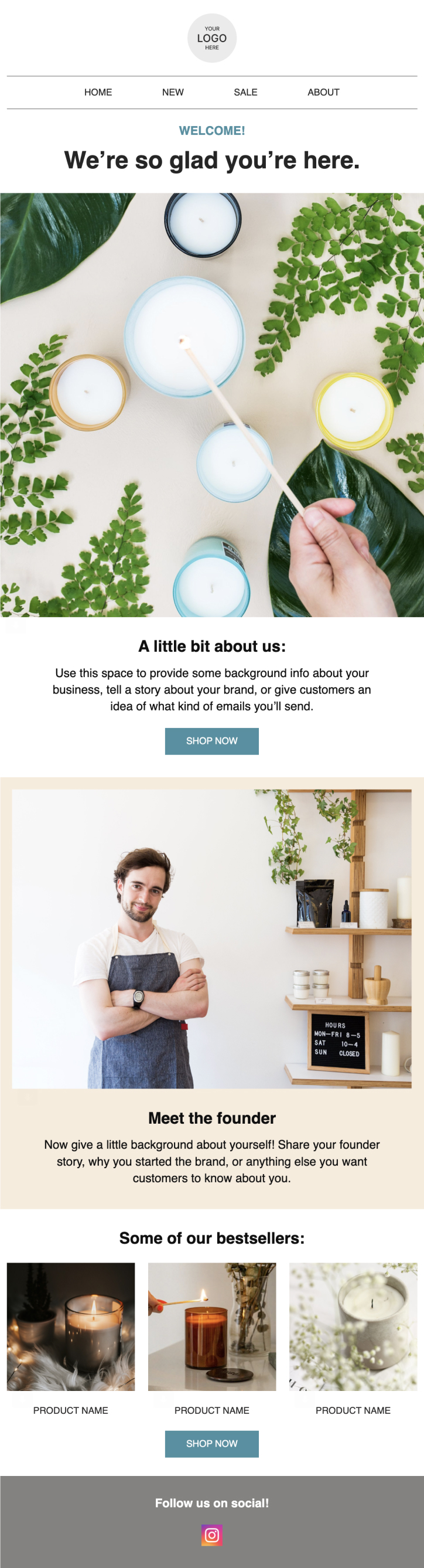

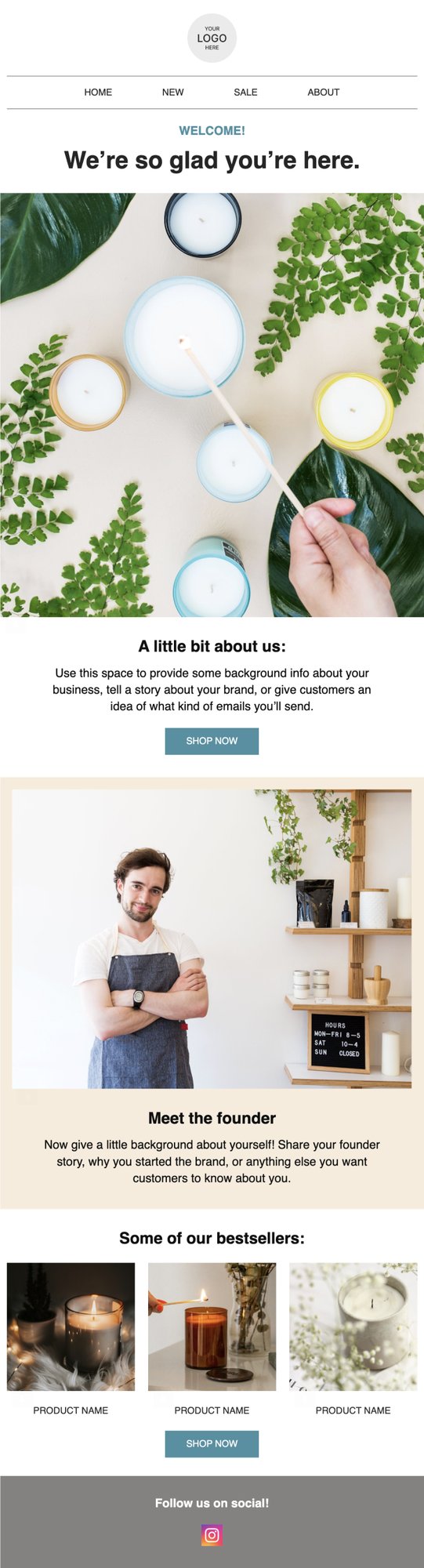

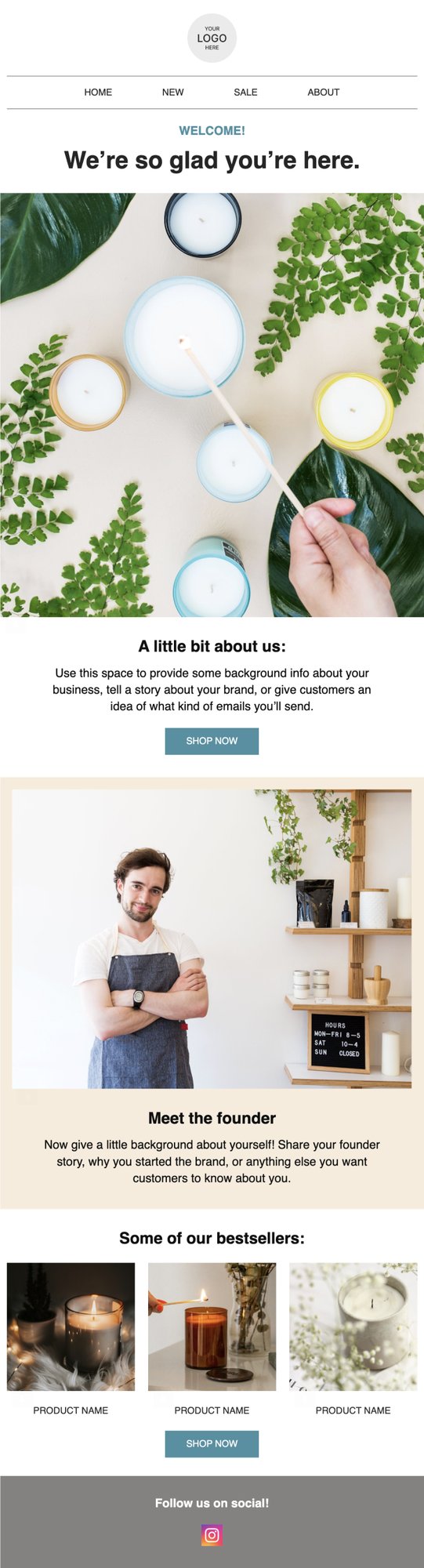

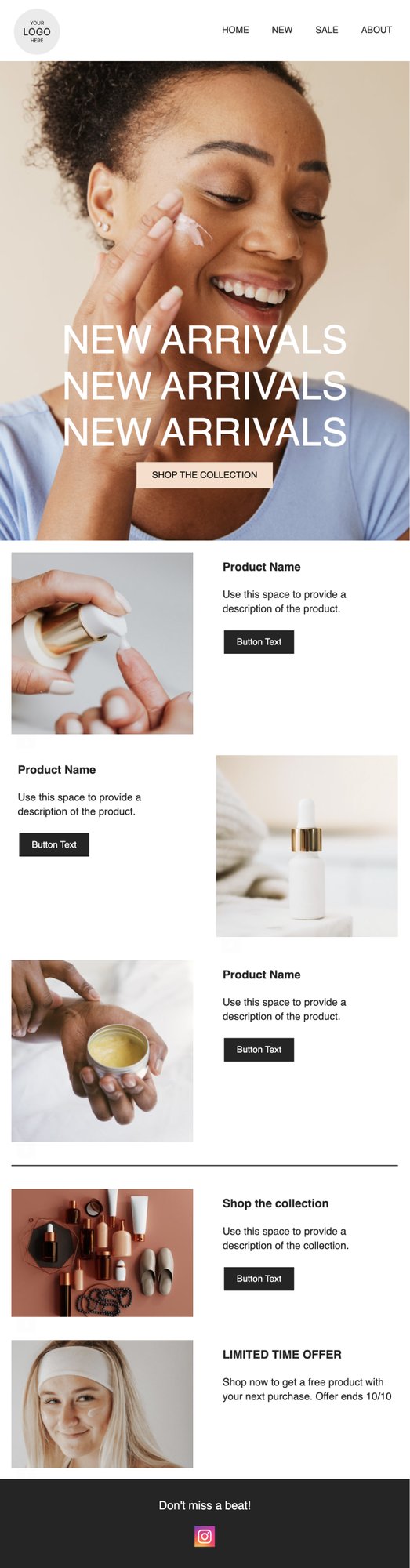

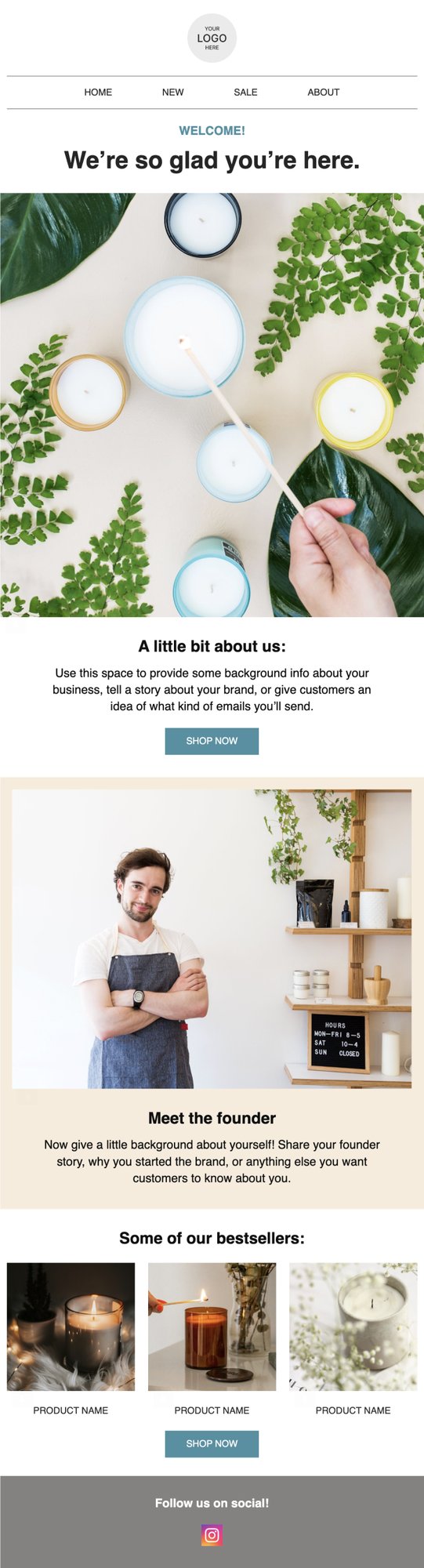

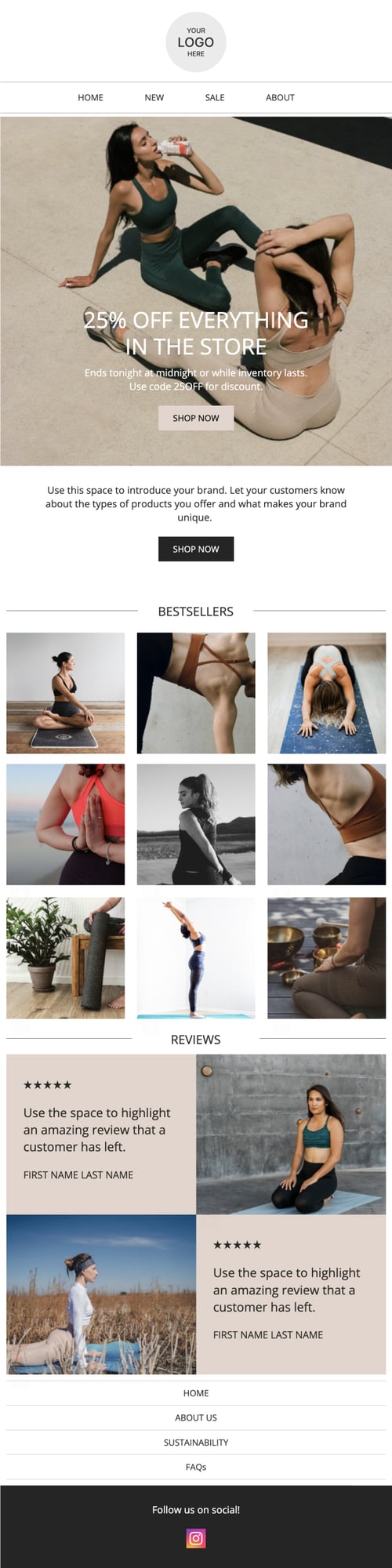

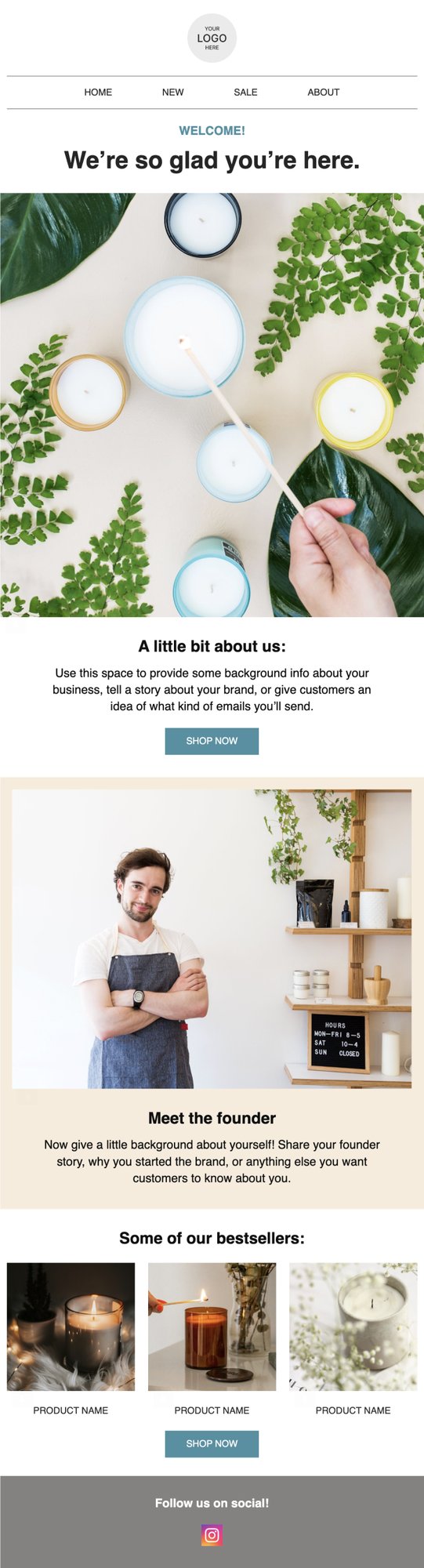

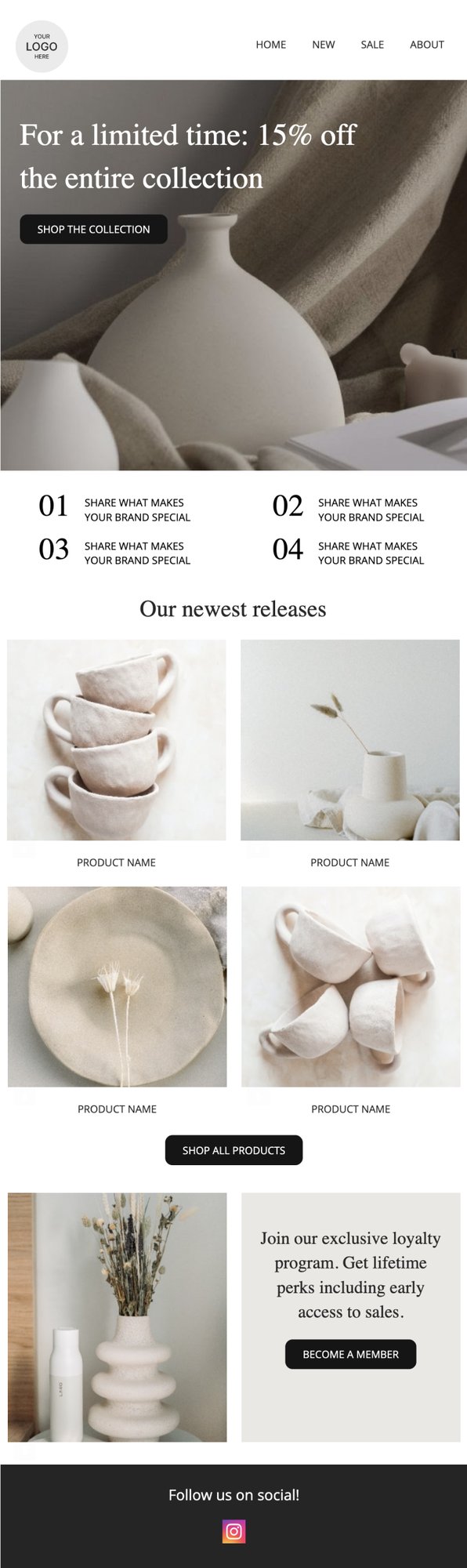

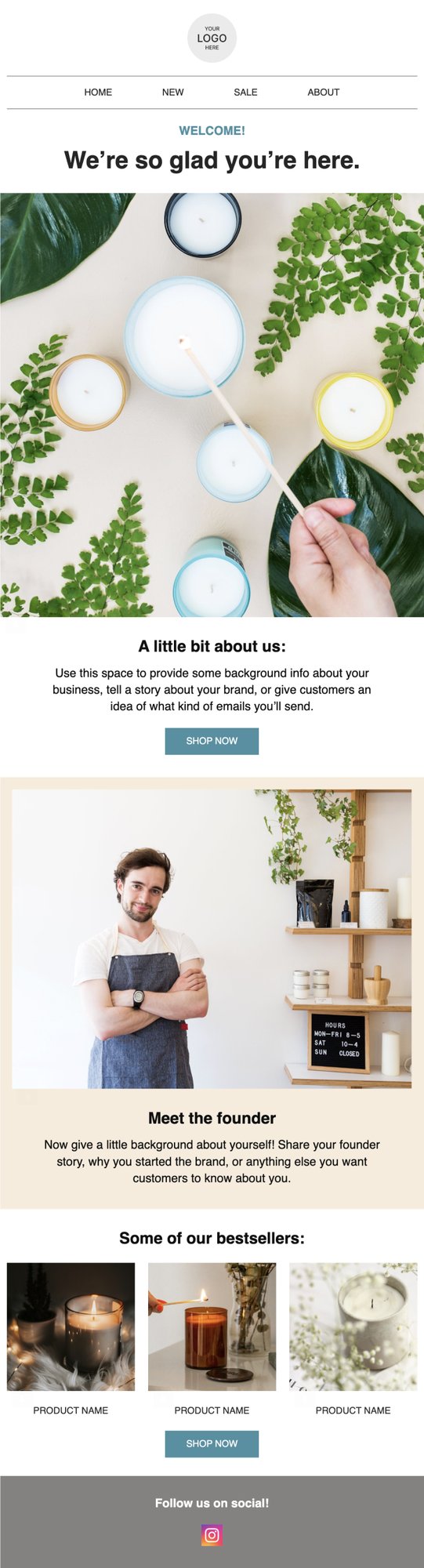

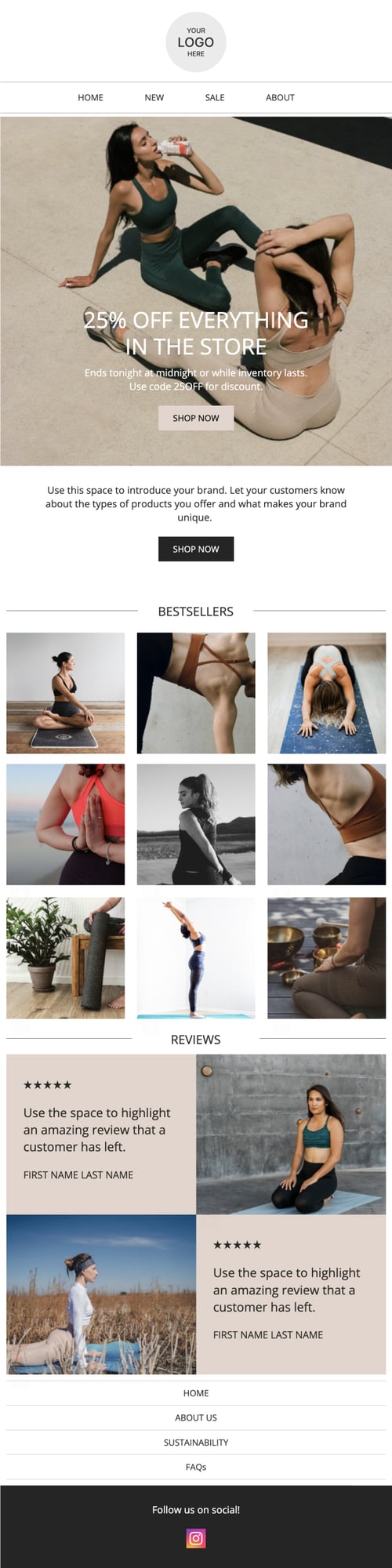

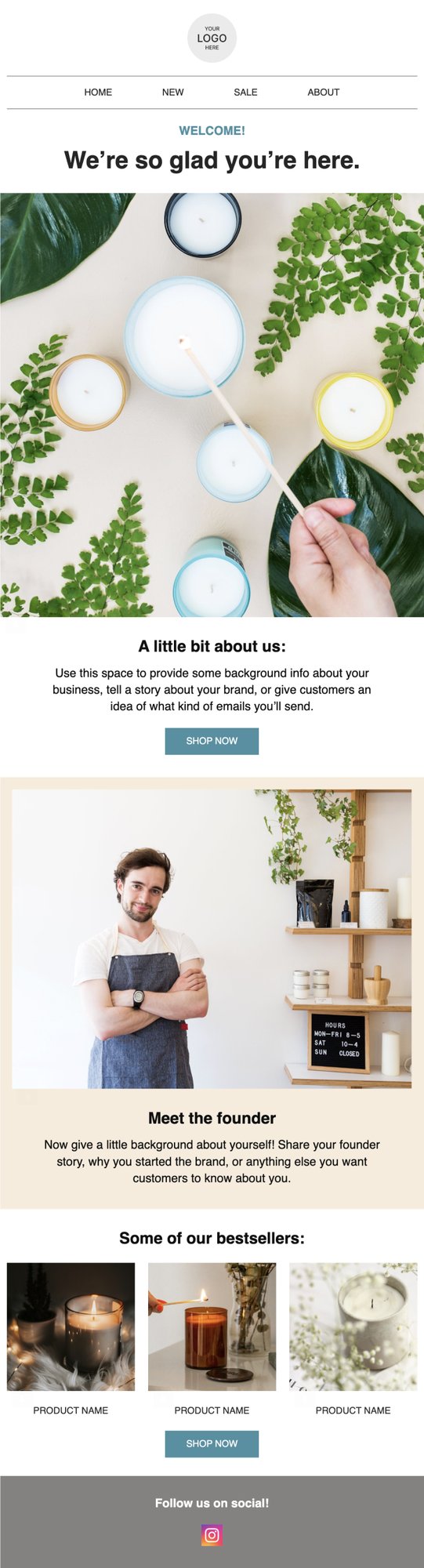

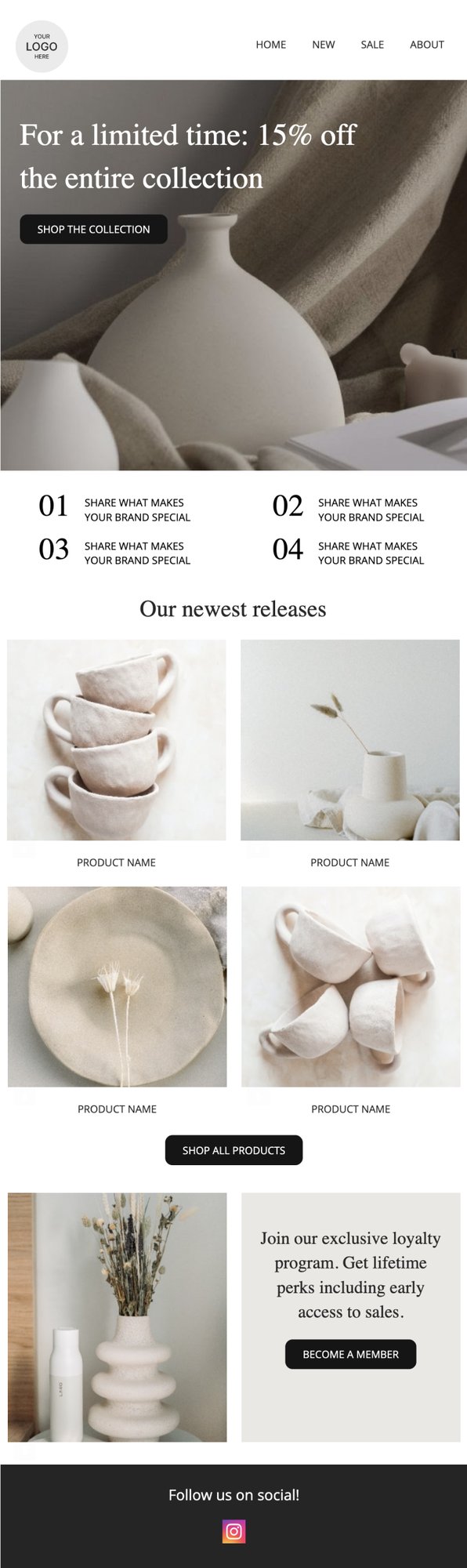

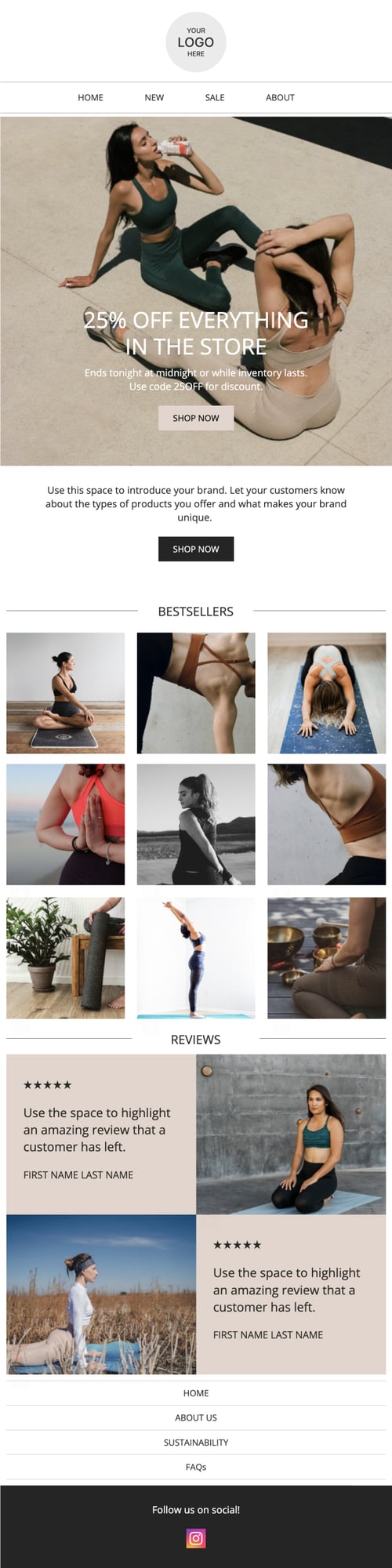

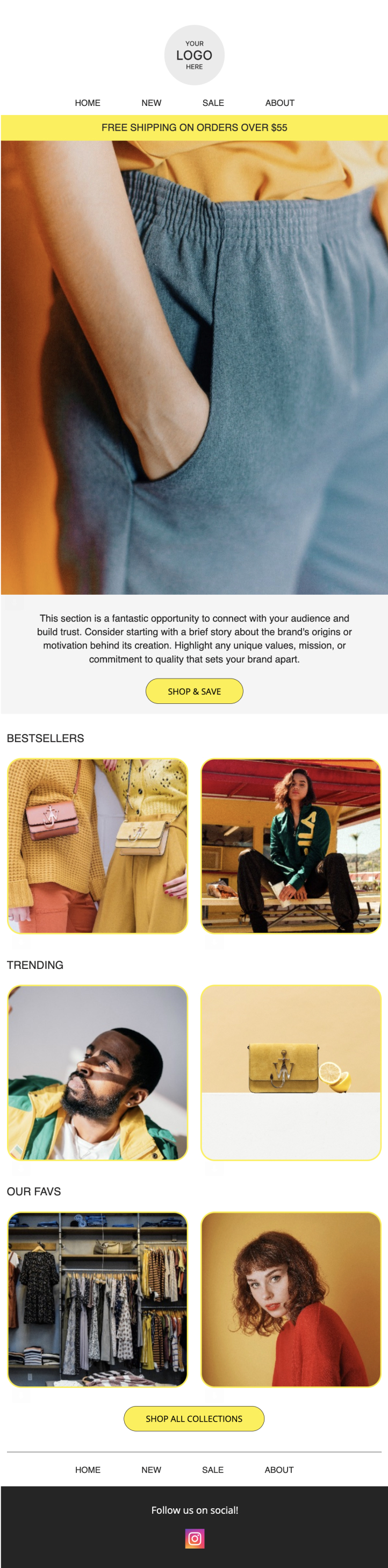

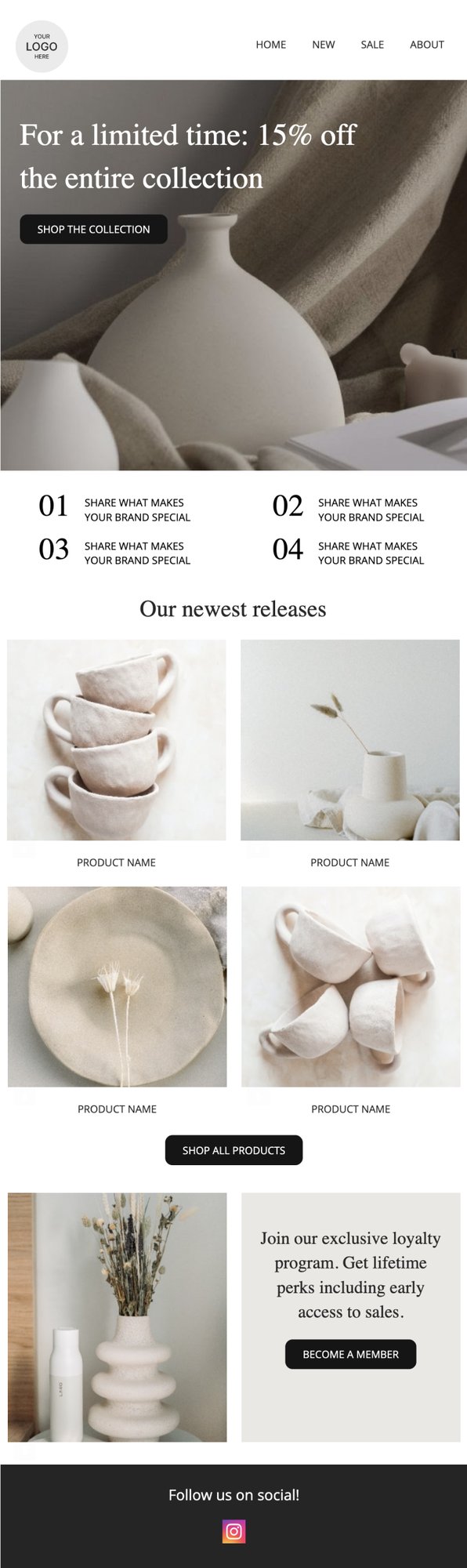

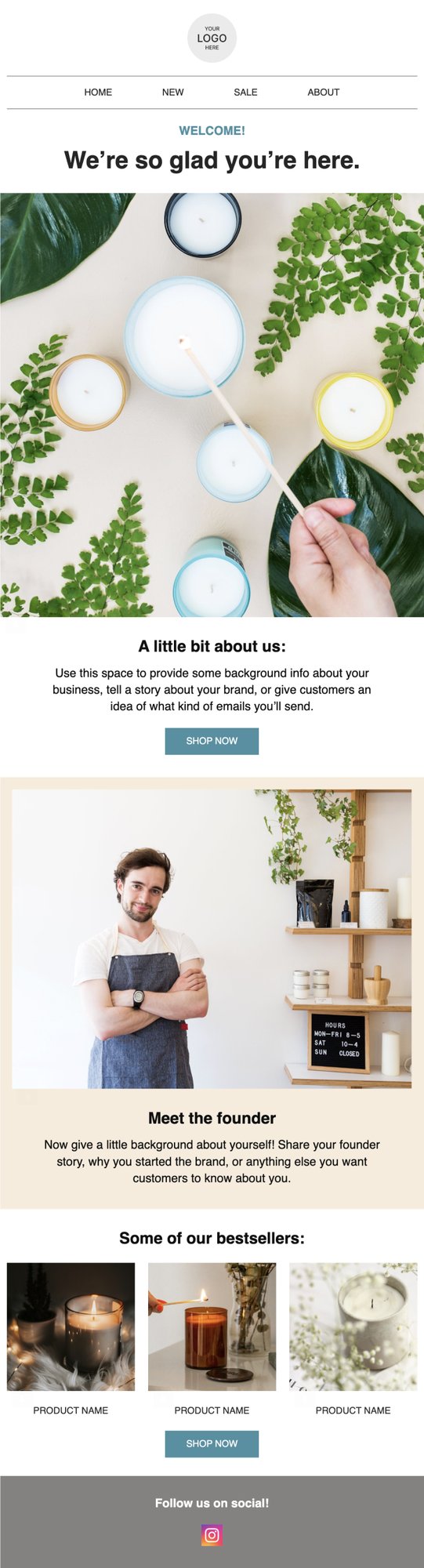

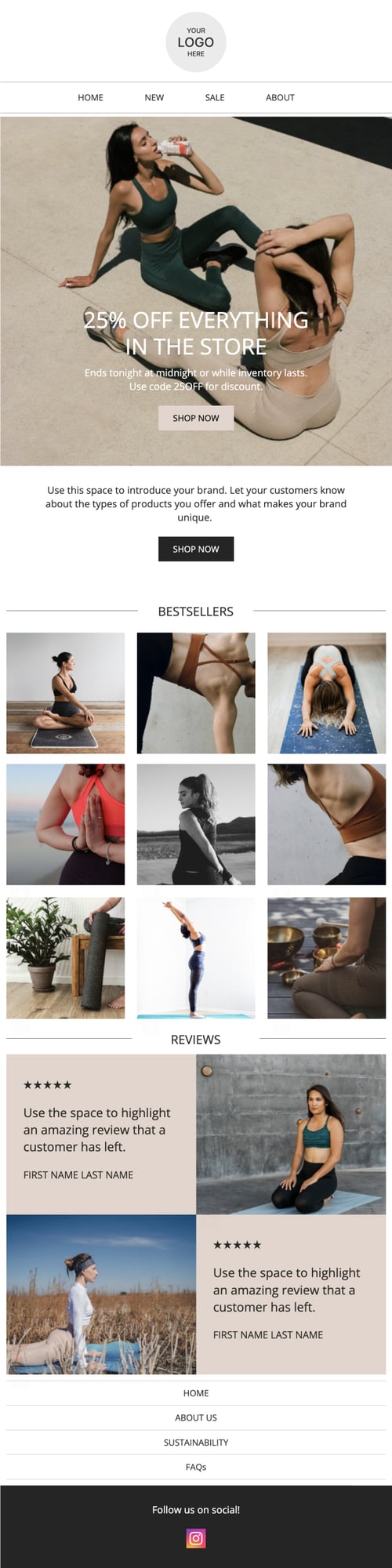

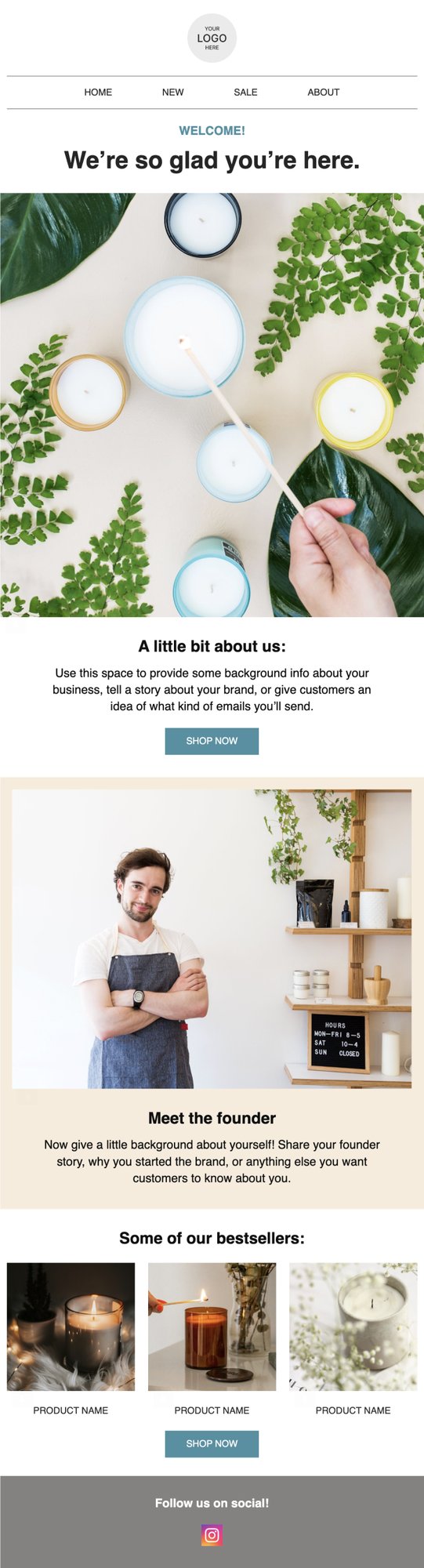

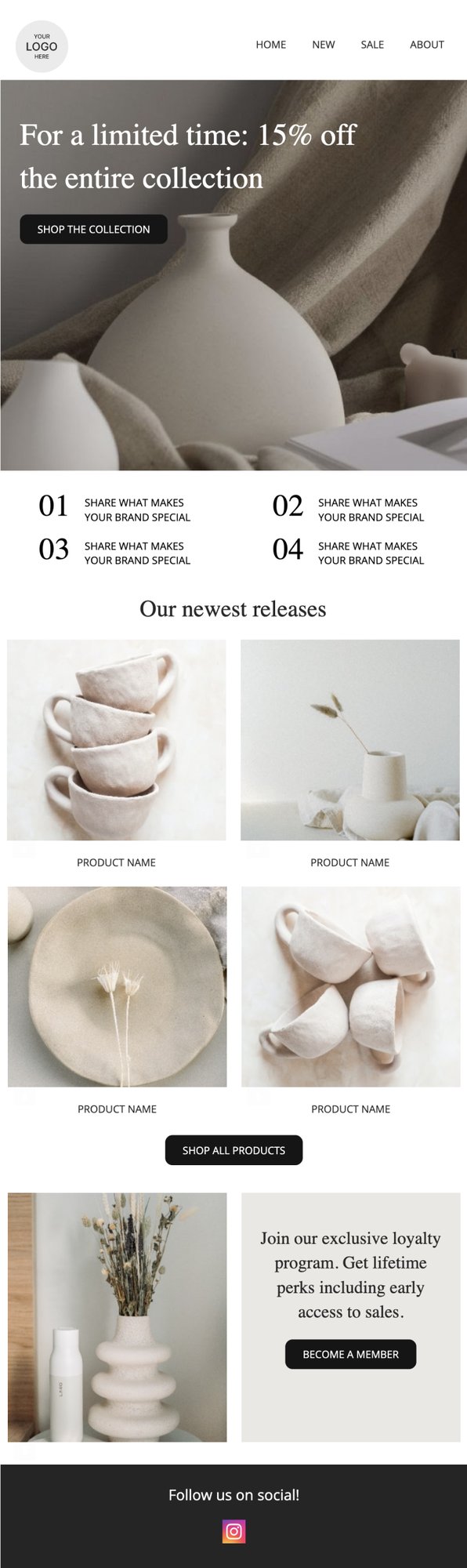

PRIVY EMAIL TEMPLATE

Welcome to the Brand

Greet new subscribers and take the opportunity to tell them a bit about you and your brand.

Try this template

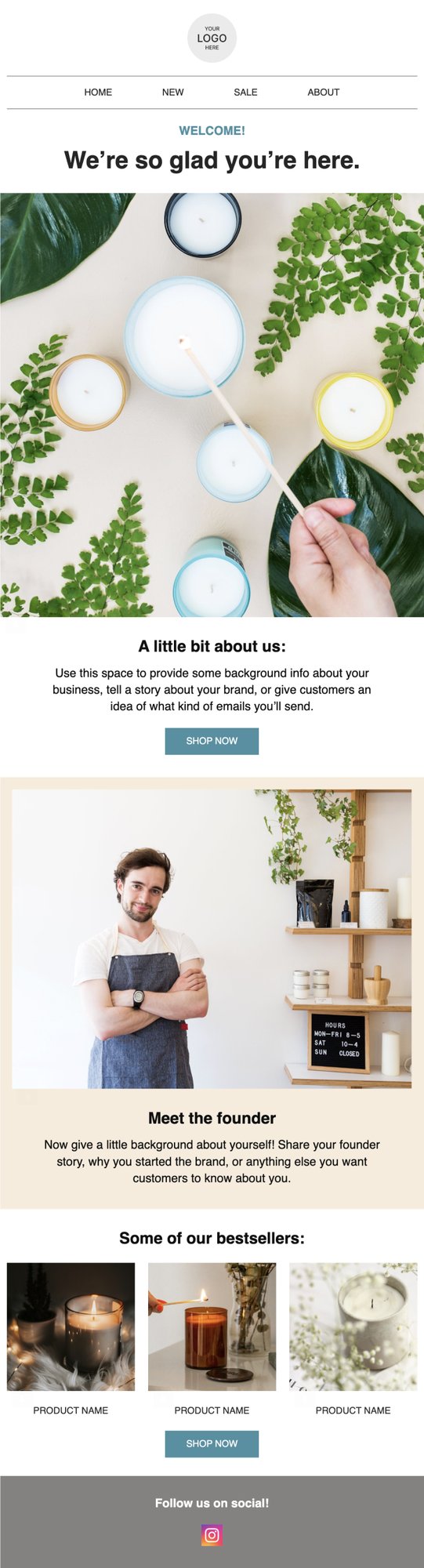

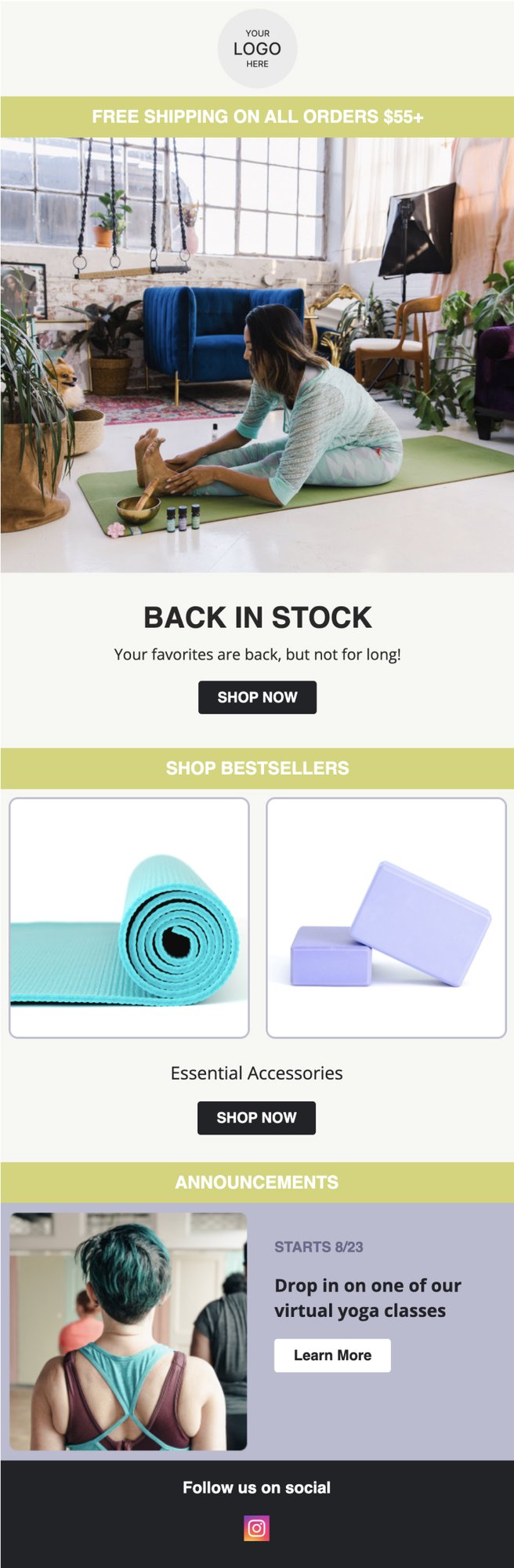

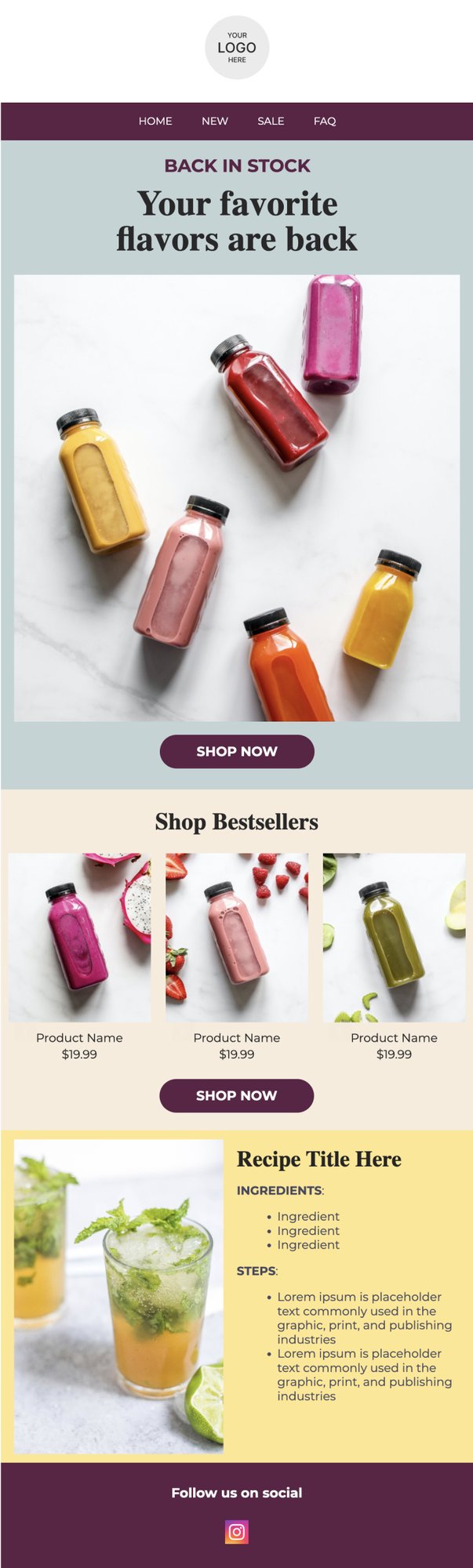

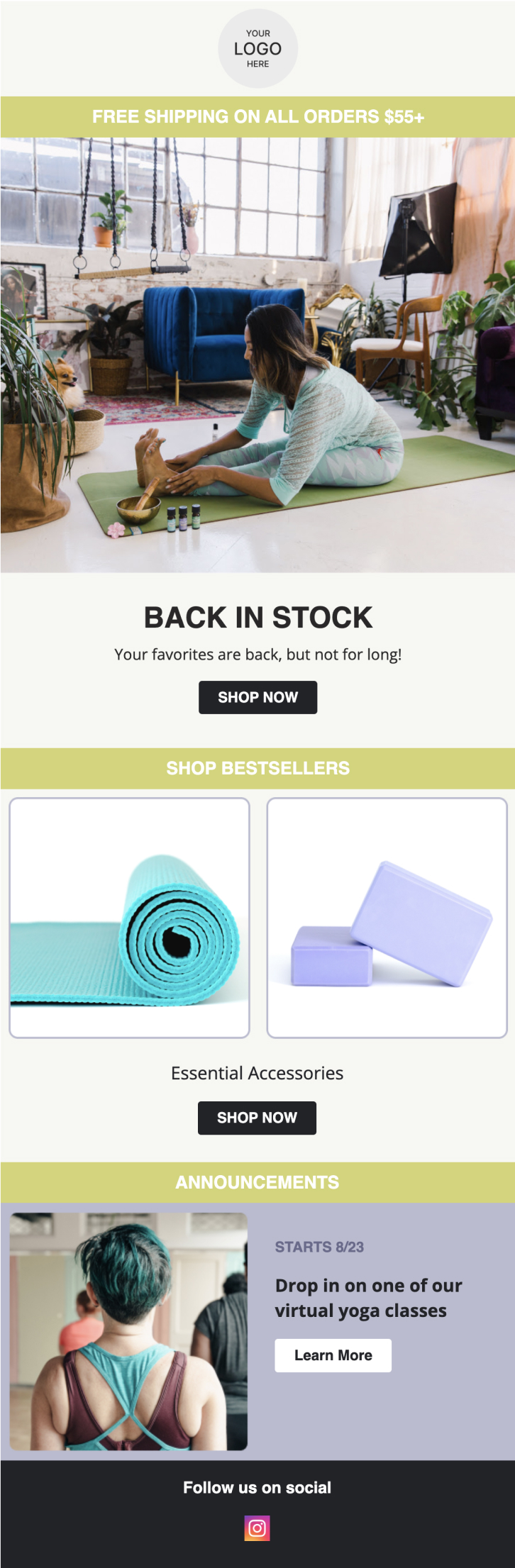

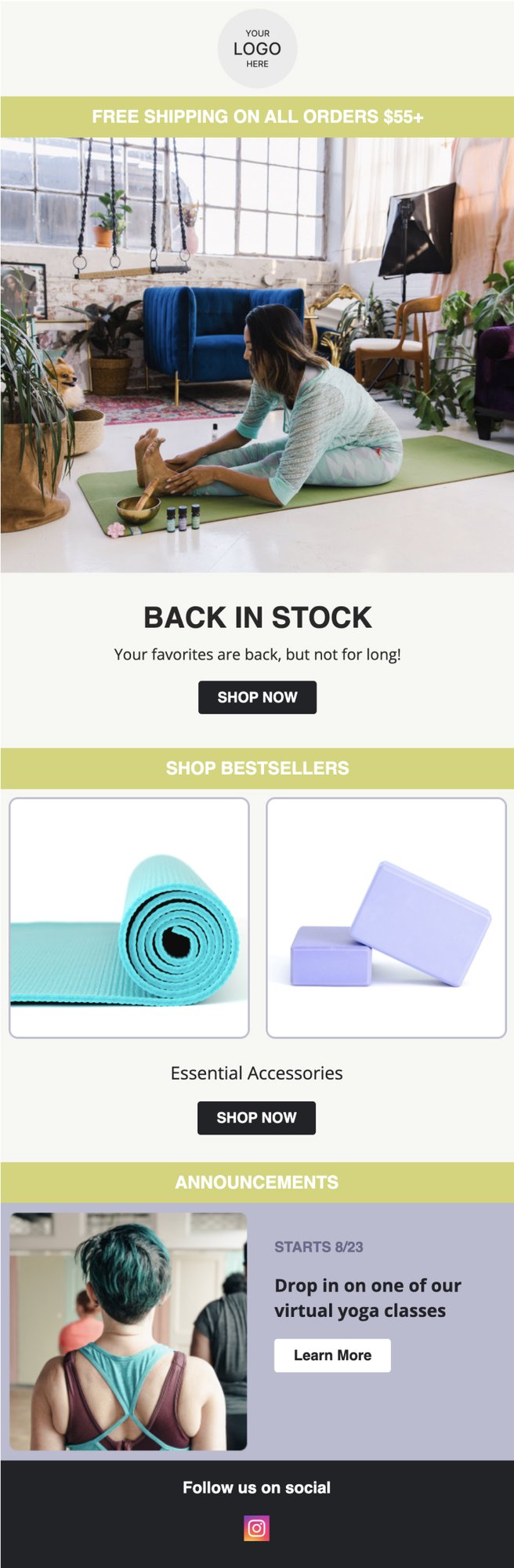

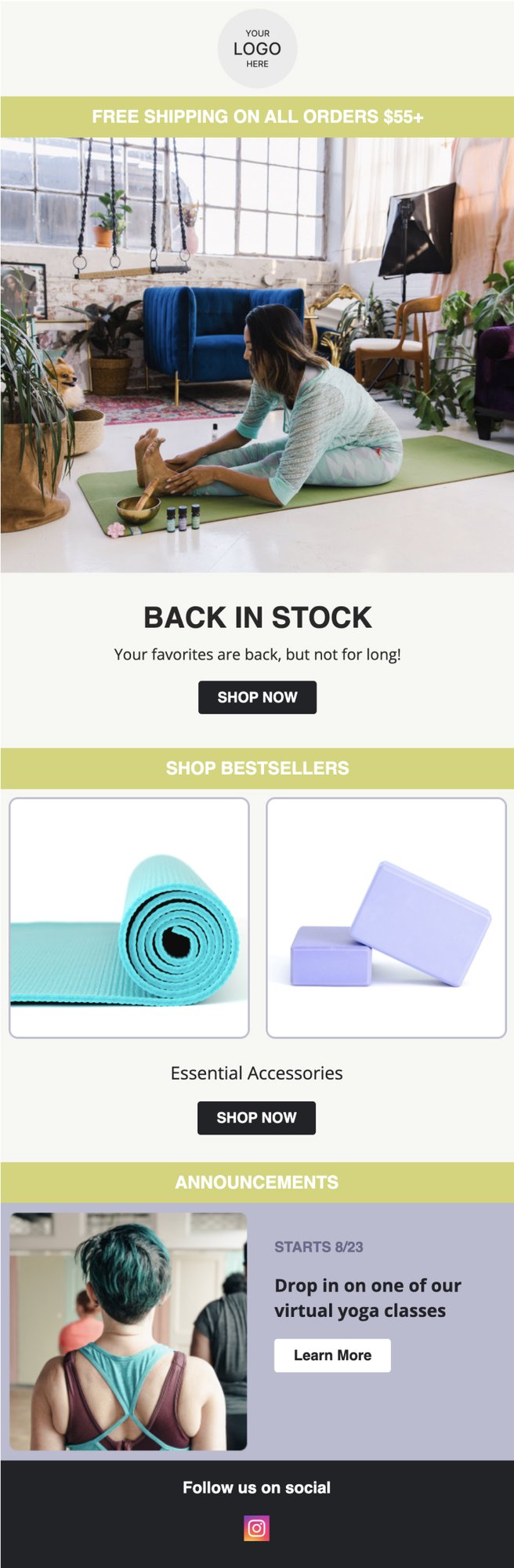

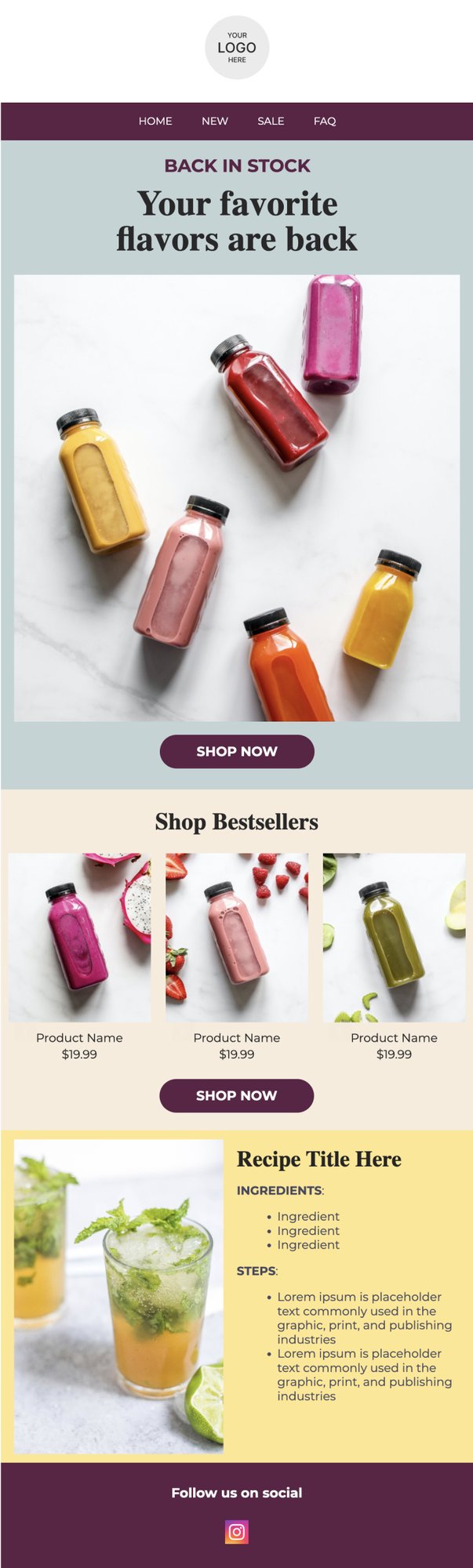

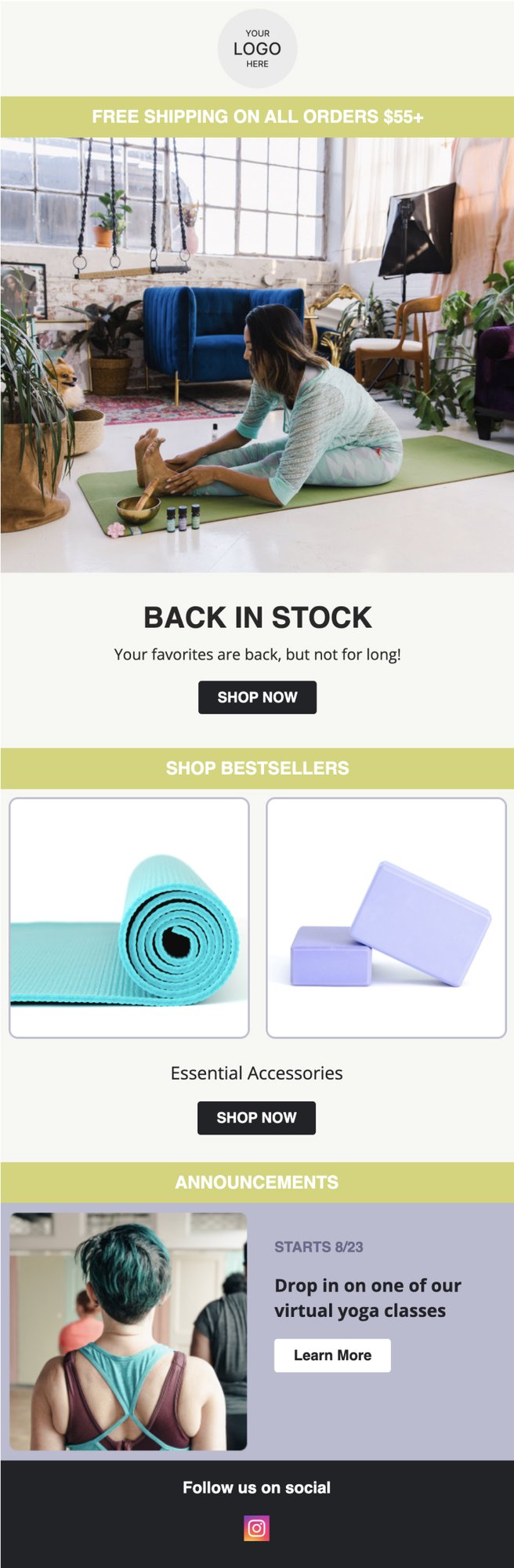

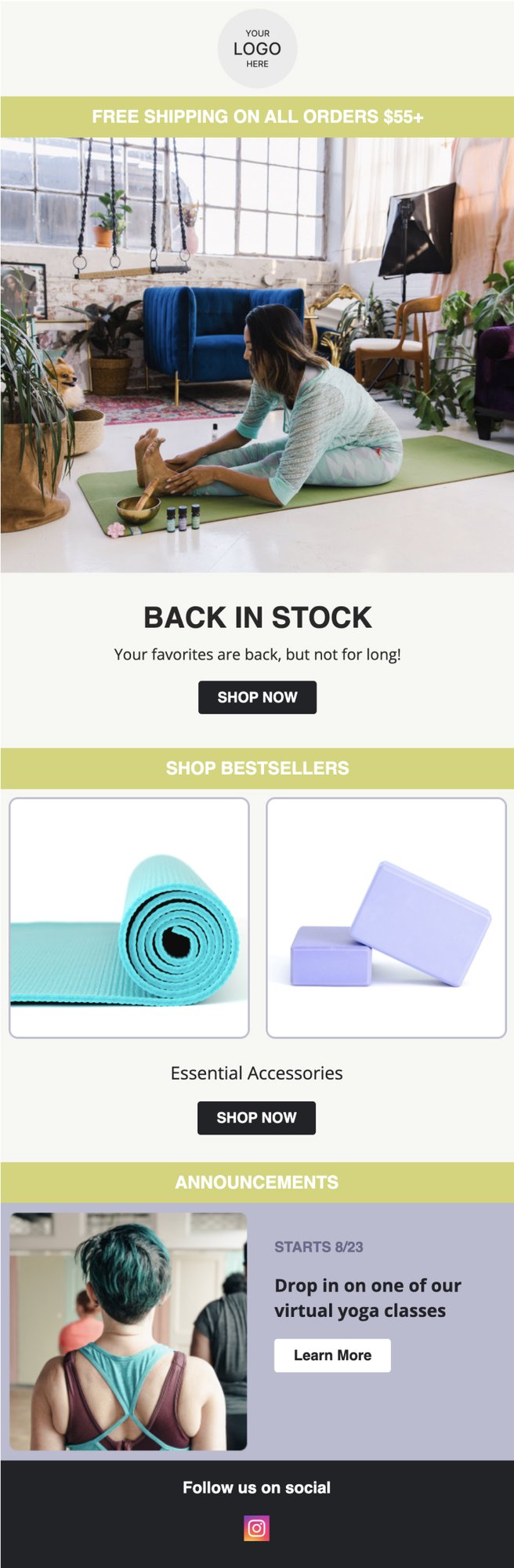

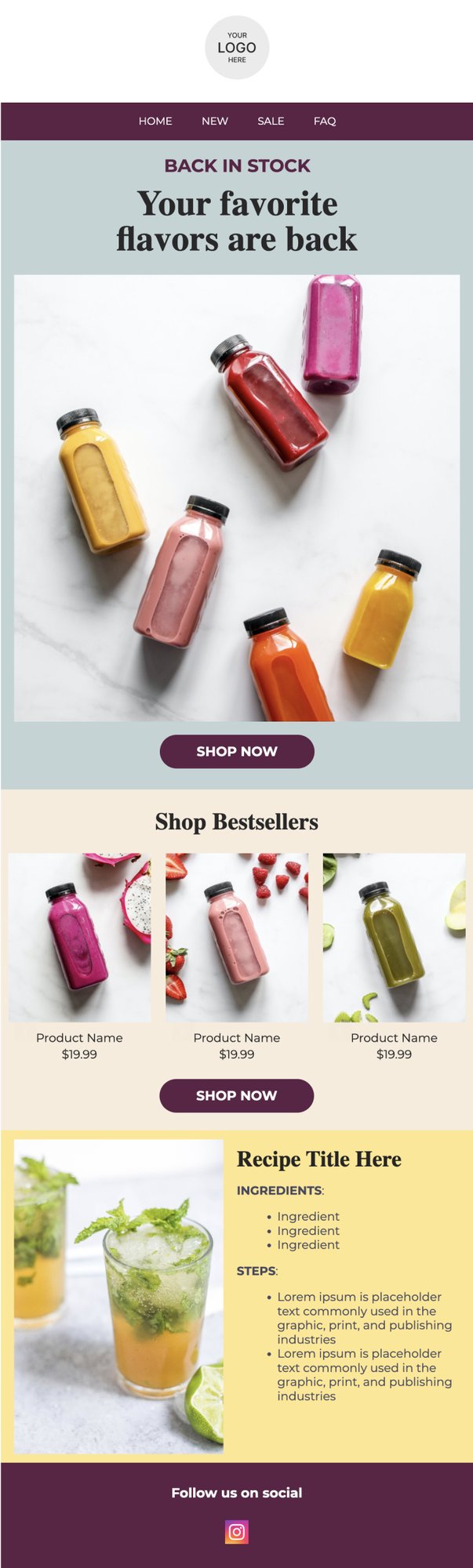

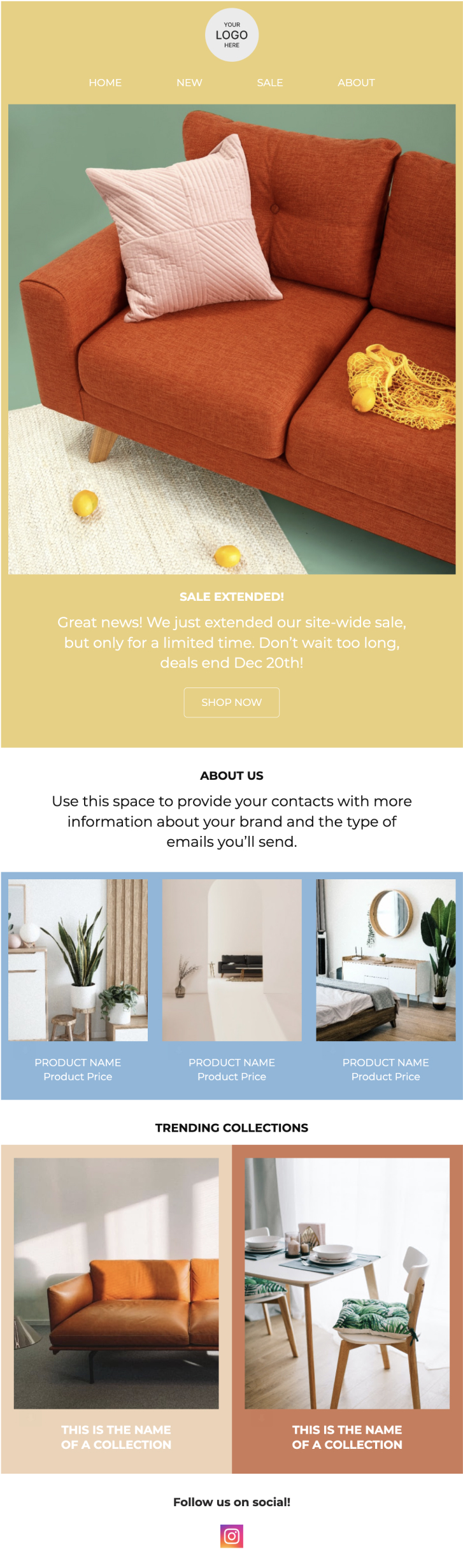

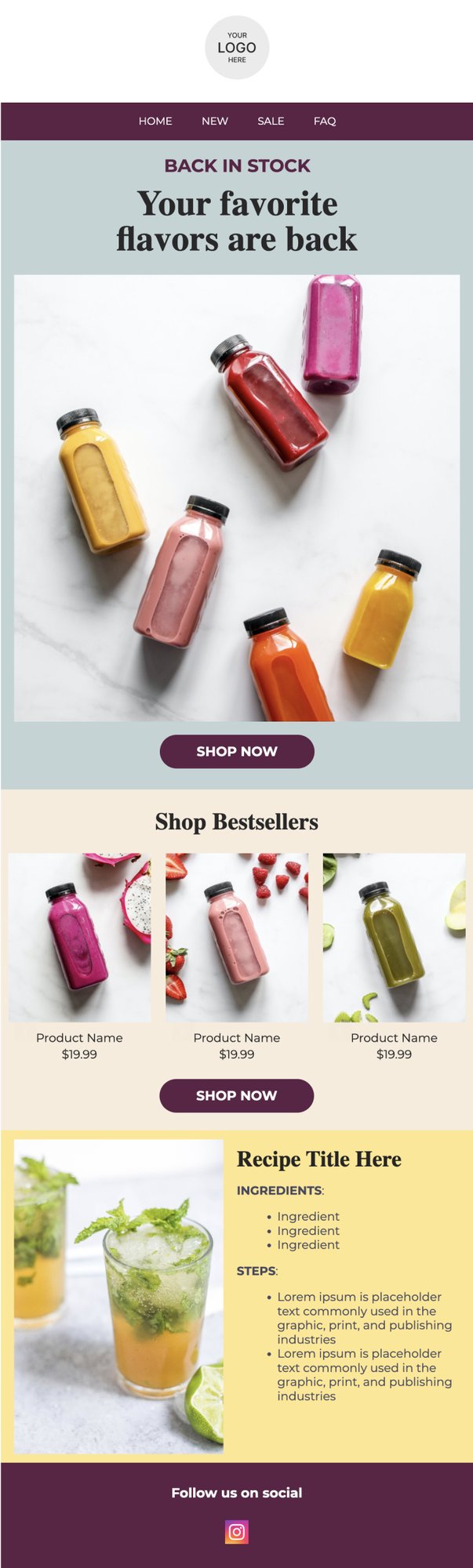

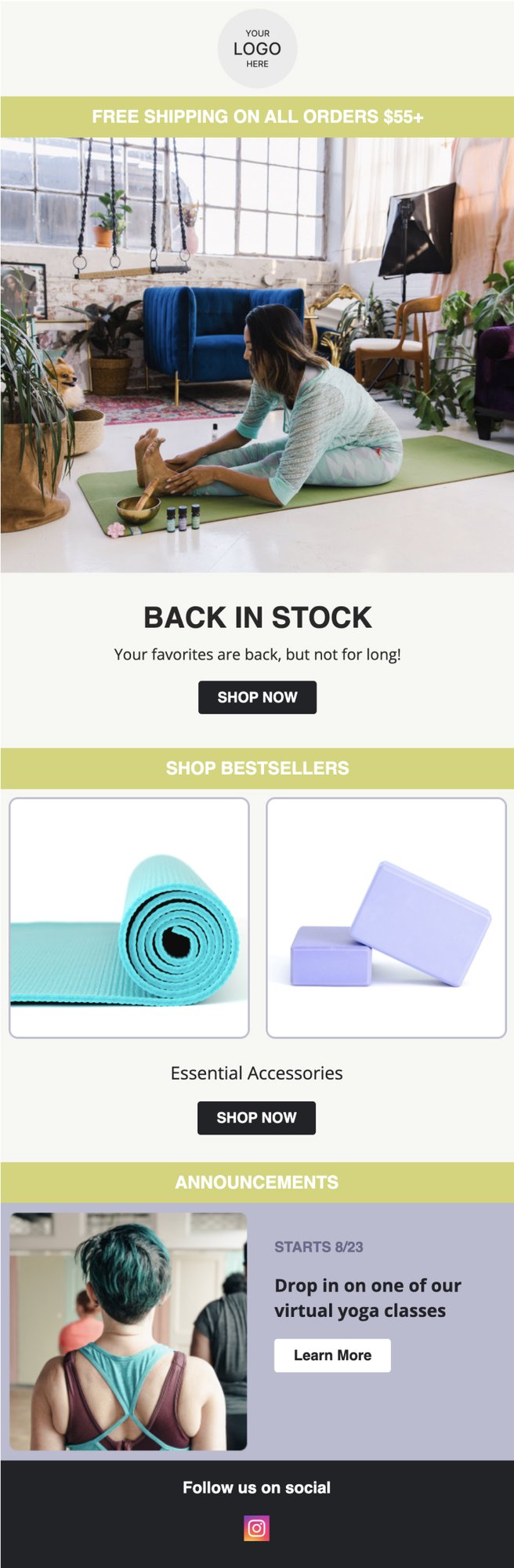

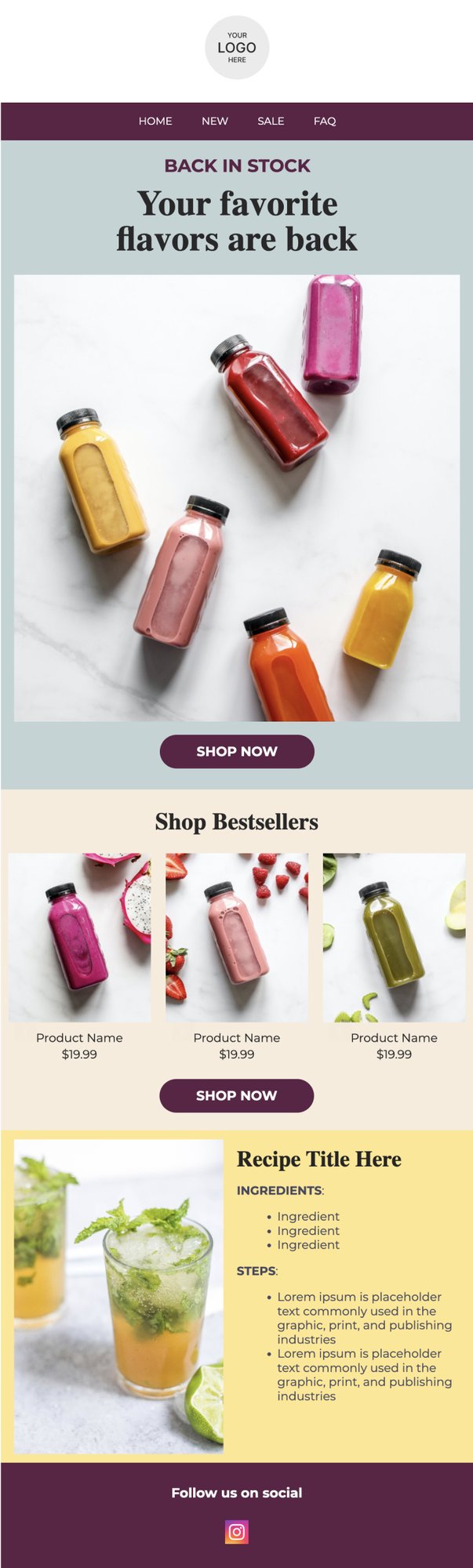

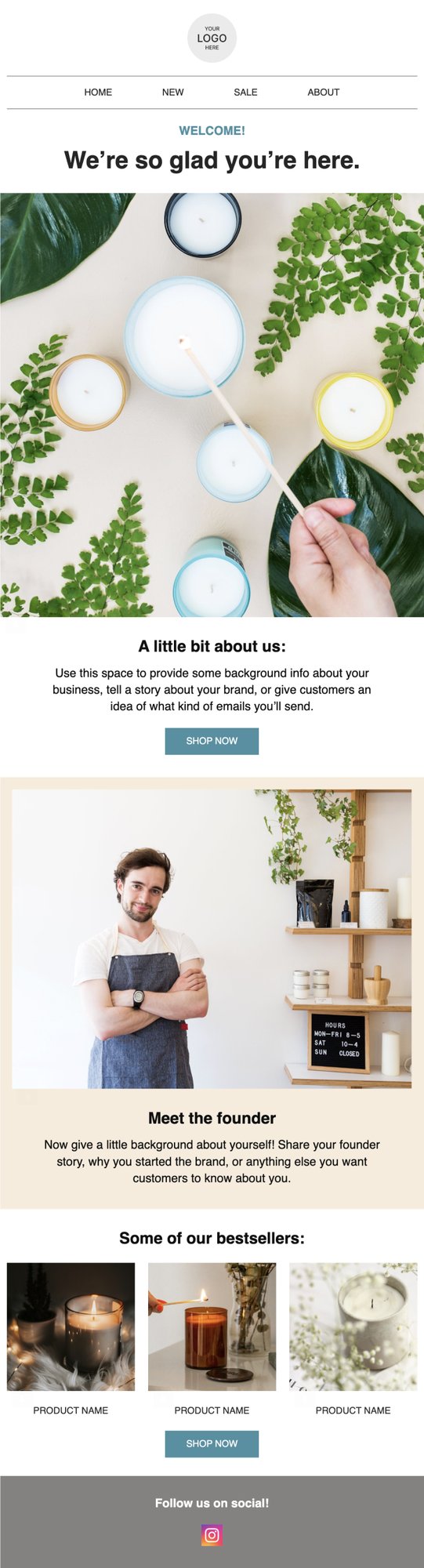

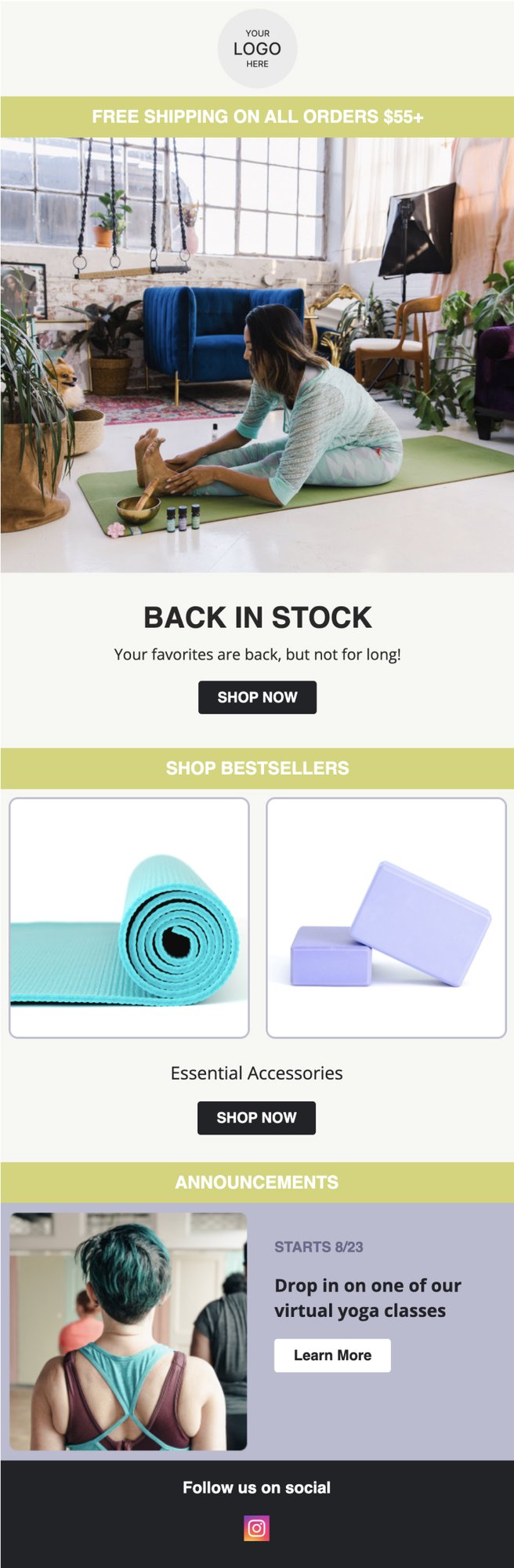

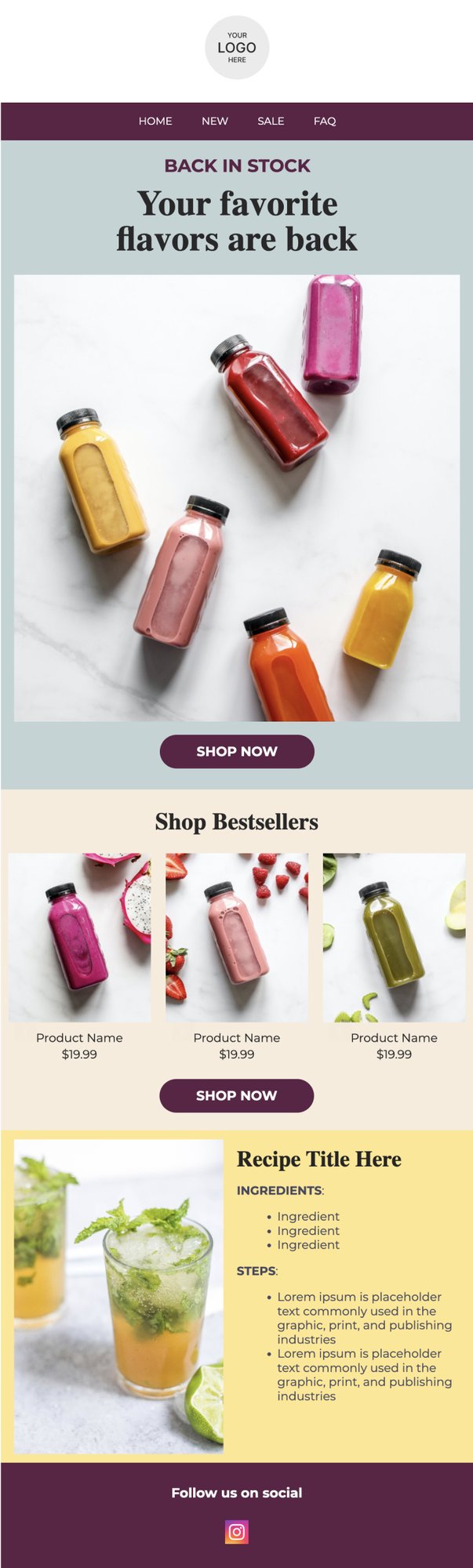

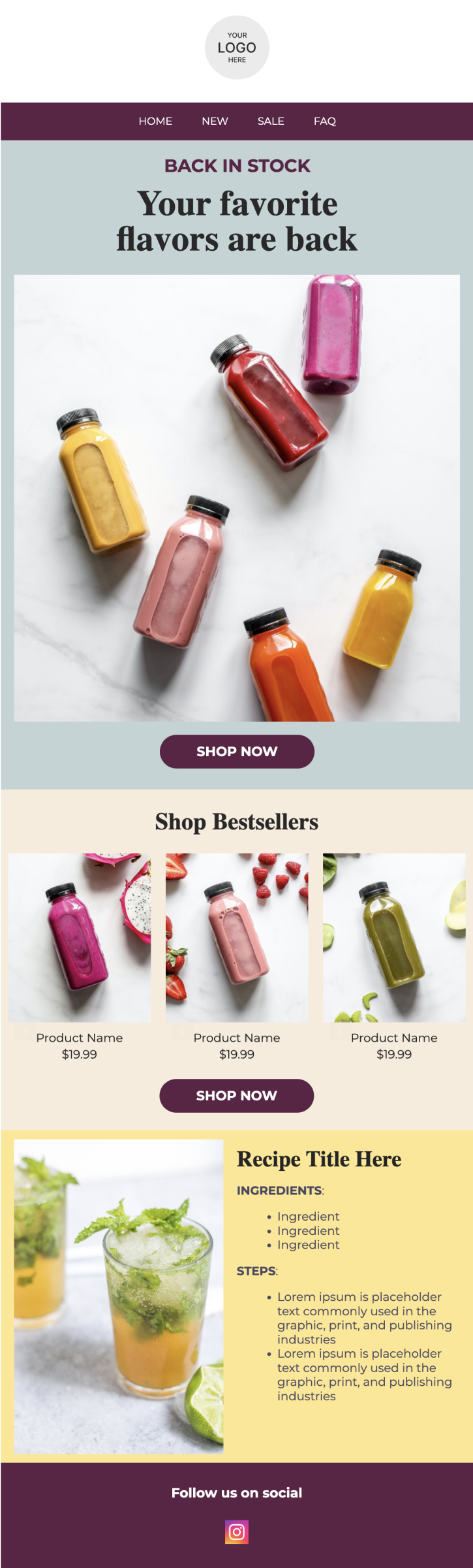

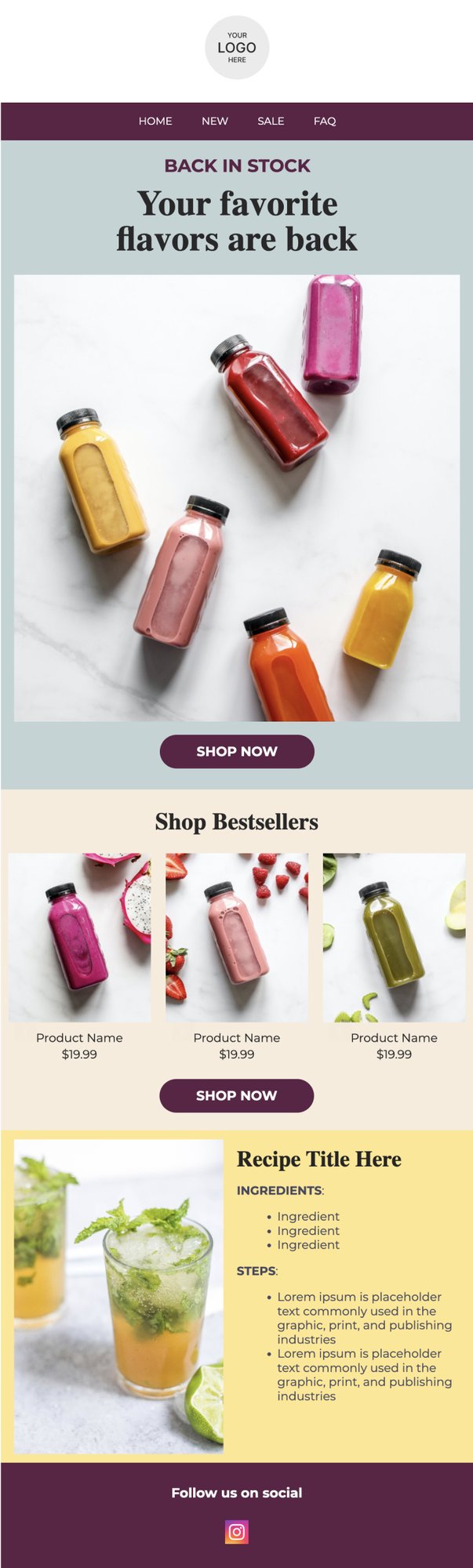

PRIVY EMAIL TEMPLATE

Back in Stock Announcement

Create urgency by letting your customers know that their favorite products are back, but they need to act fast before they sell out again!

Try this template

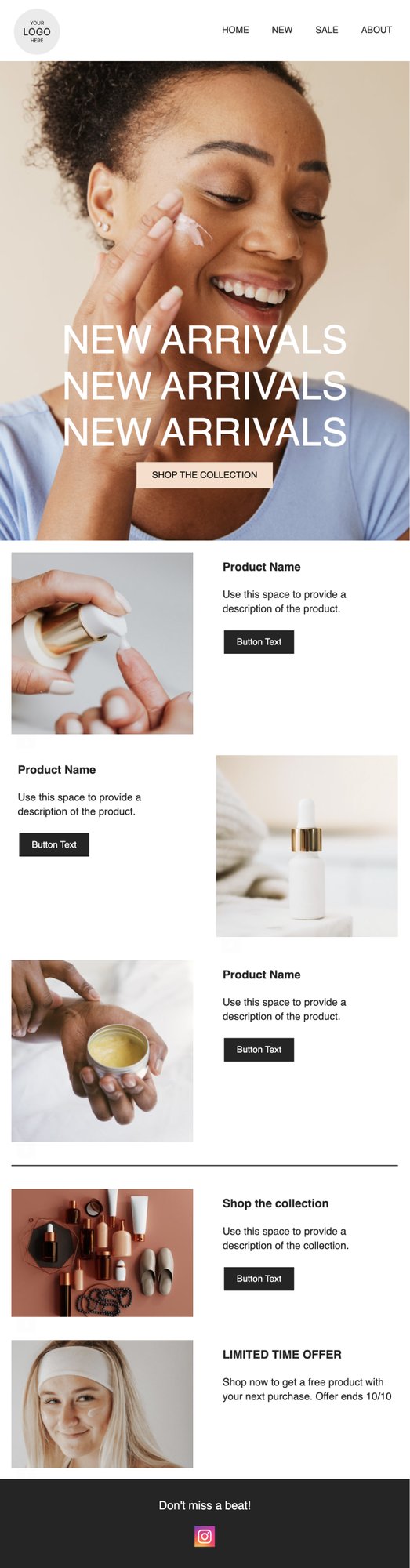

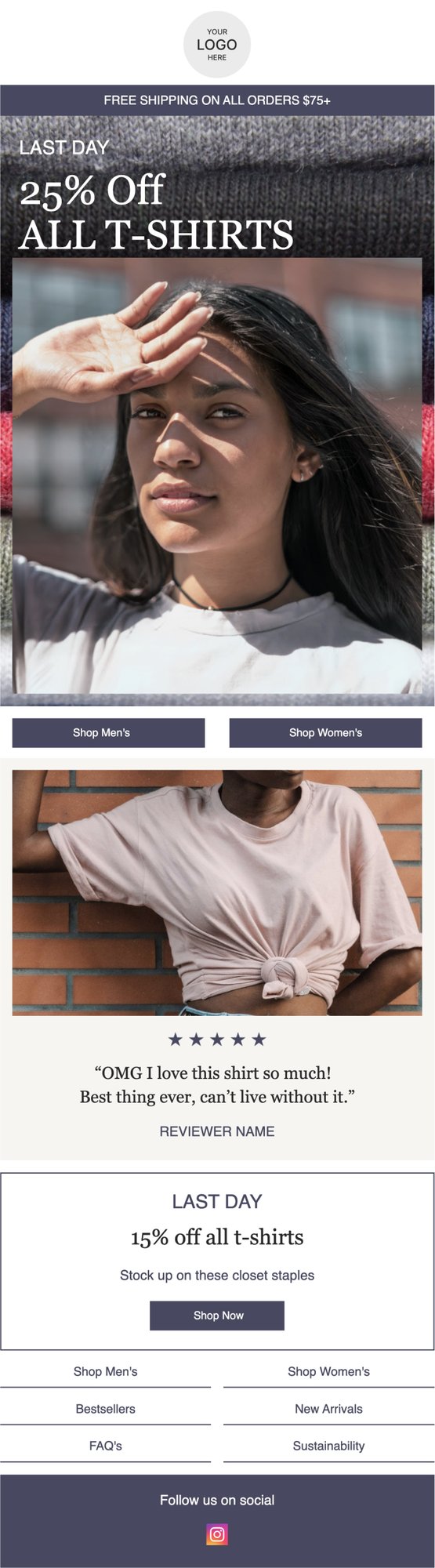

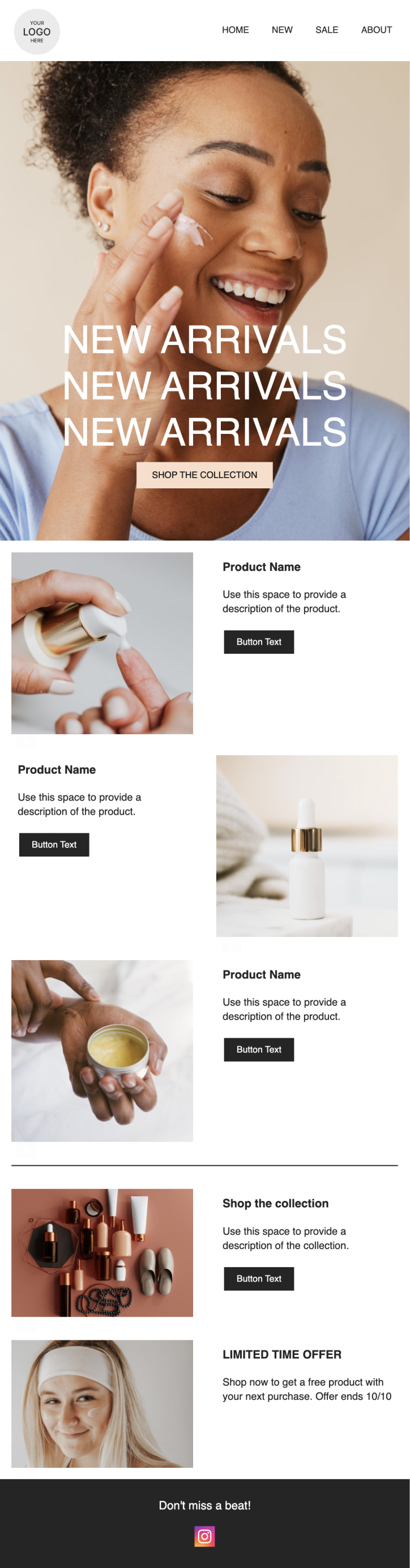

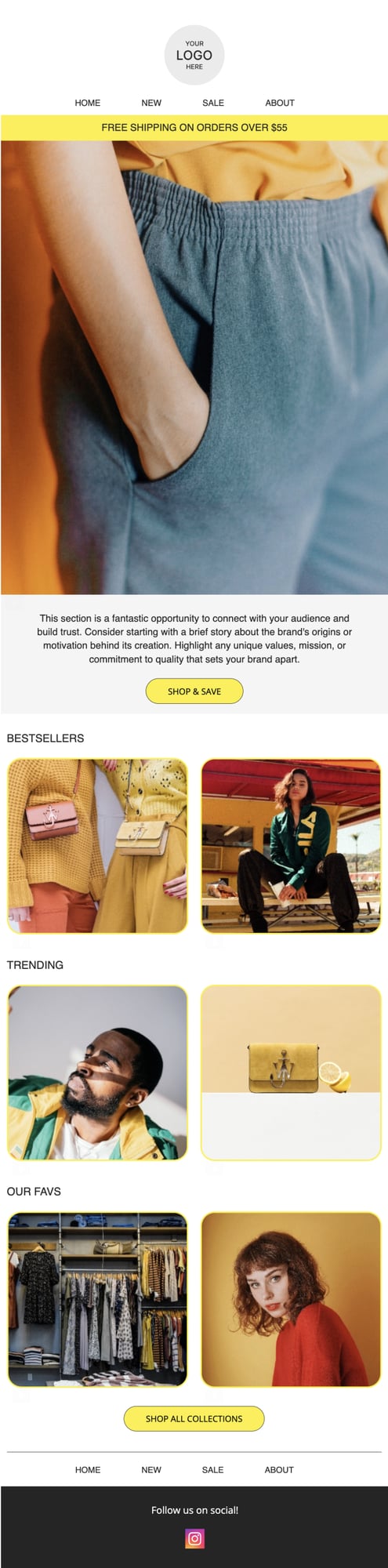

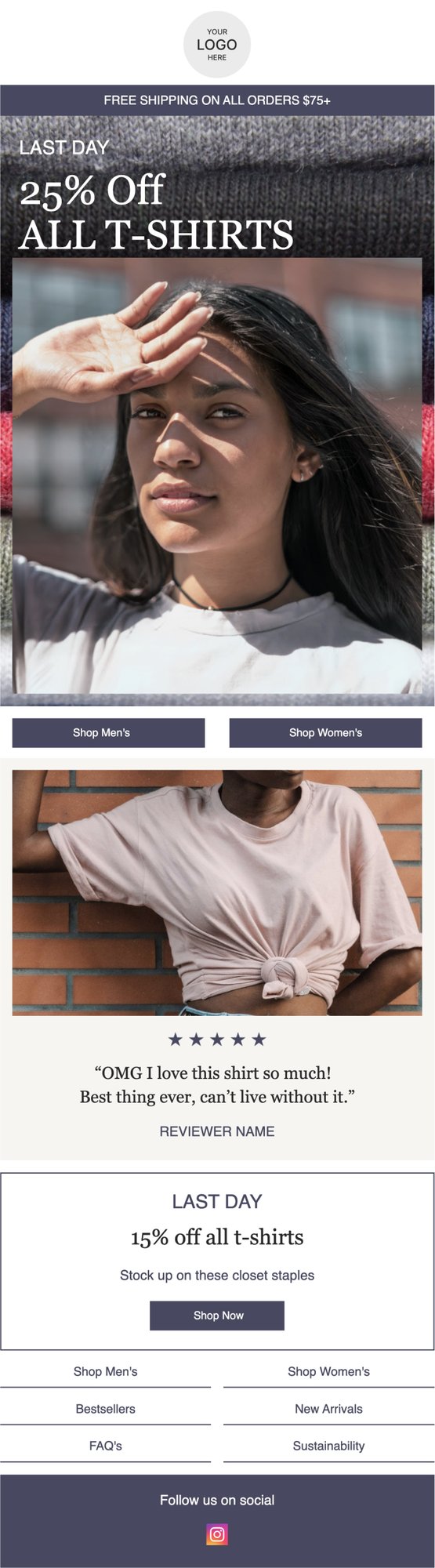

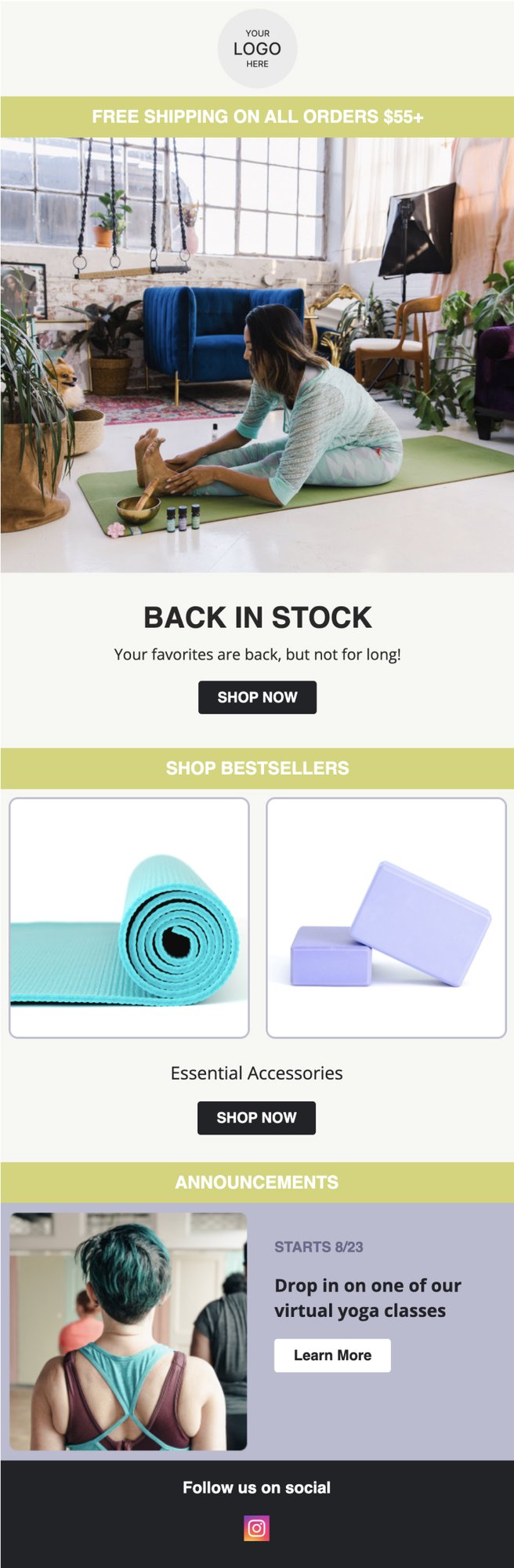

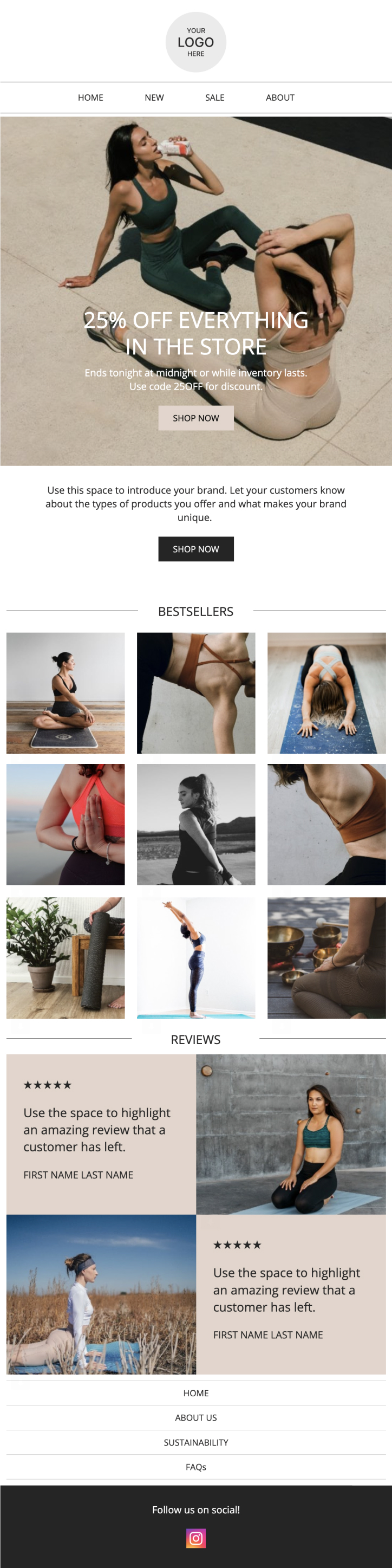

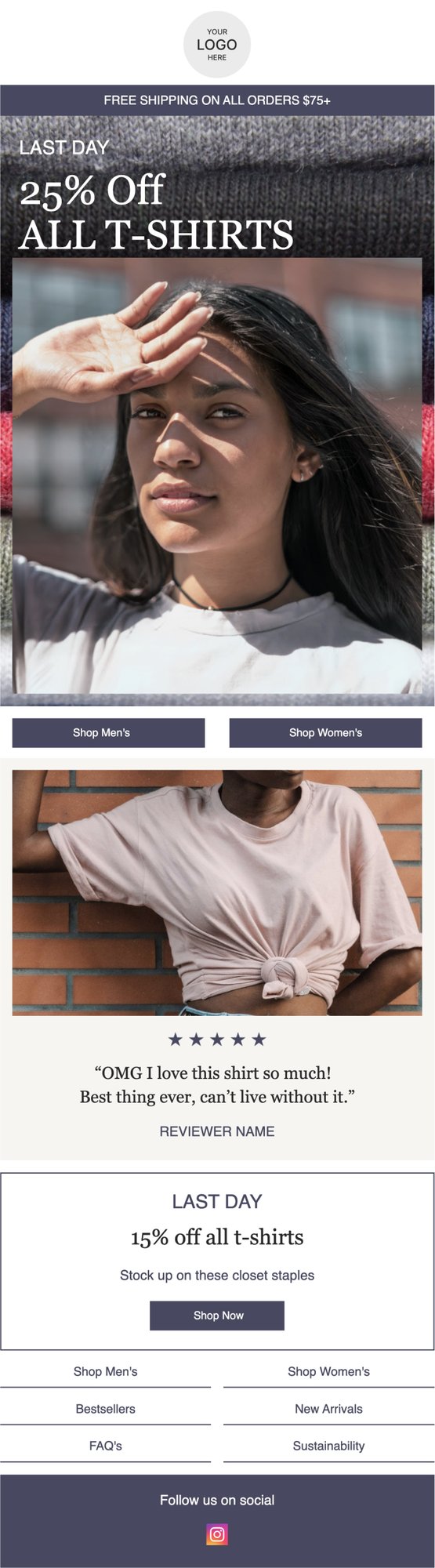

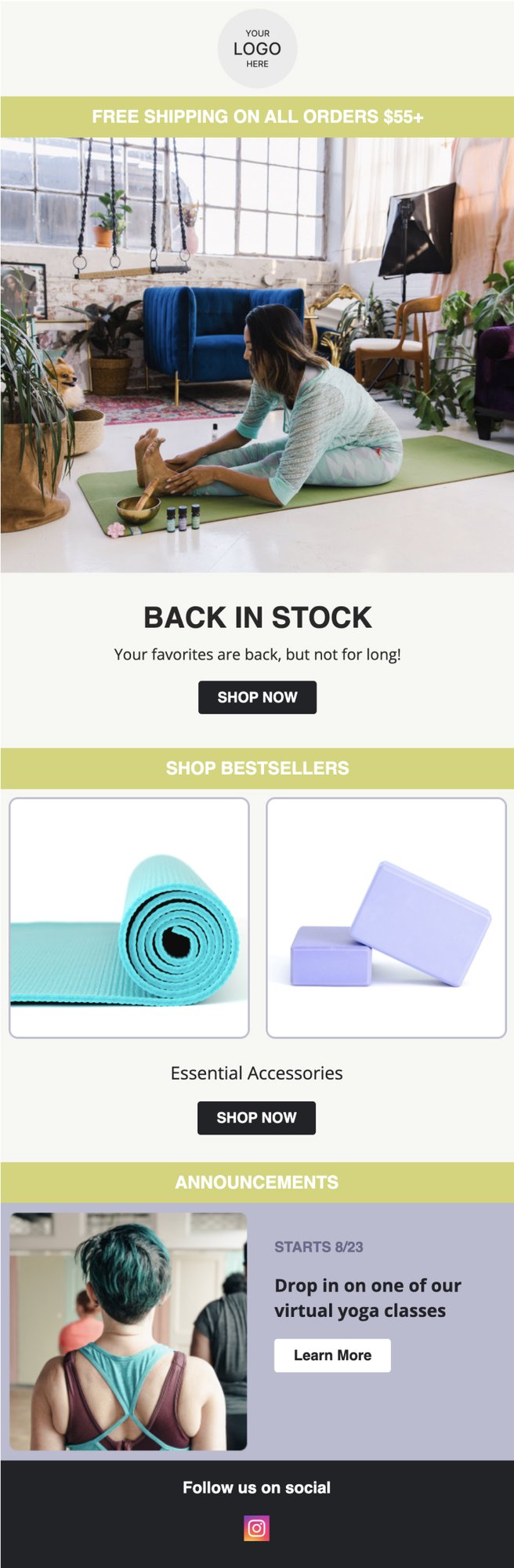

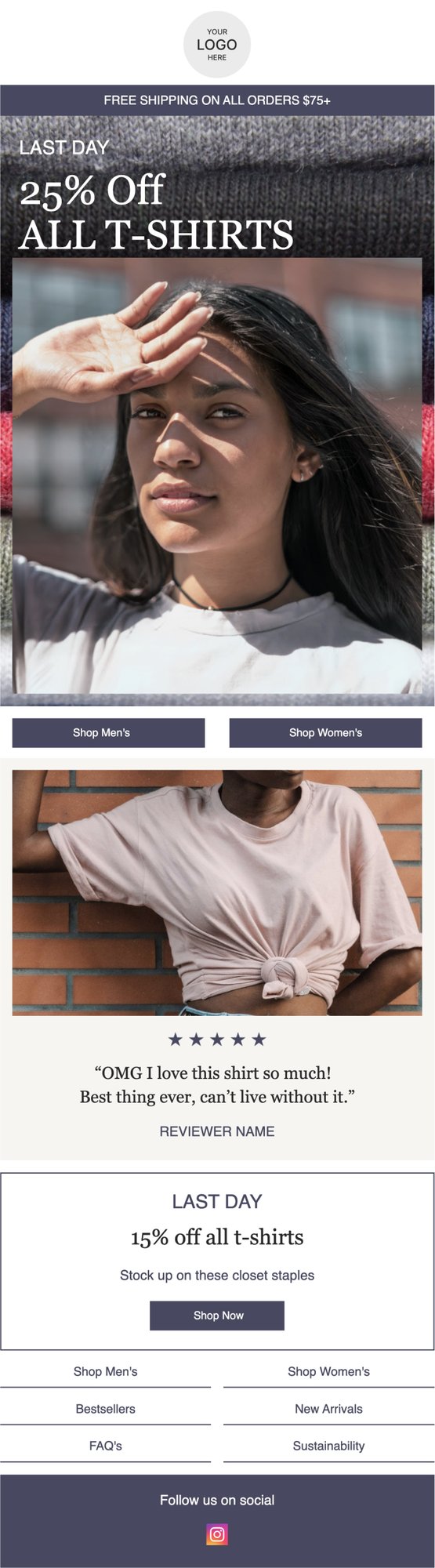

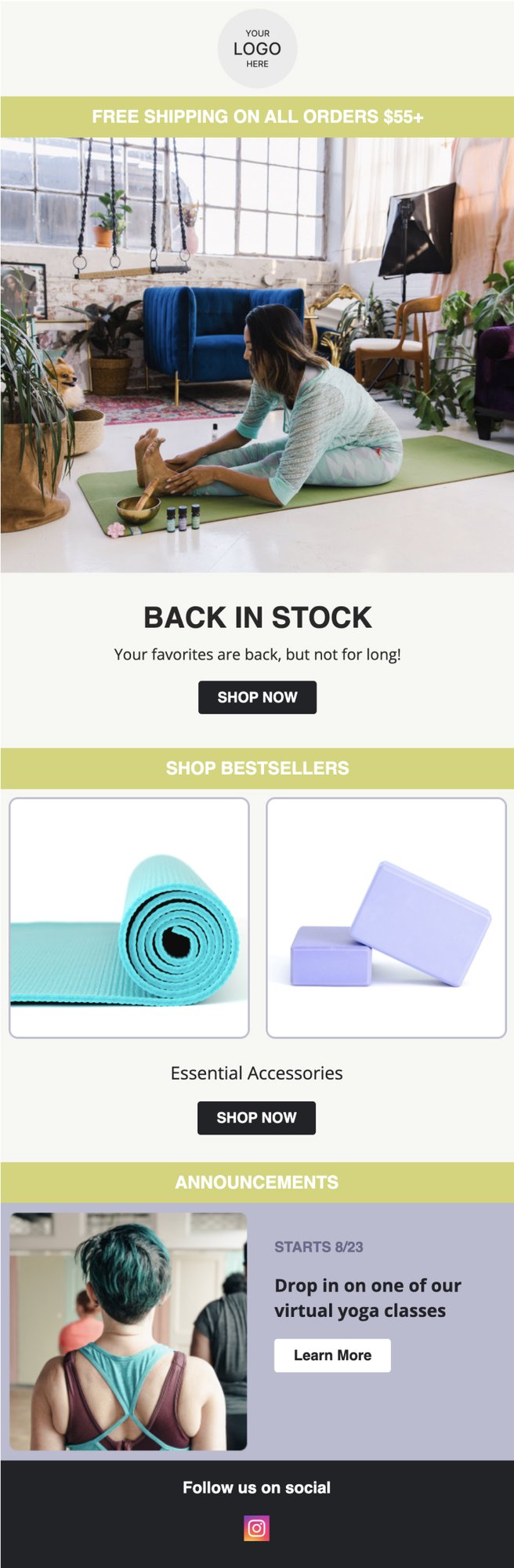

PRIVY EMAIL TEMPLATE

Featured Product

Use this template to dive behind the scenes of one or more of your bestsellers. Let your customers know exactly why they'll love your products!

Try this template

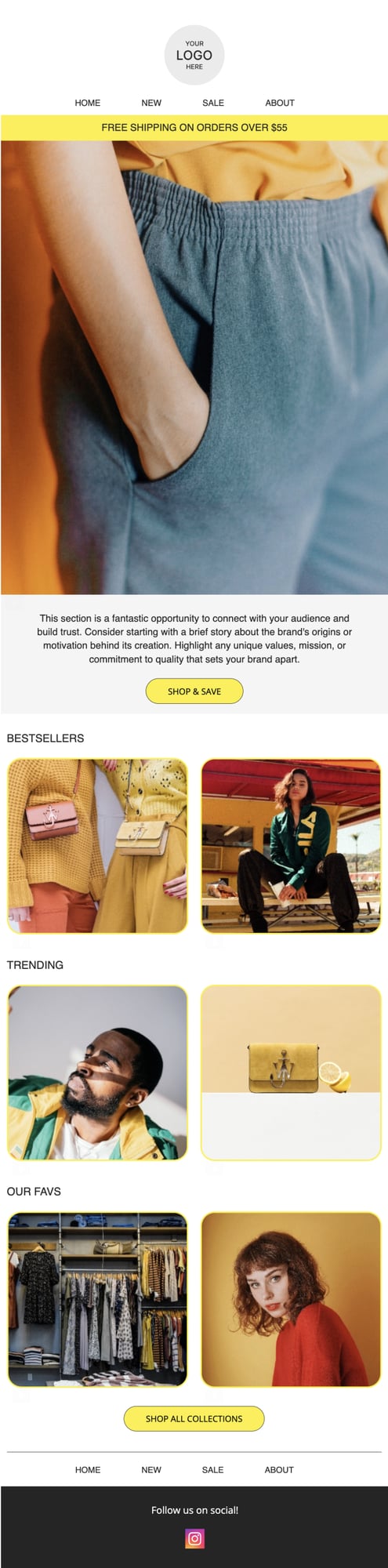

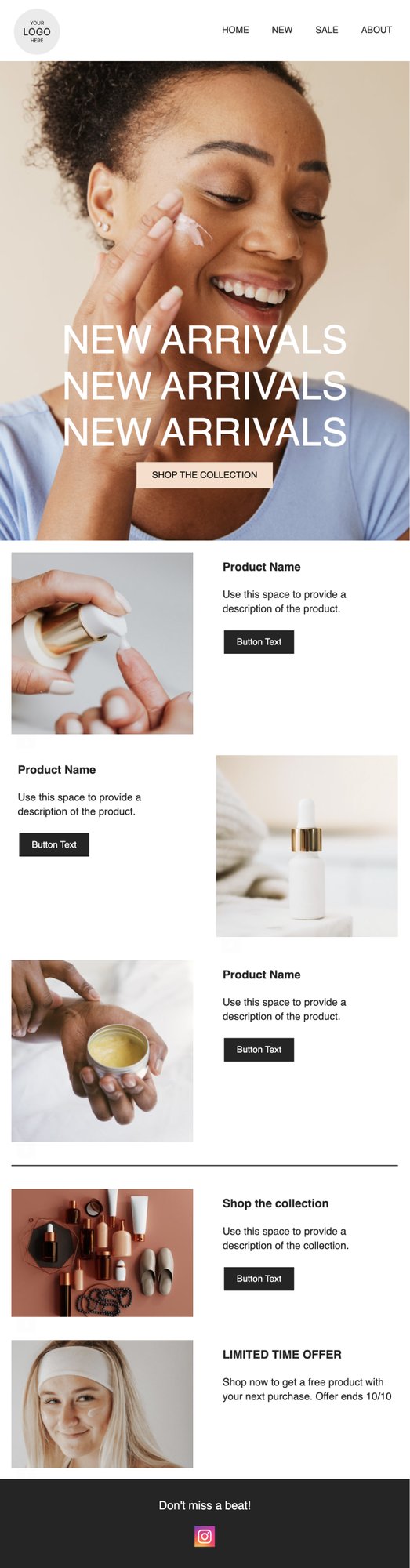

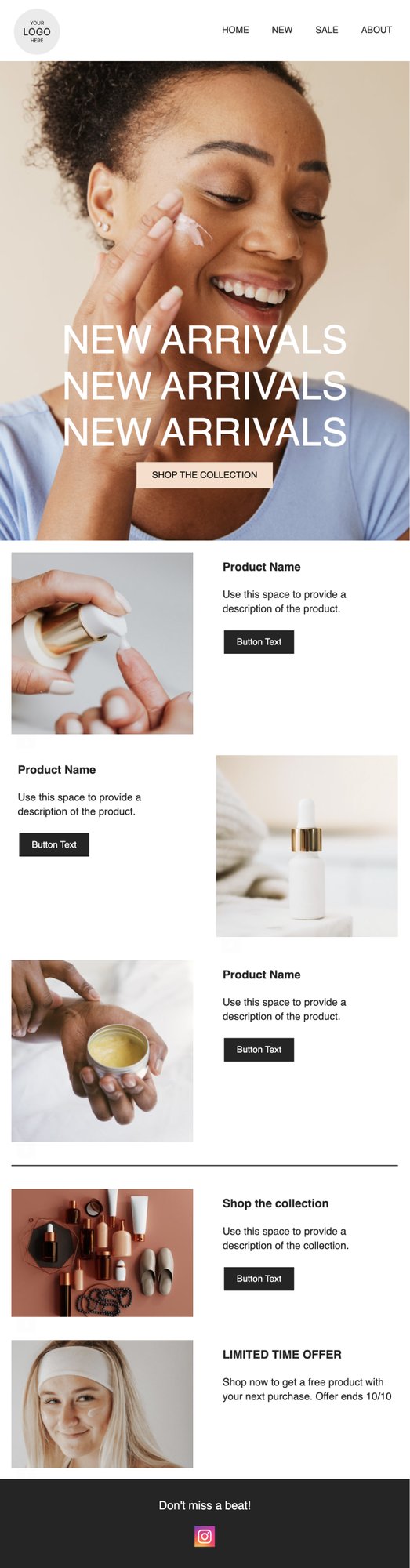

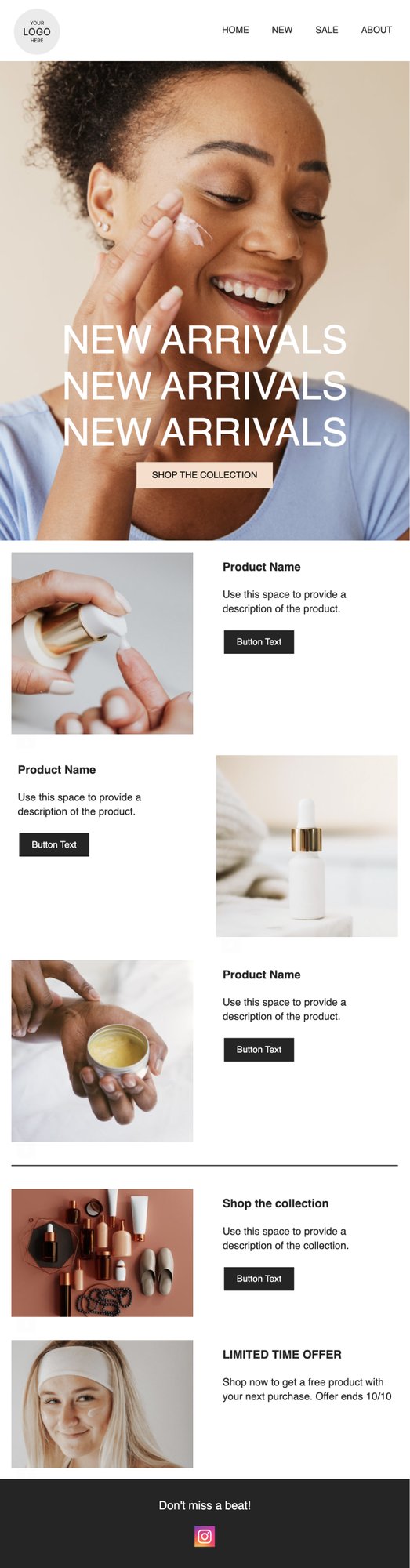

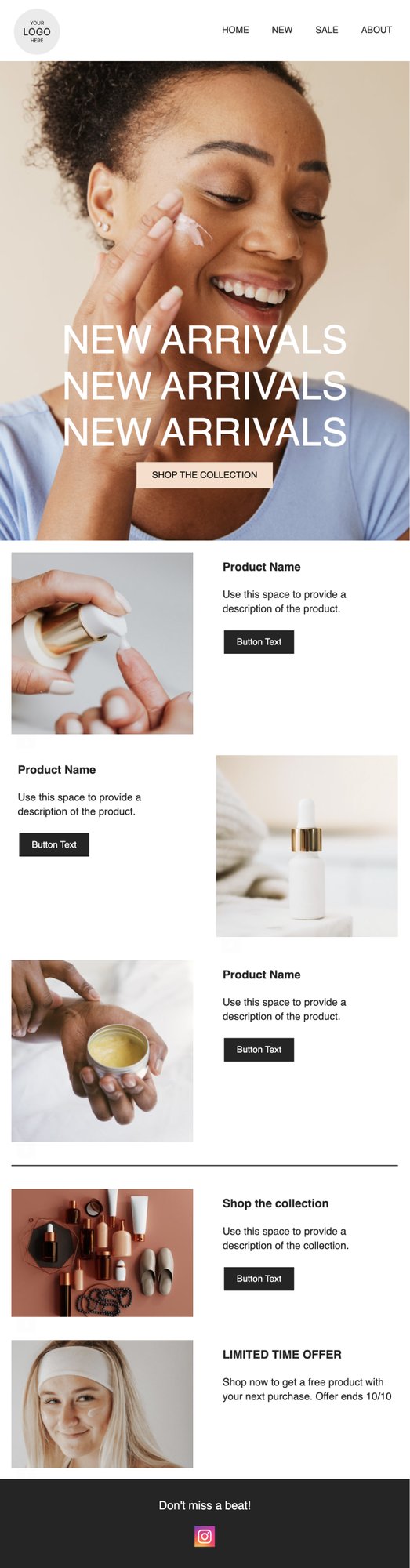

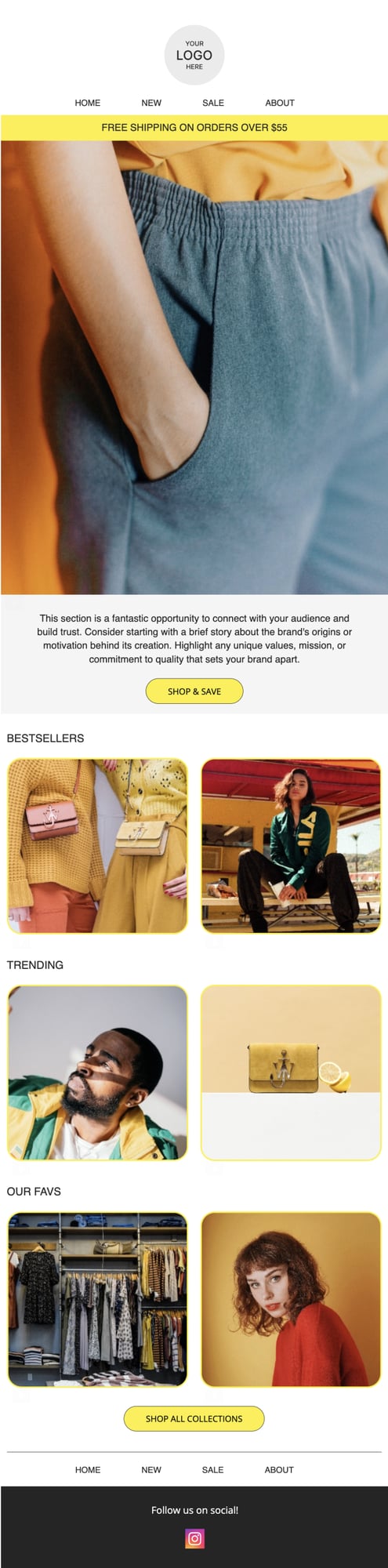

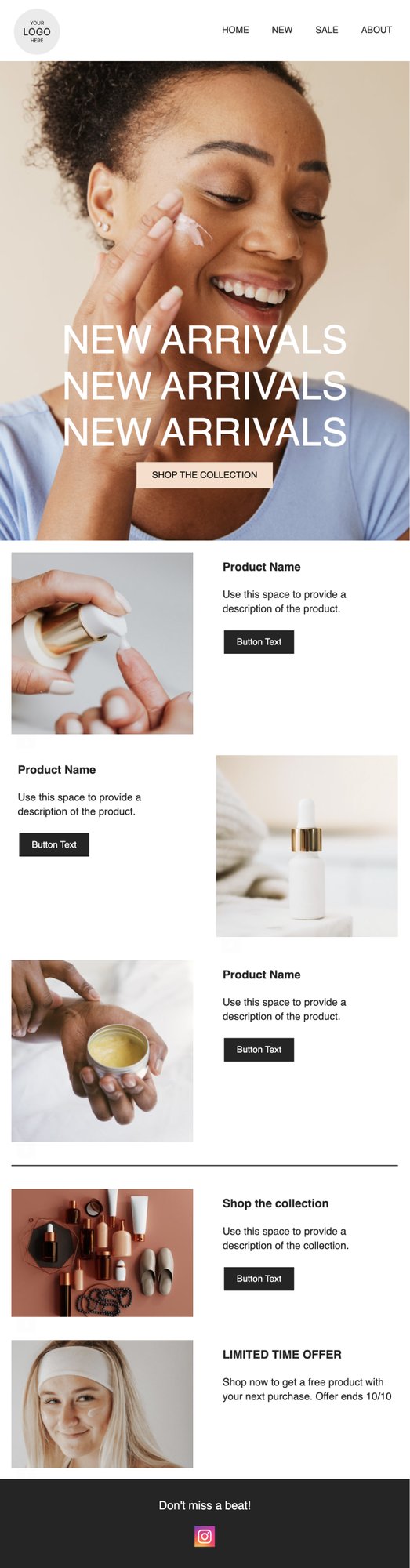

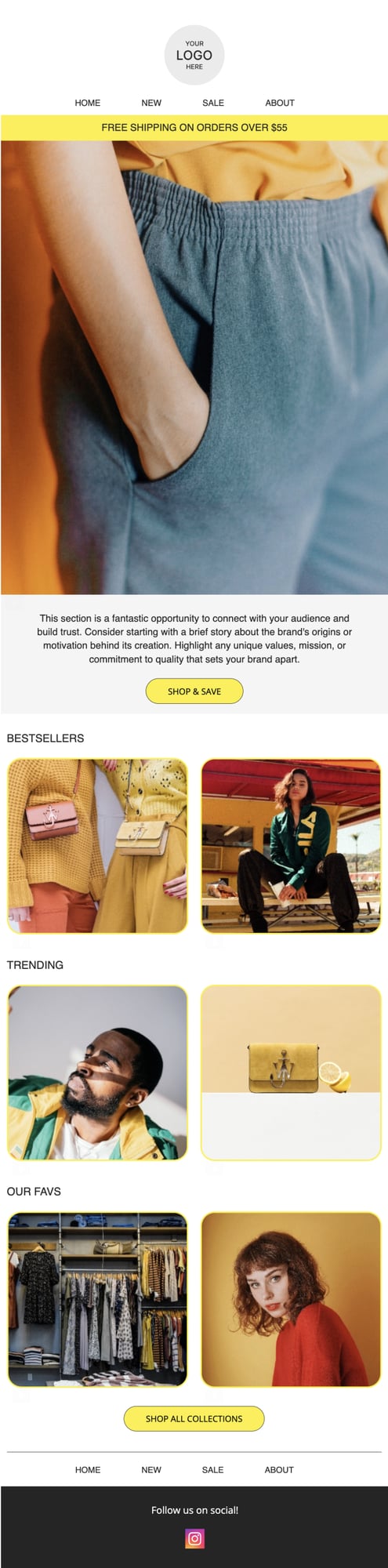

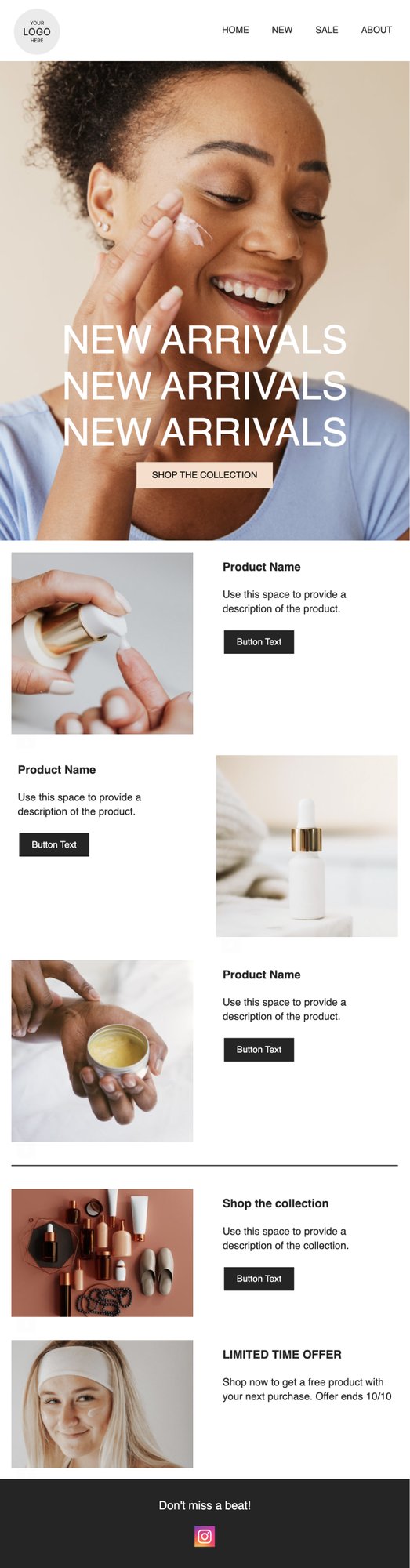

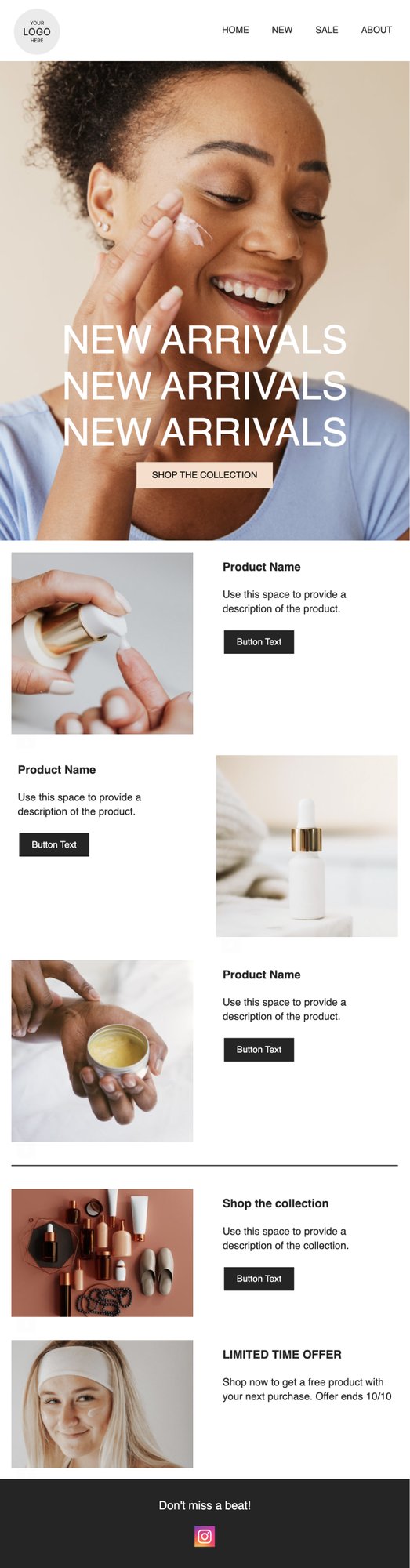

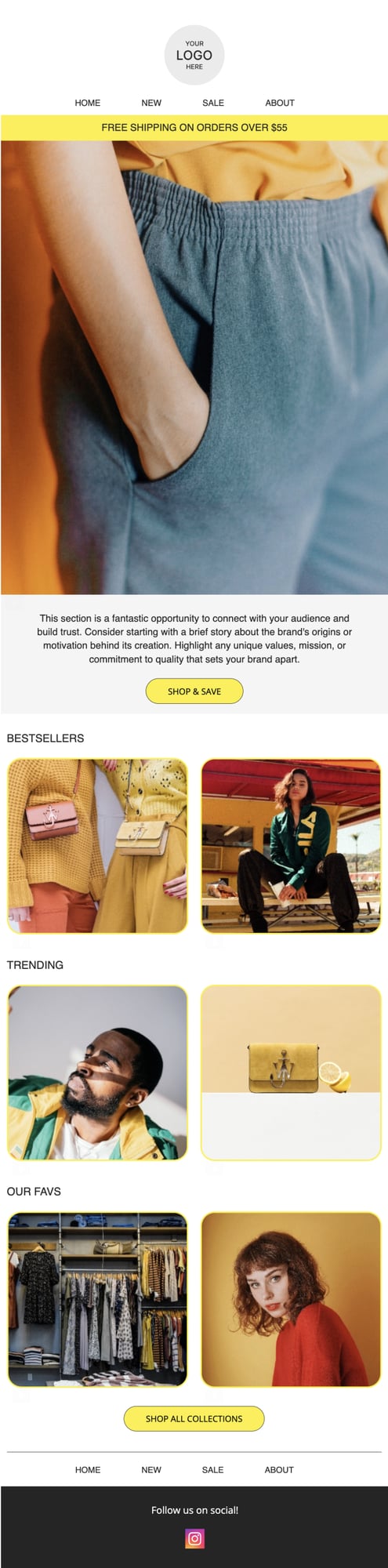

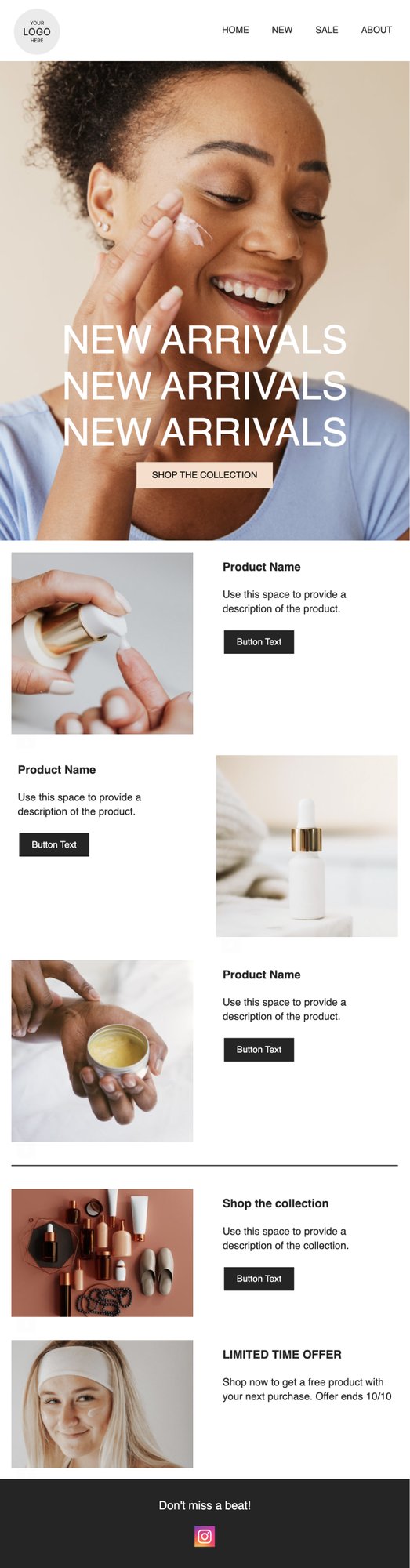

PRIVY EMAIL TEMPLATE

New Arrivals Announcement

Launching some new products? Let your customers know with this sleek newsletter.

Try this template

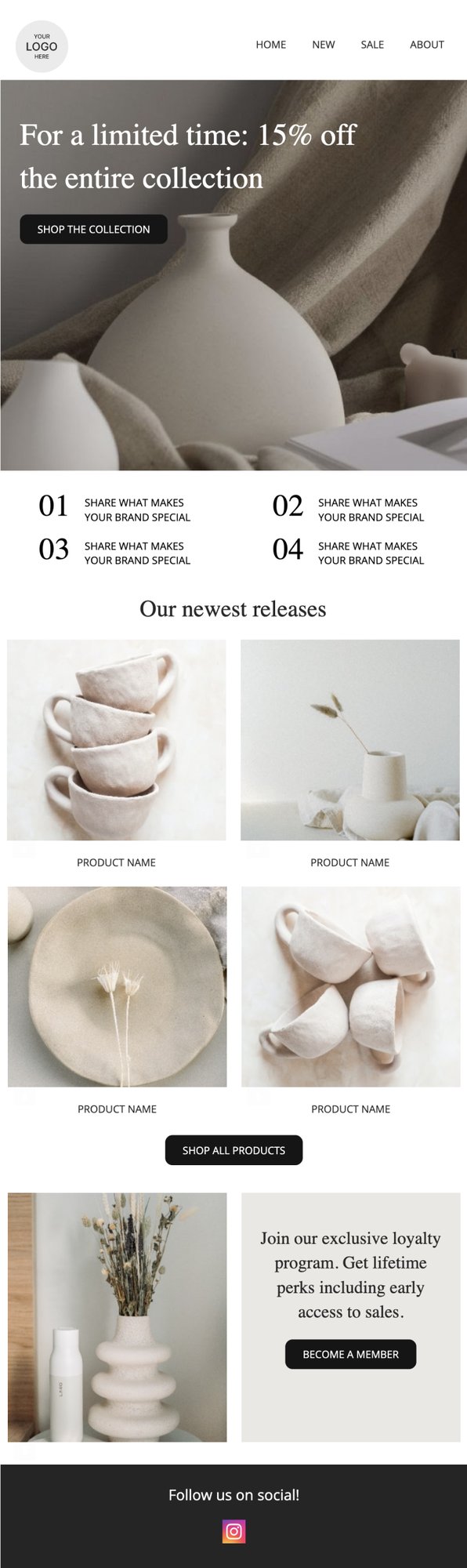

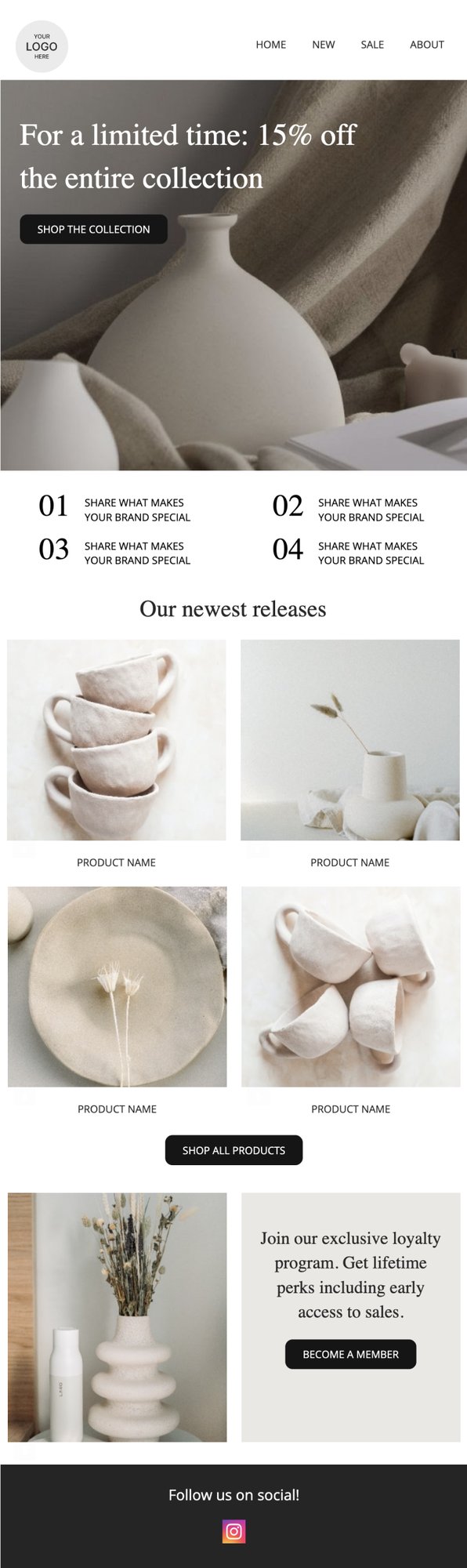

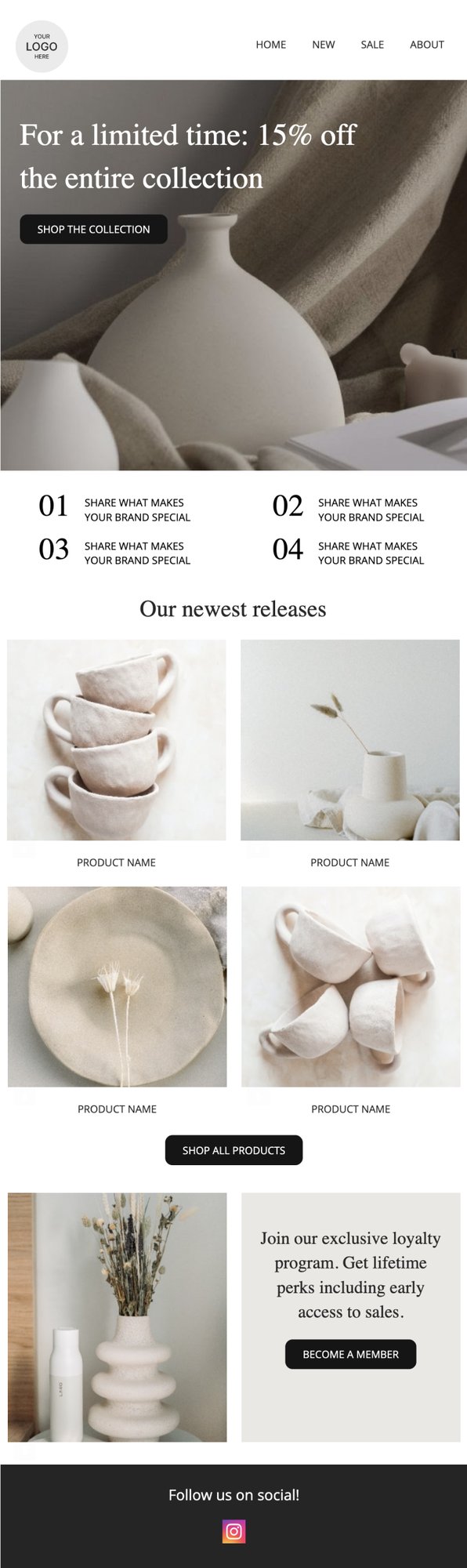

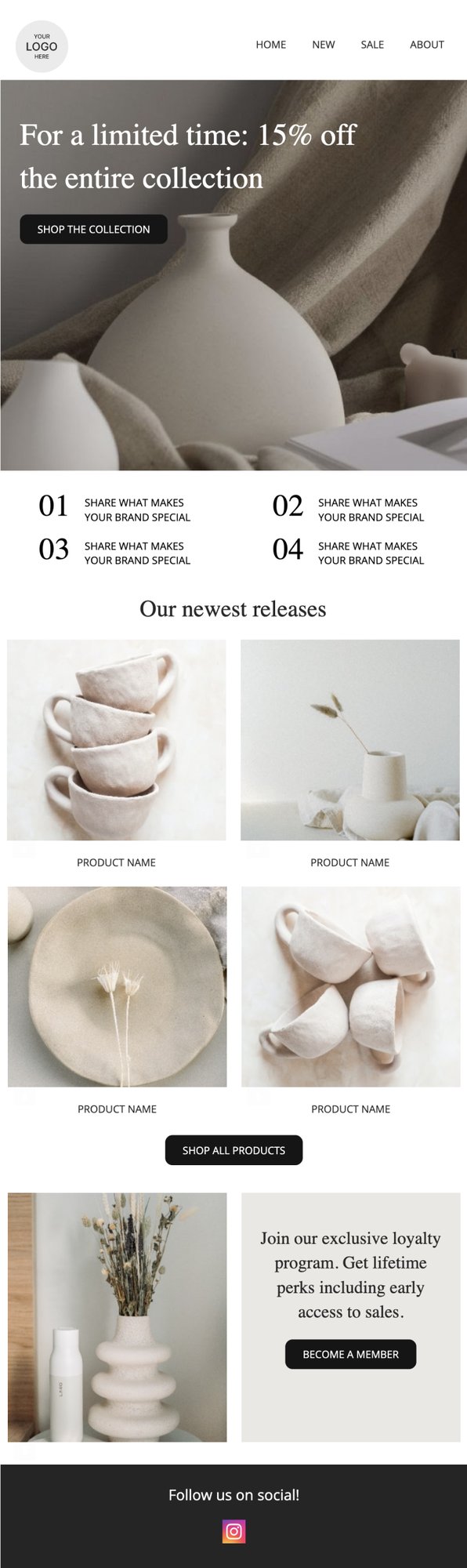

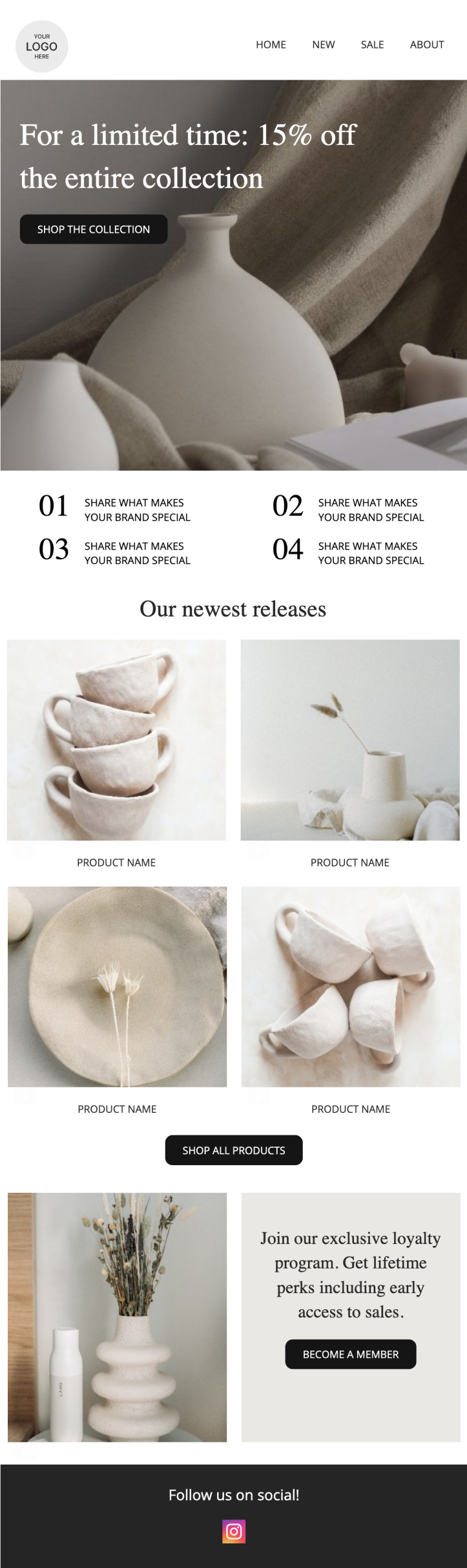

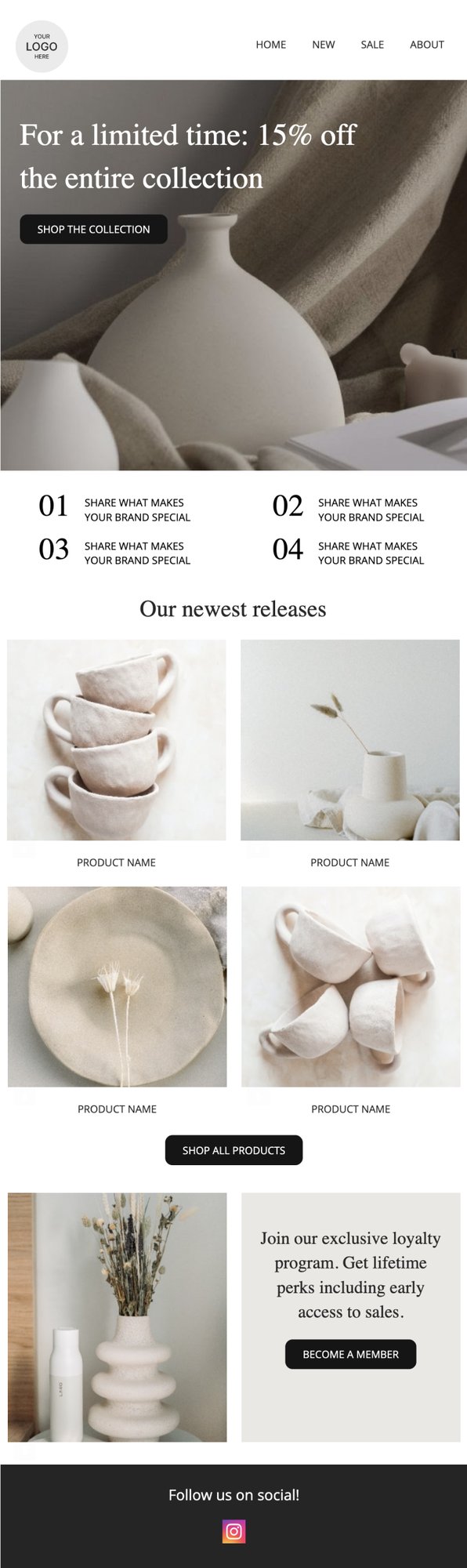

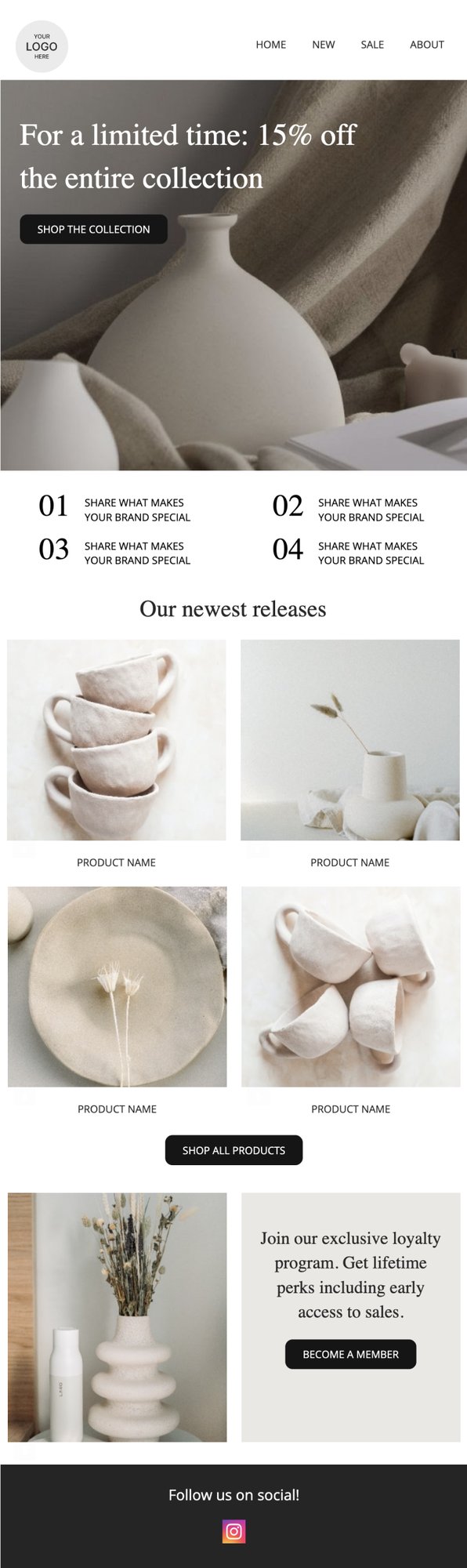

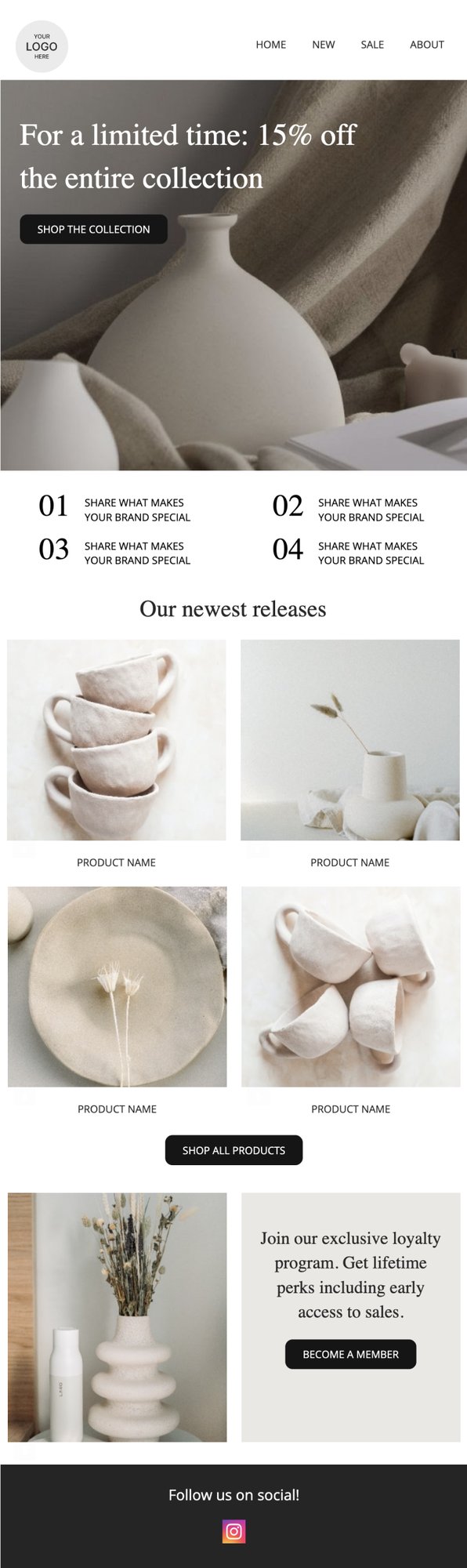

PRIVY EMAIL TEMPLATE

New Release Roundup

Dropping a new collection? Announce your new products here and share what makes your brand special.

Try this template

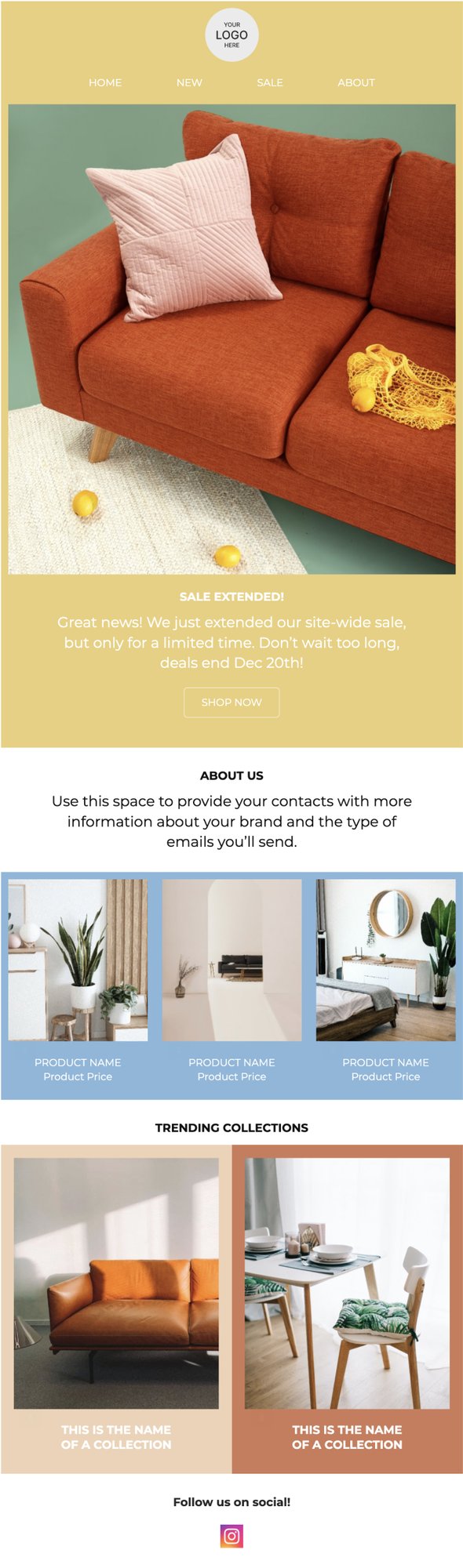

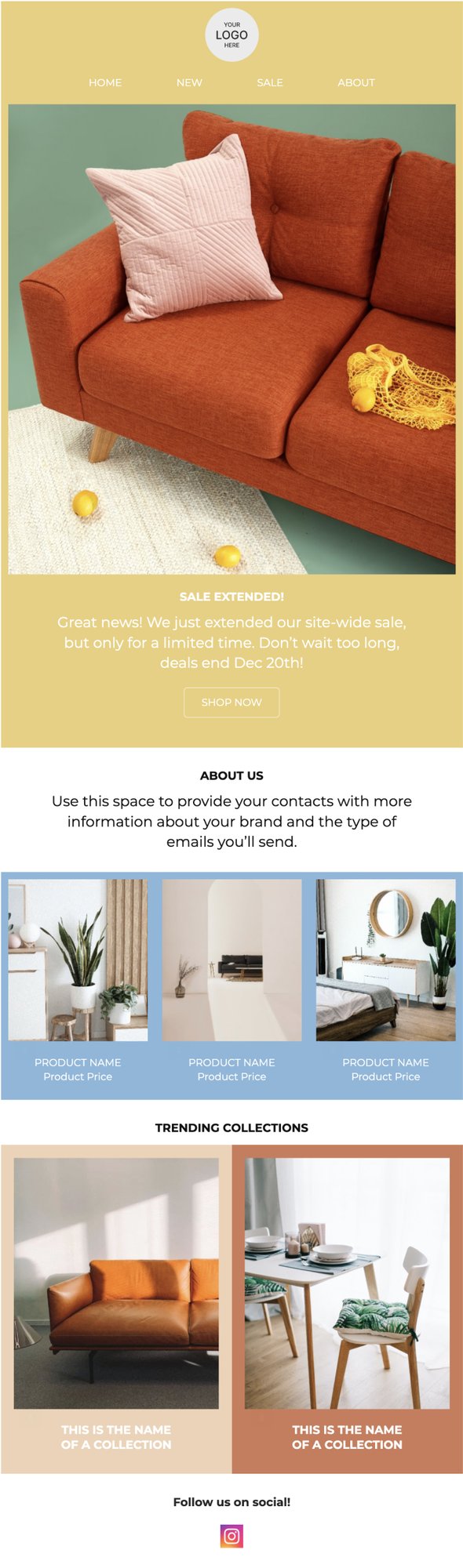

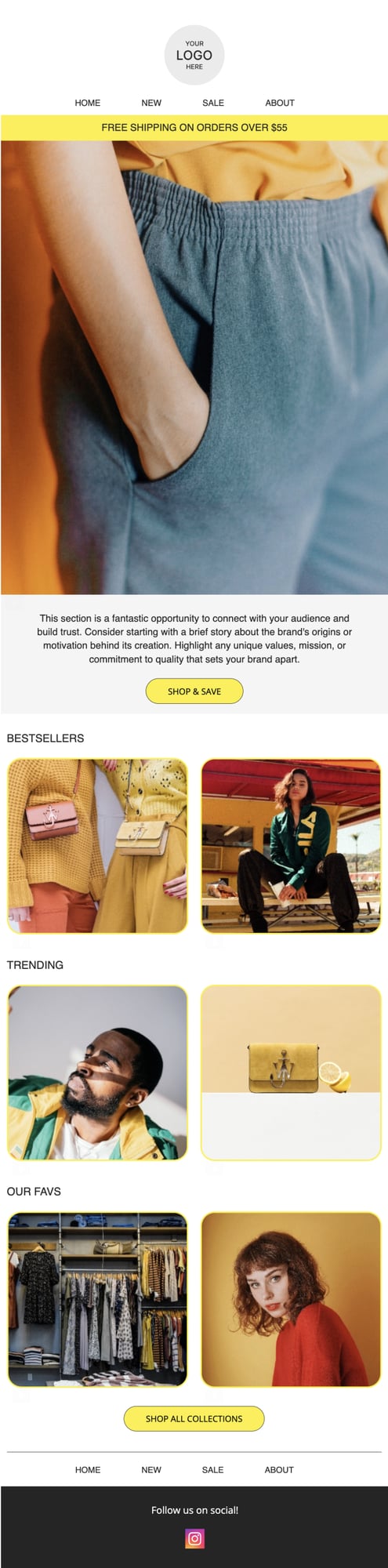

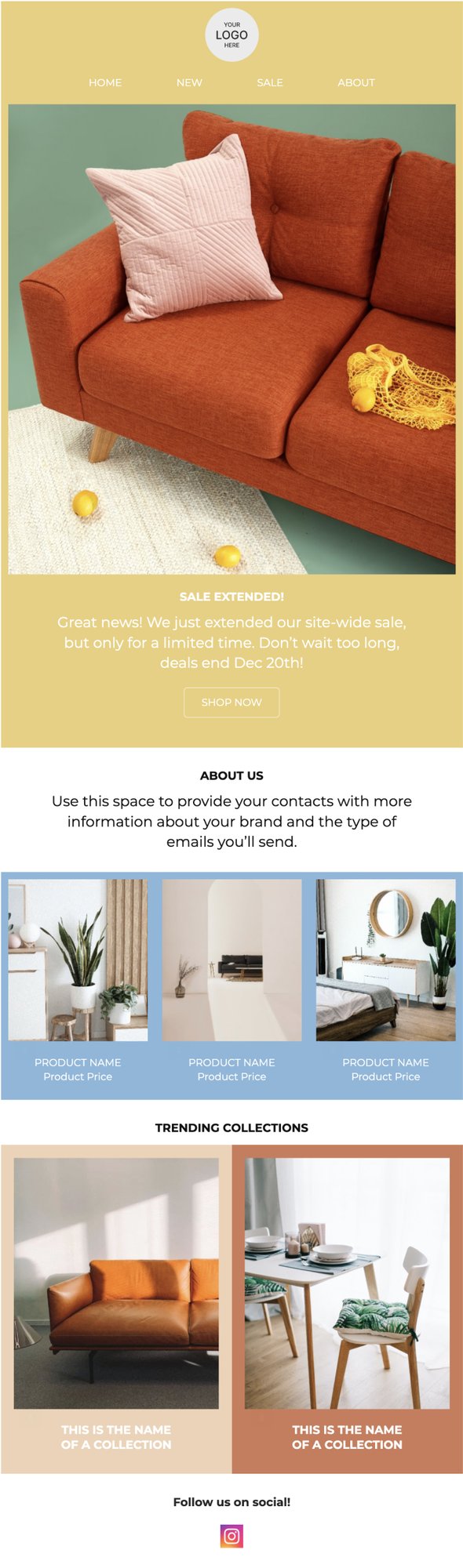

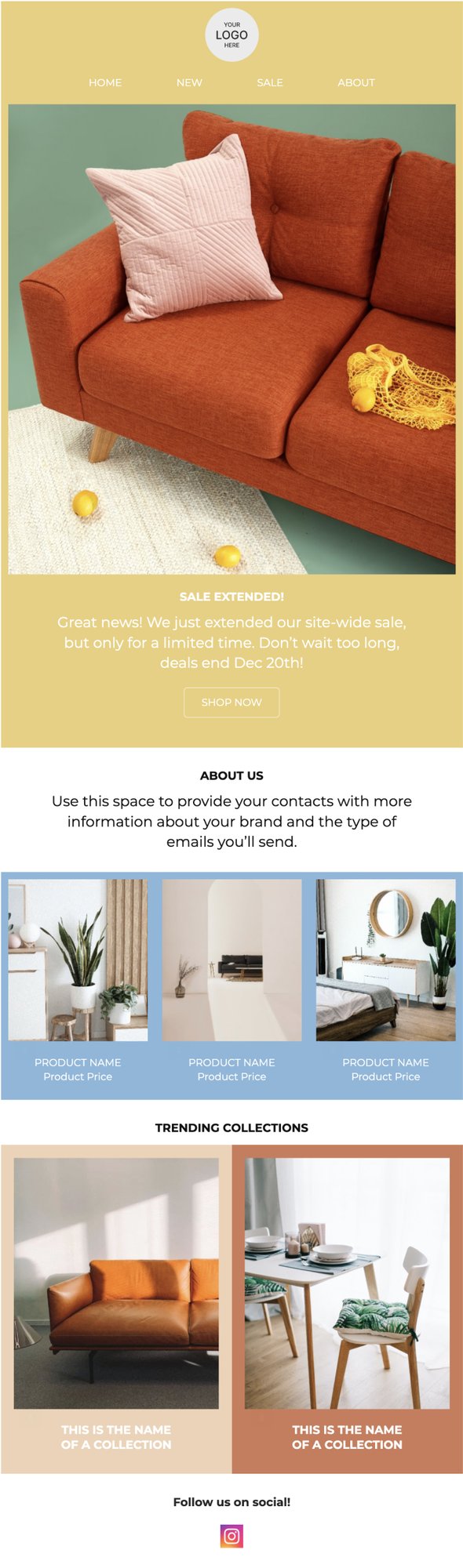

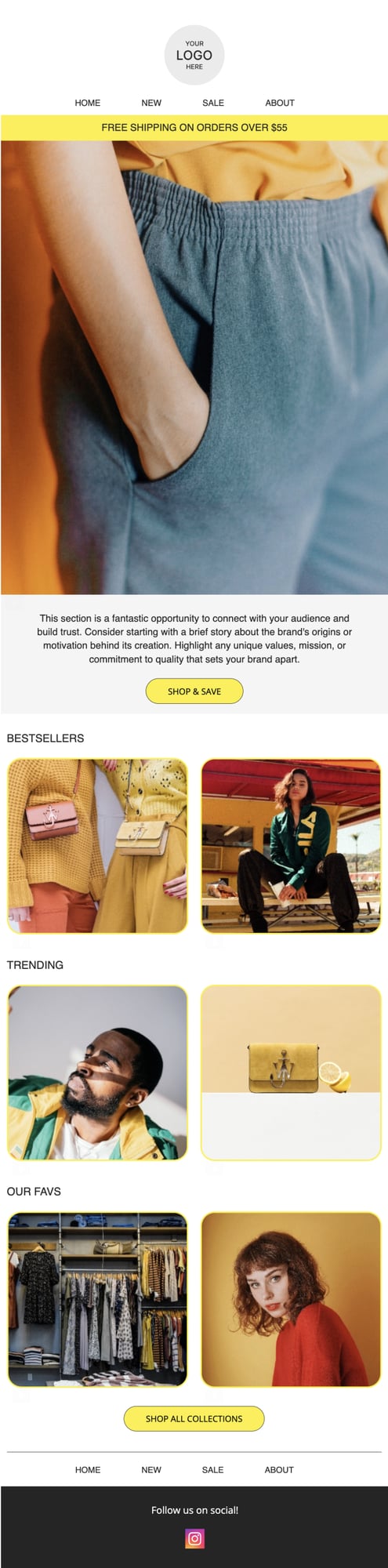

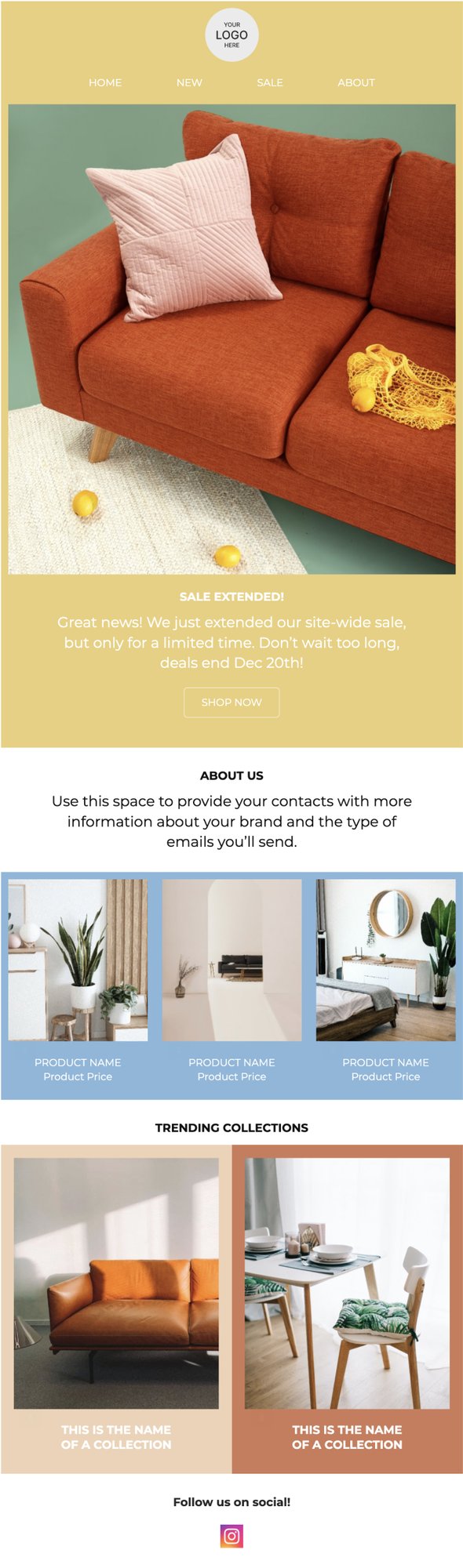

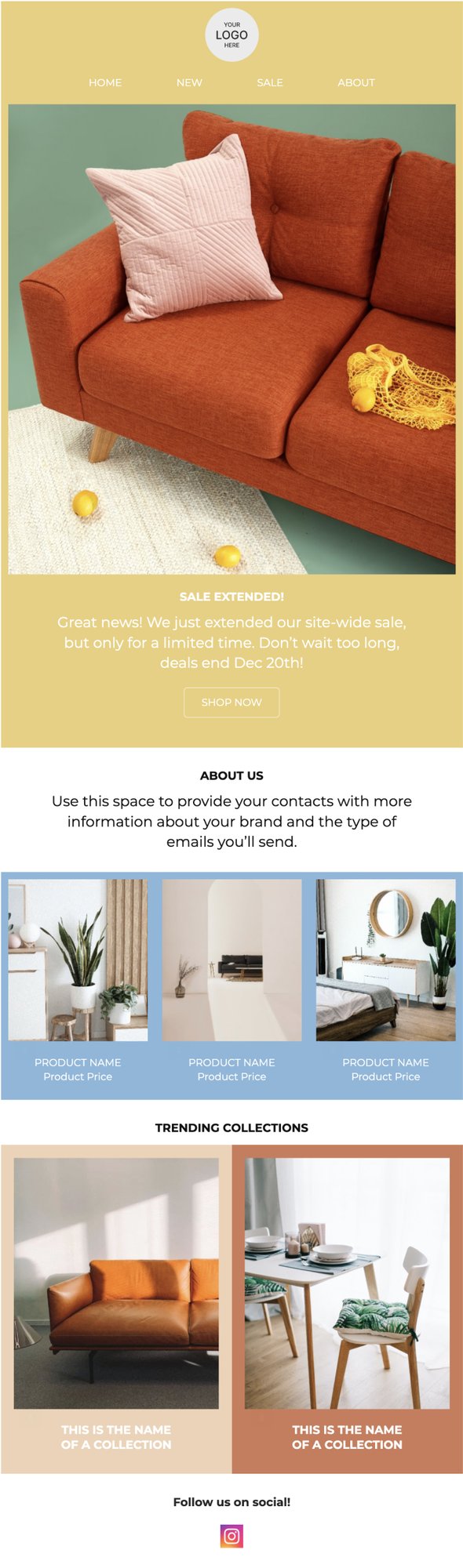

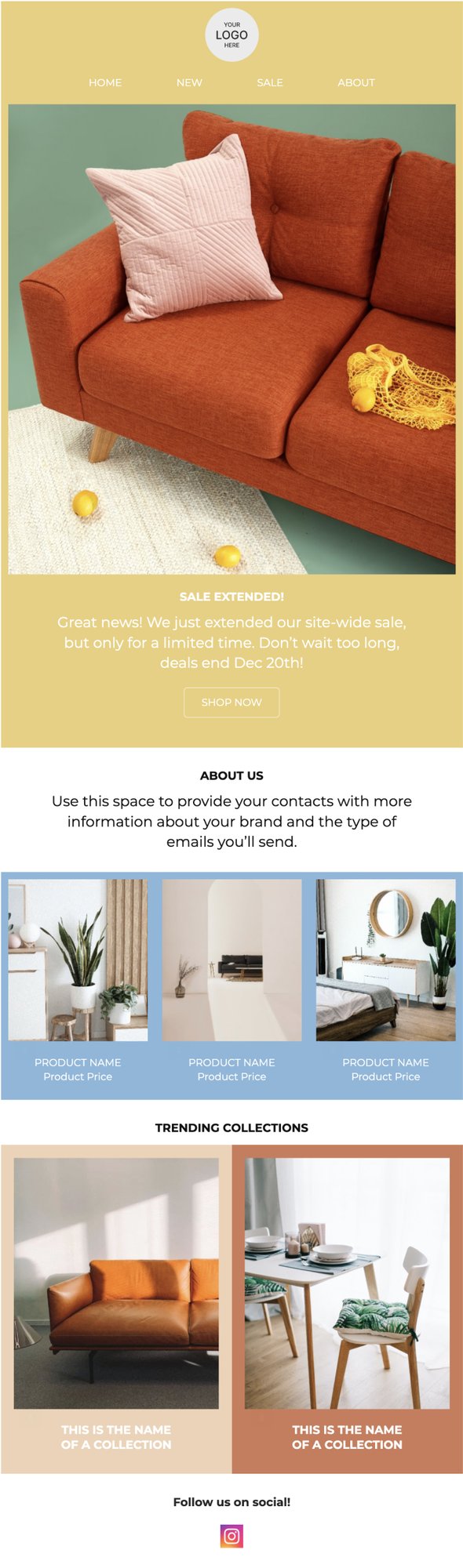

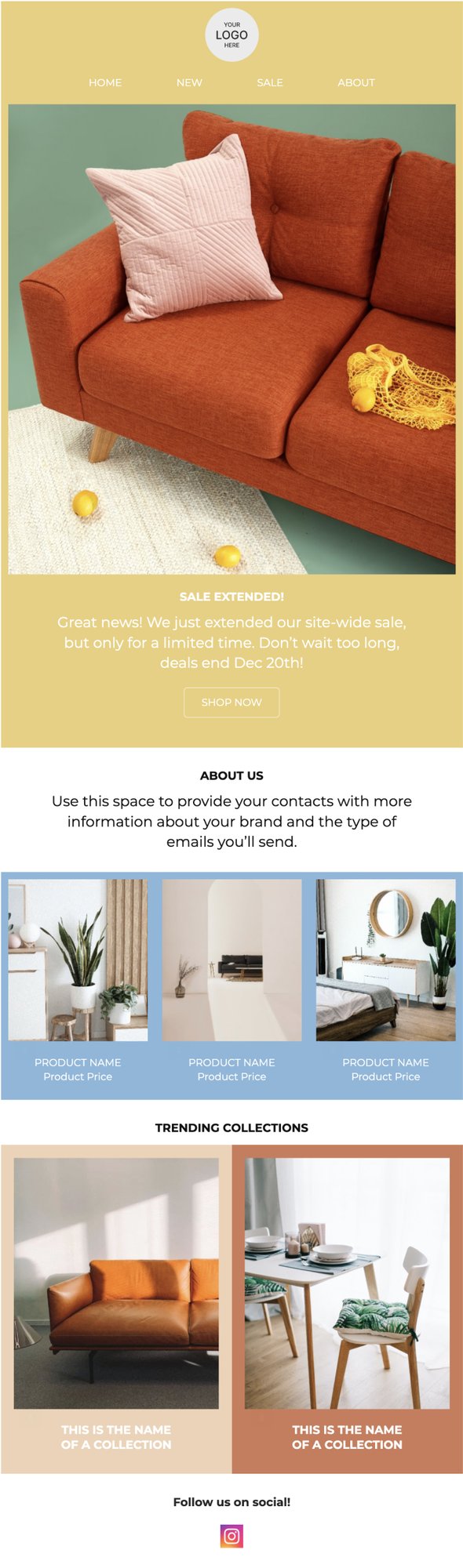

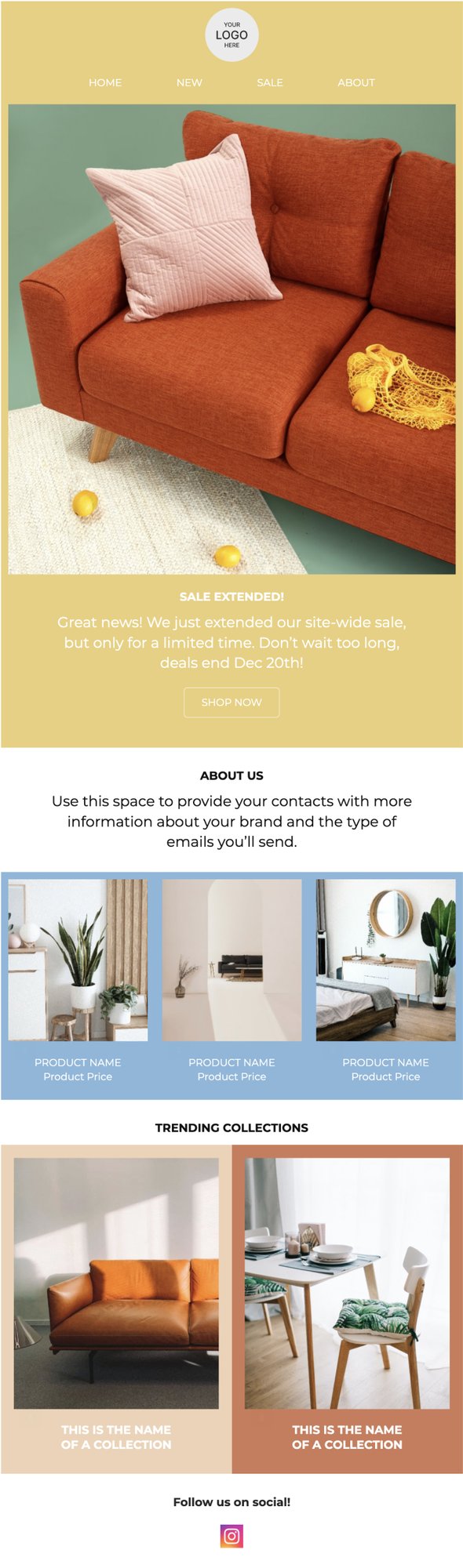

PRIVY EMAIL TEMPLATE

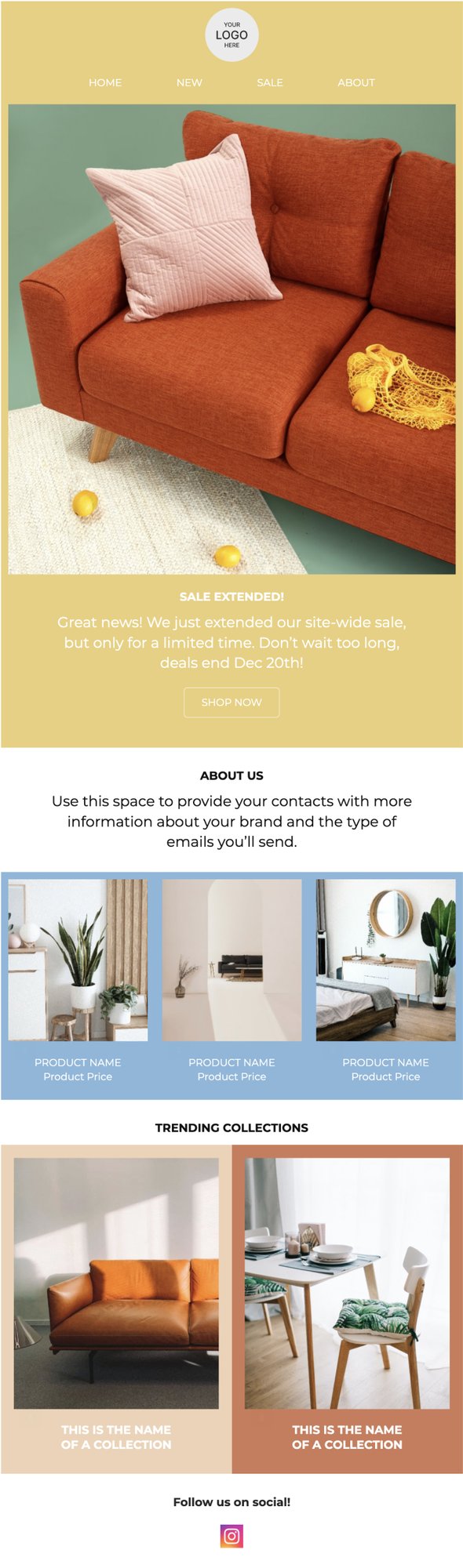

Colorful Sale Extension

This template is a bold, fun way to announce a sale and get some of your bestselling products in front of subscribers.

Try this template

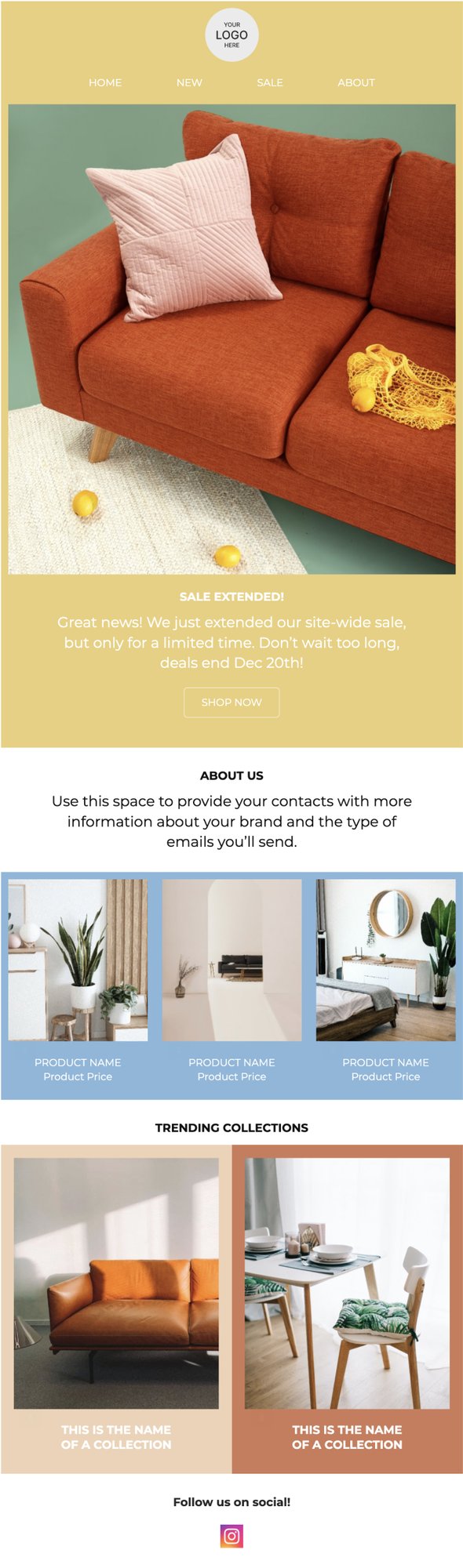

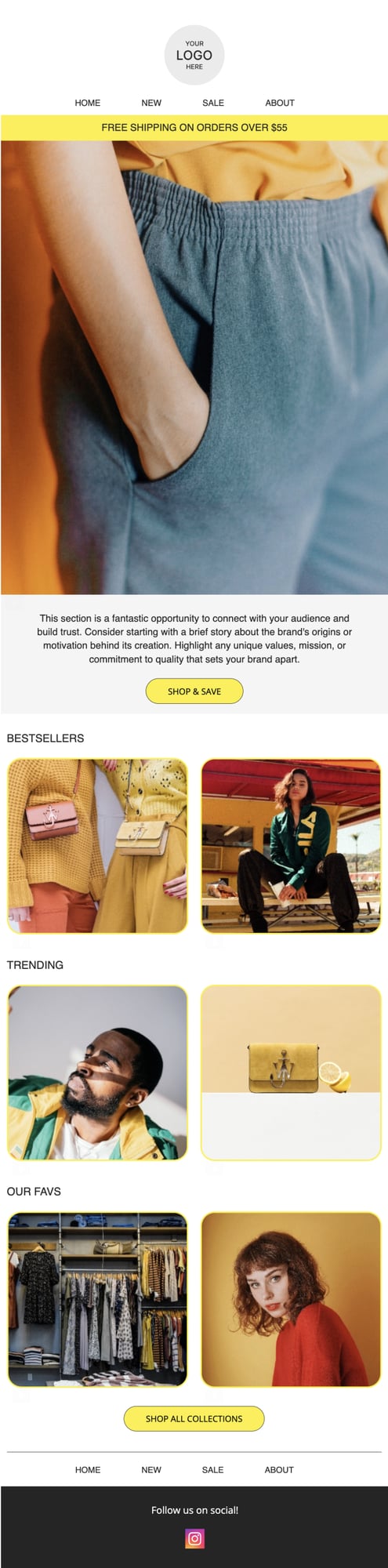

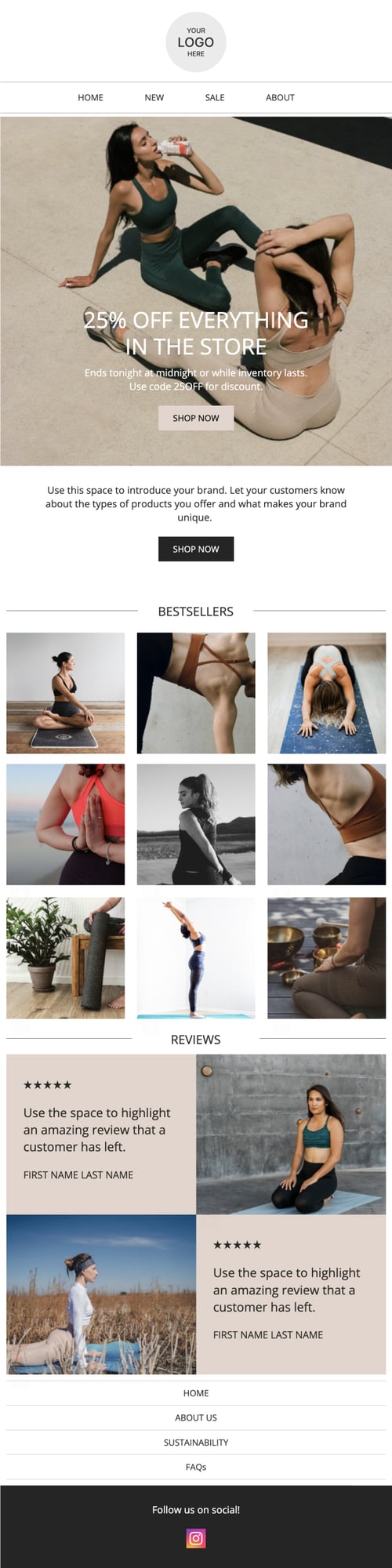

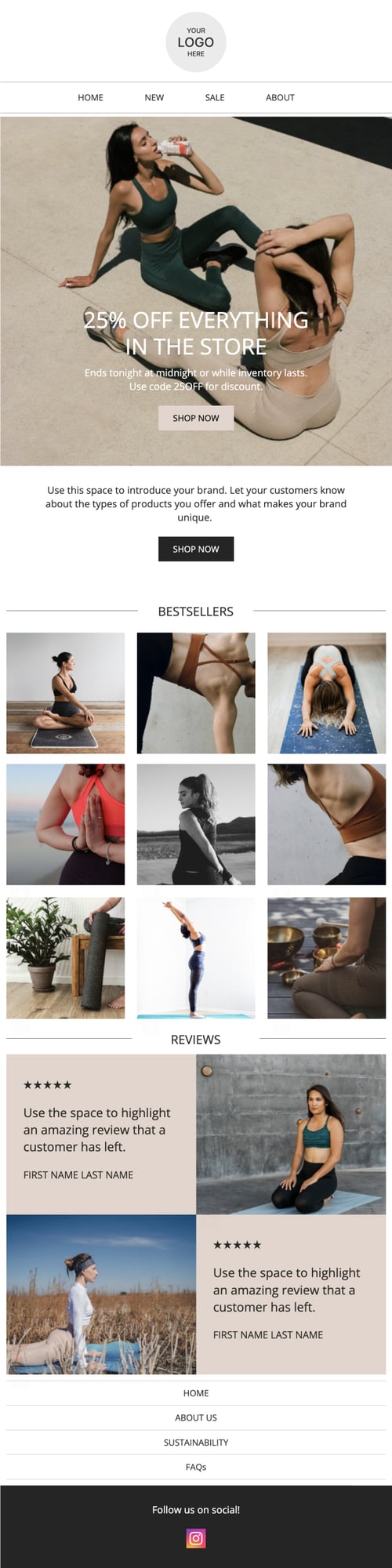

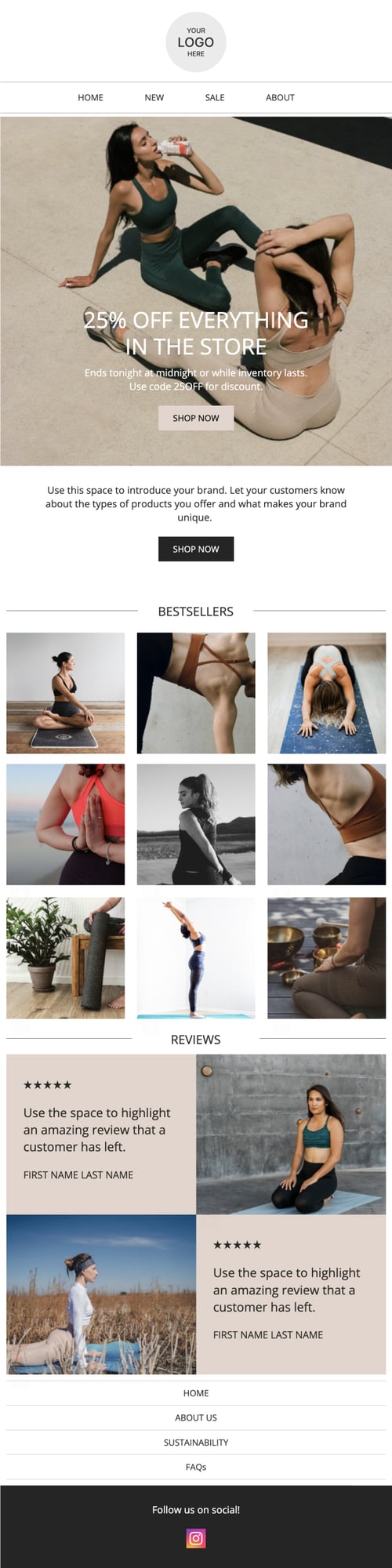

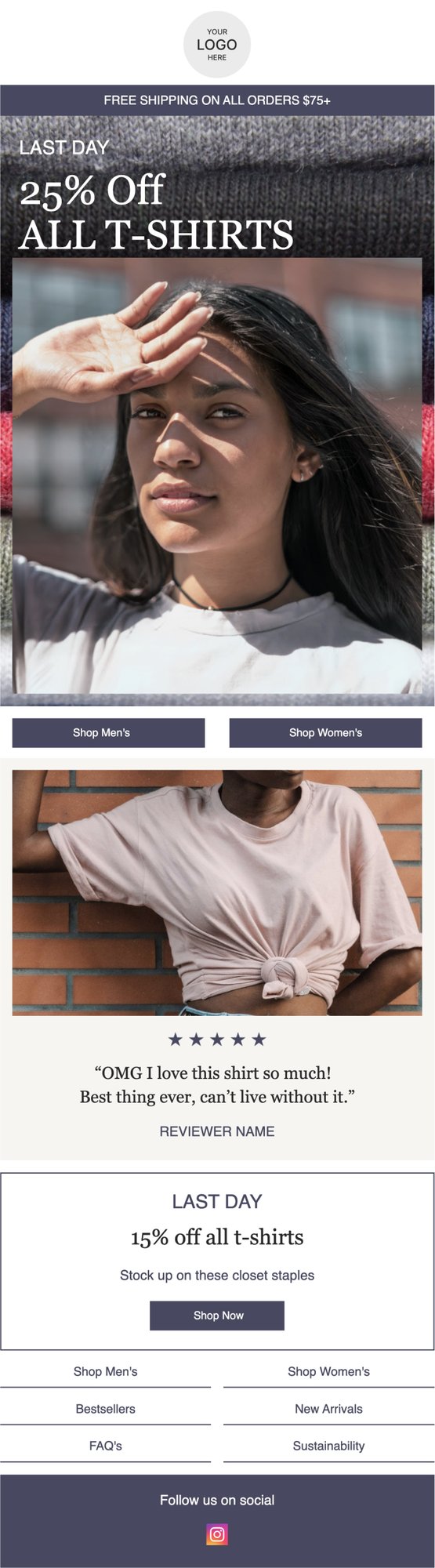

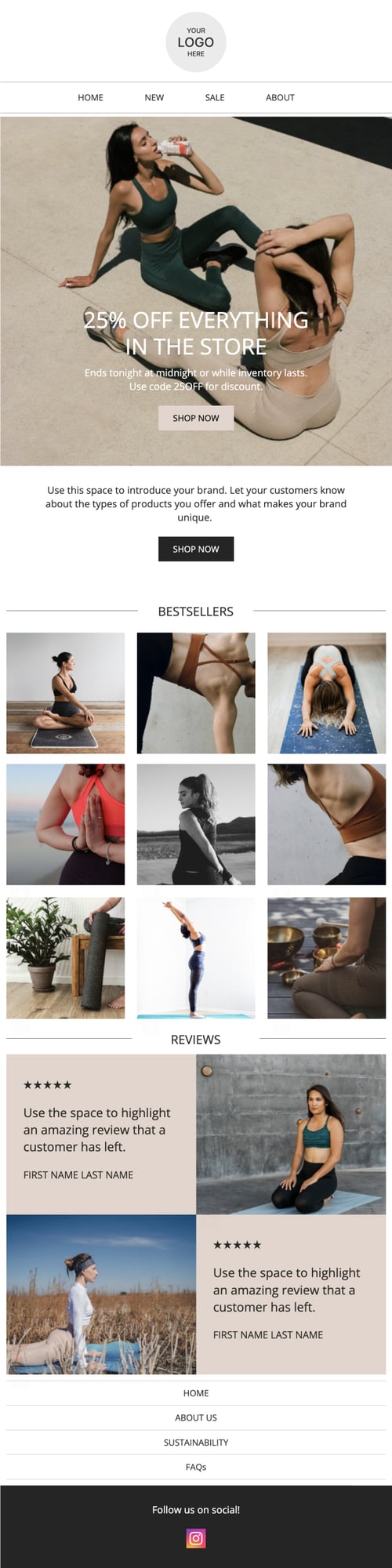

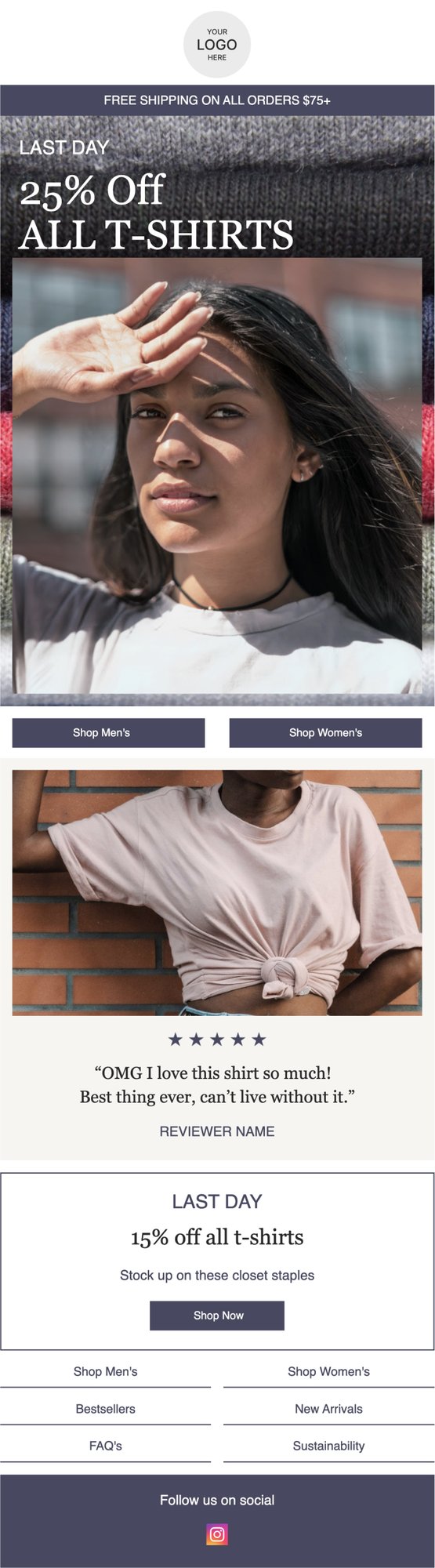

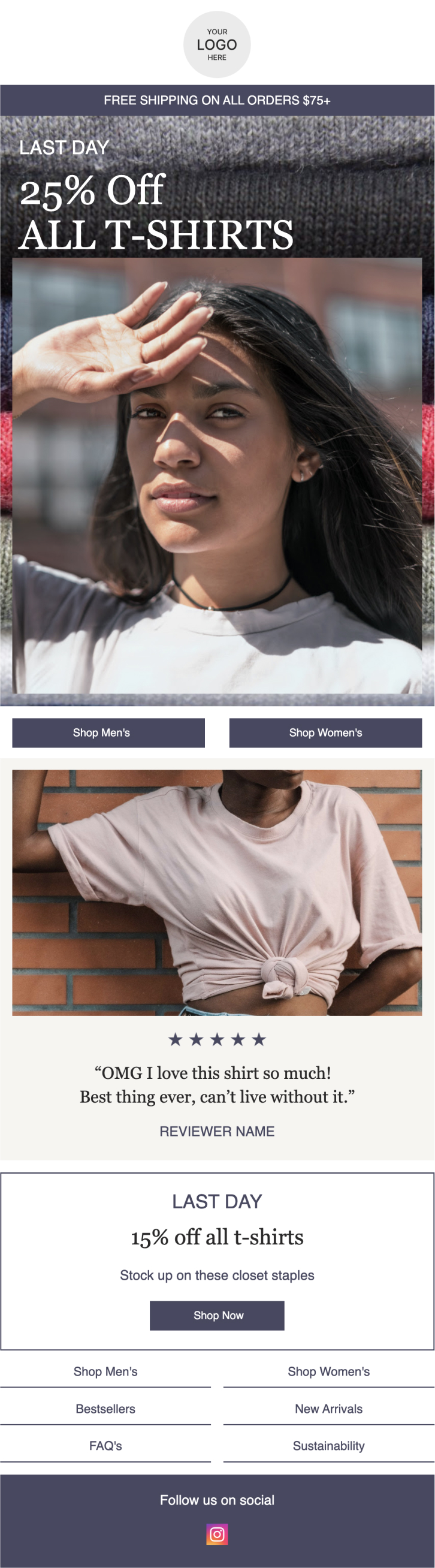

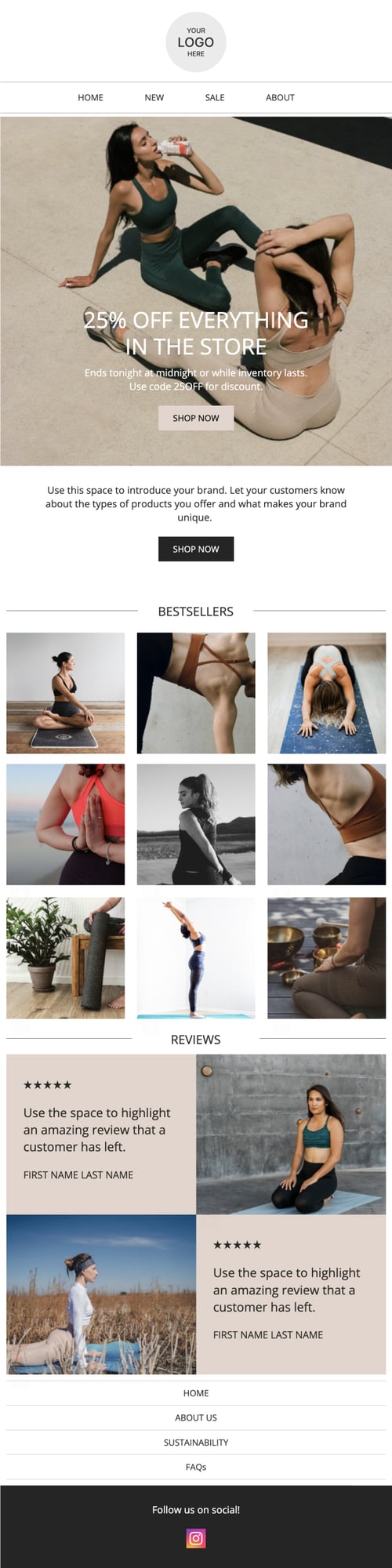

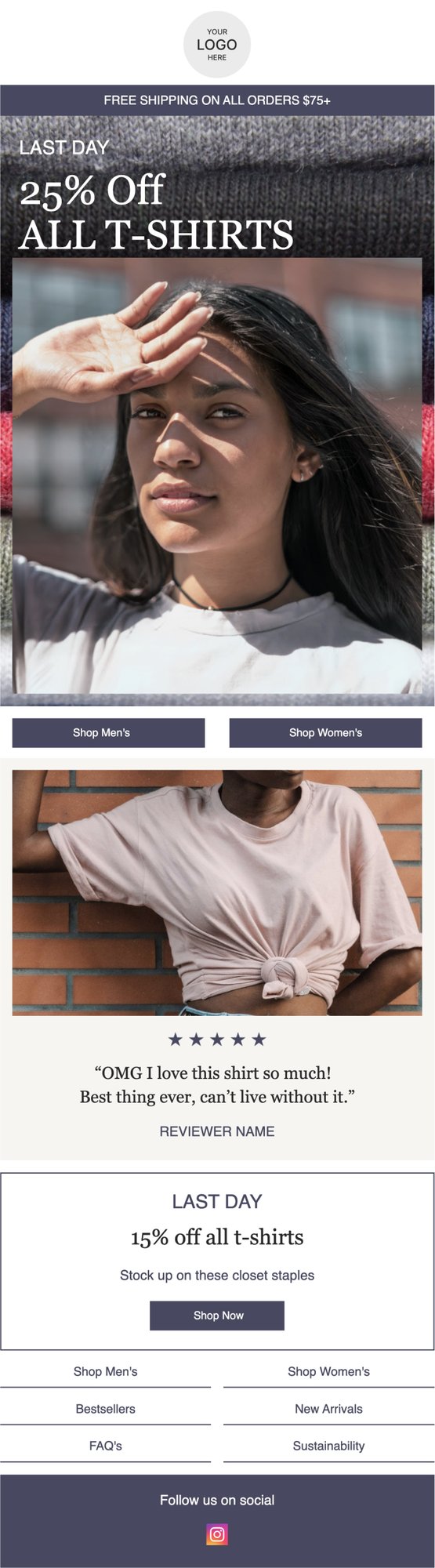

PRIVY EMAIL TEMPLATE

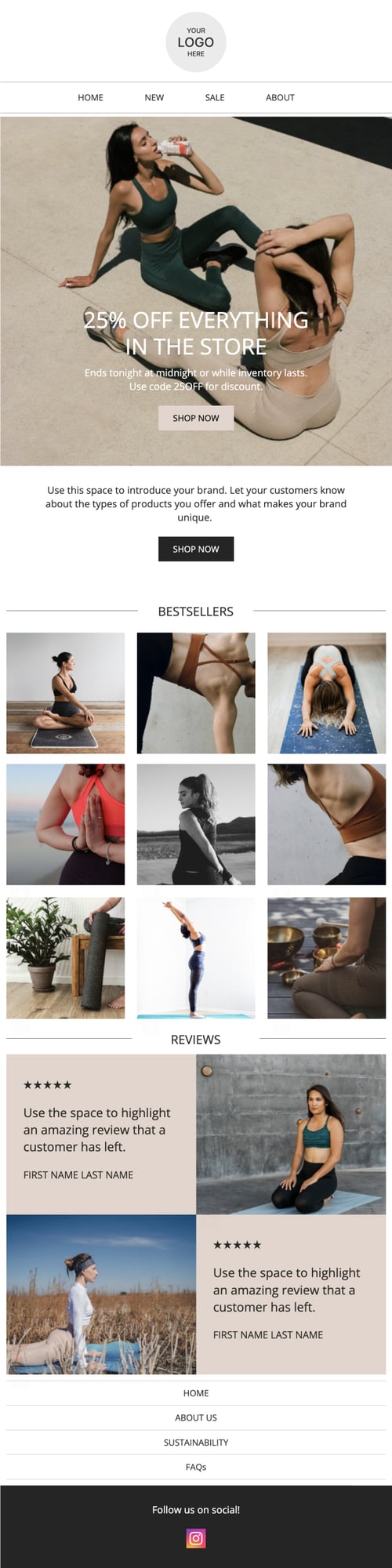

Sale Announcement with Reviews

Reviews are a great way to build credibility for your brand. Feature your most glowing feedback to make subscribers even more sure they want to buy from you.

Try this template

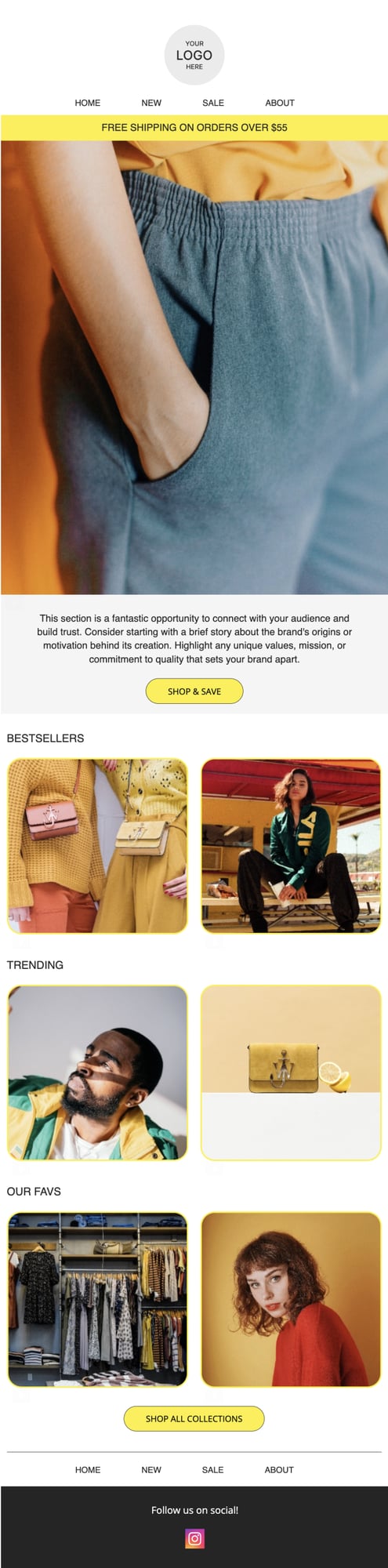

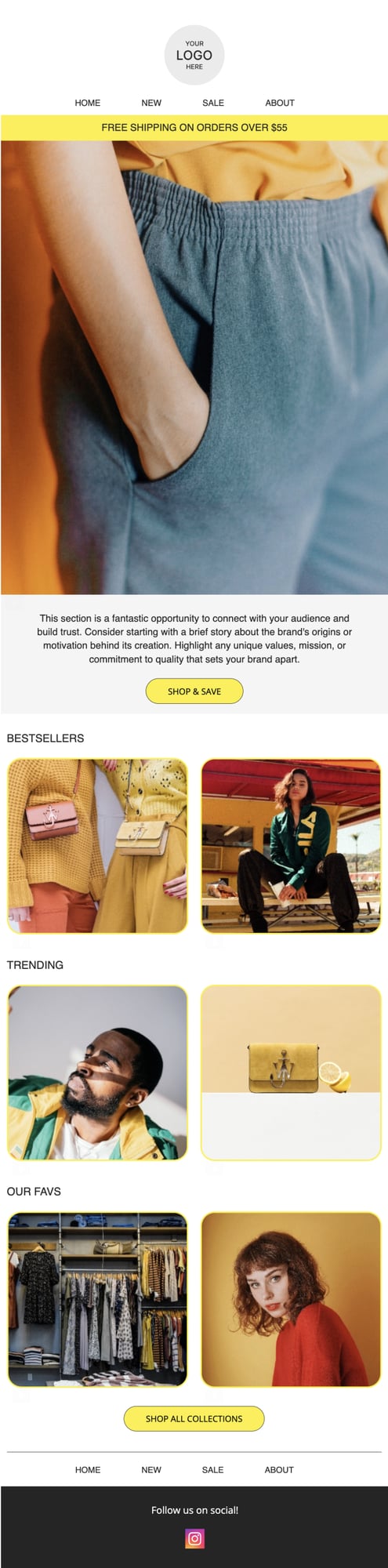

PRIVY EMAIL TEMPLATE

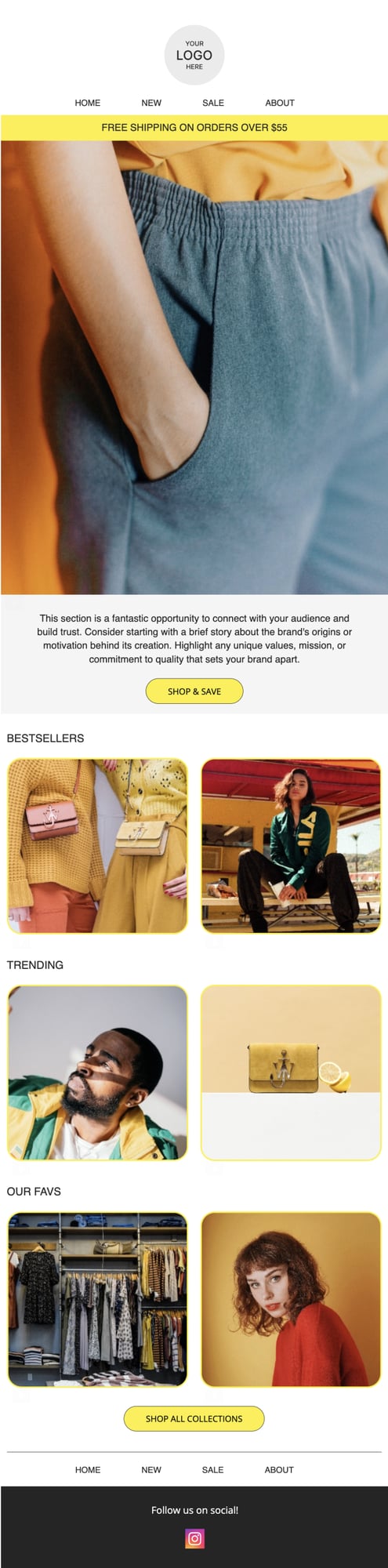

Featured Collections Newsletter

Use this template to display a few of your product collections. Rotate in seasonal products or limited releases to make this email even more impactful.

Try this template

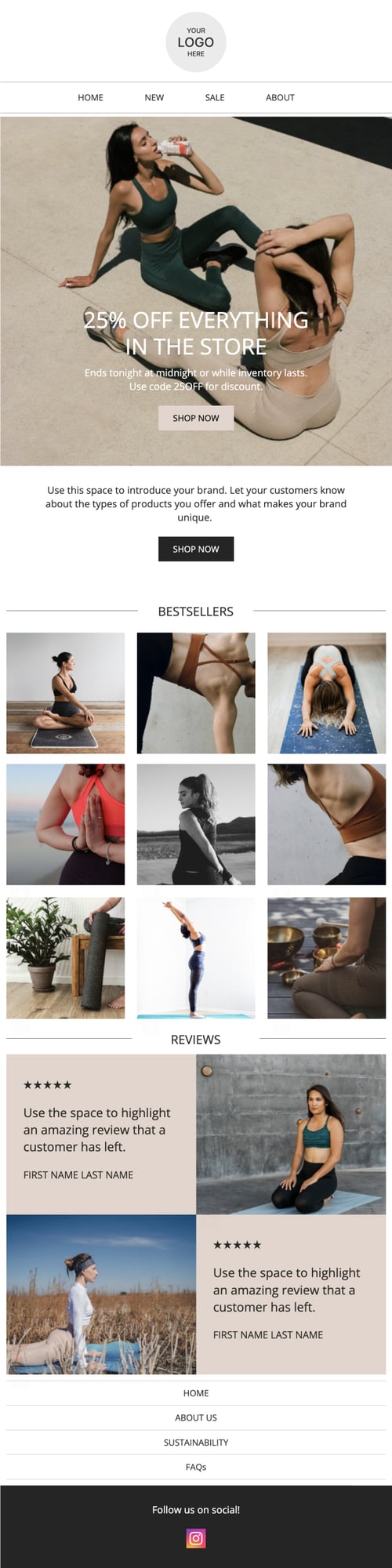

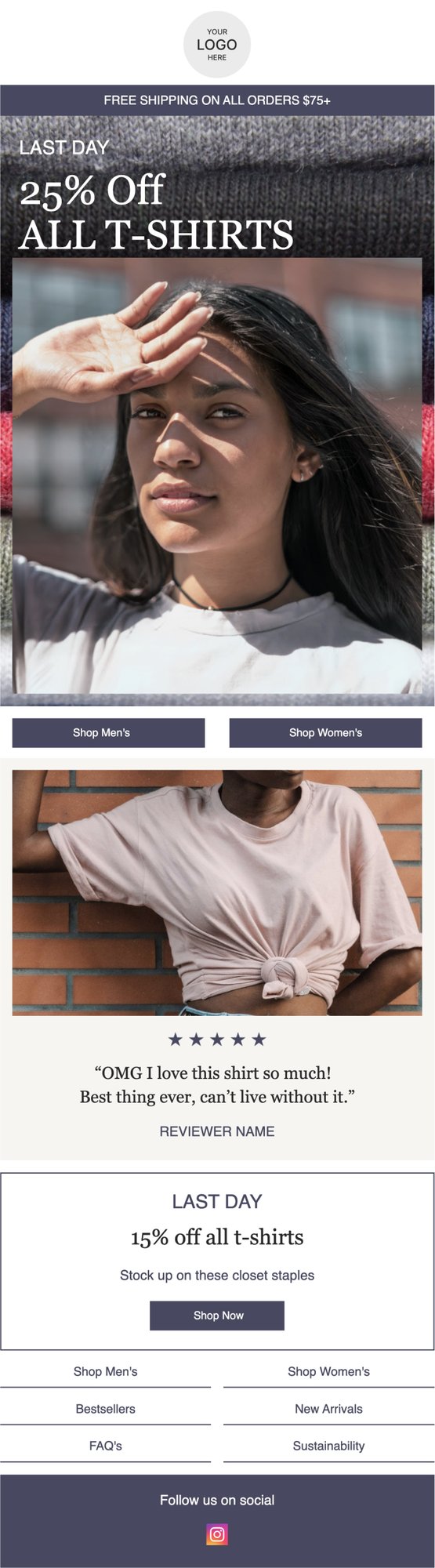

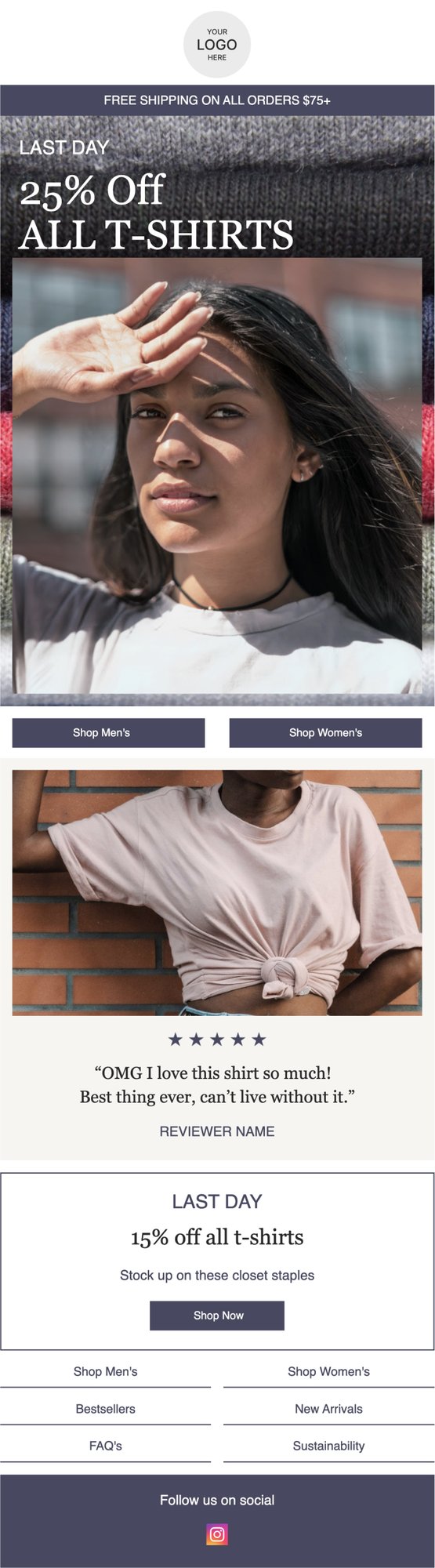

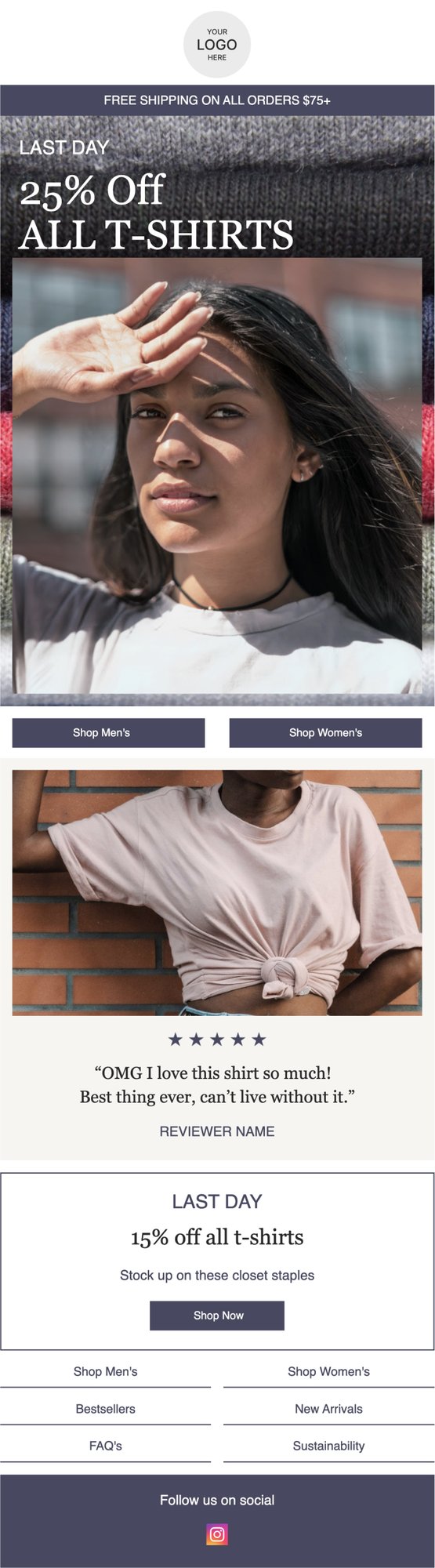

PRIVY EMAIL TEMPLATE

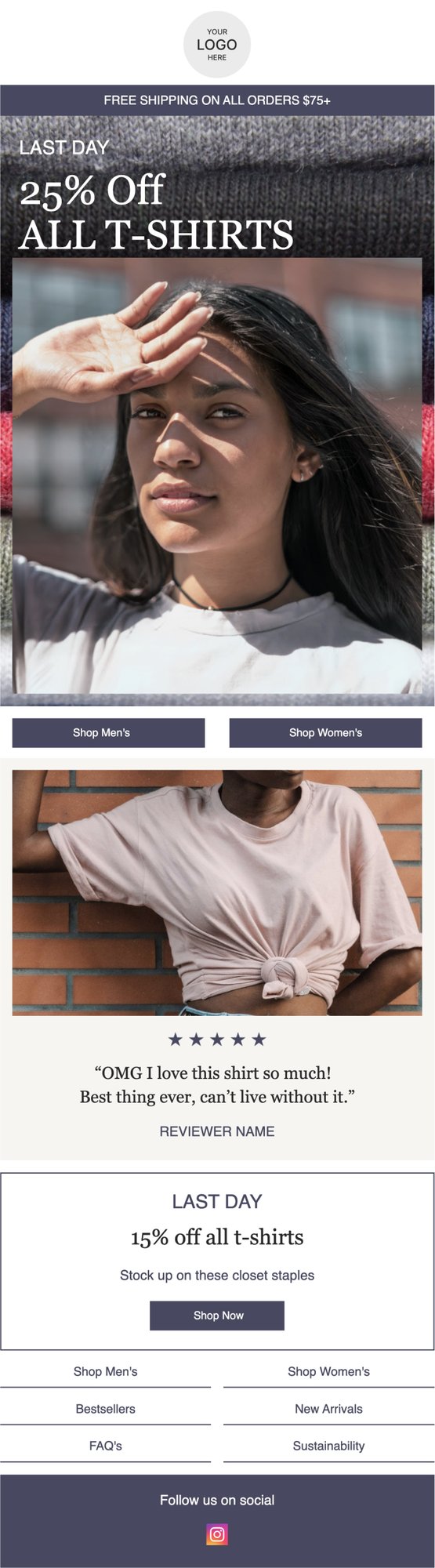

Last Chance Sale with Review

Remind your customers that your sale is ending soon, and give them even more encouragement to buy by featuring a product review.

Try this template

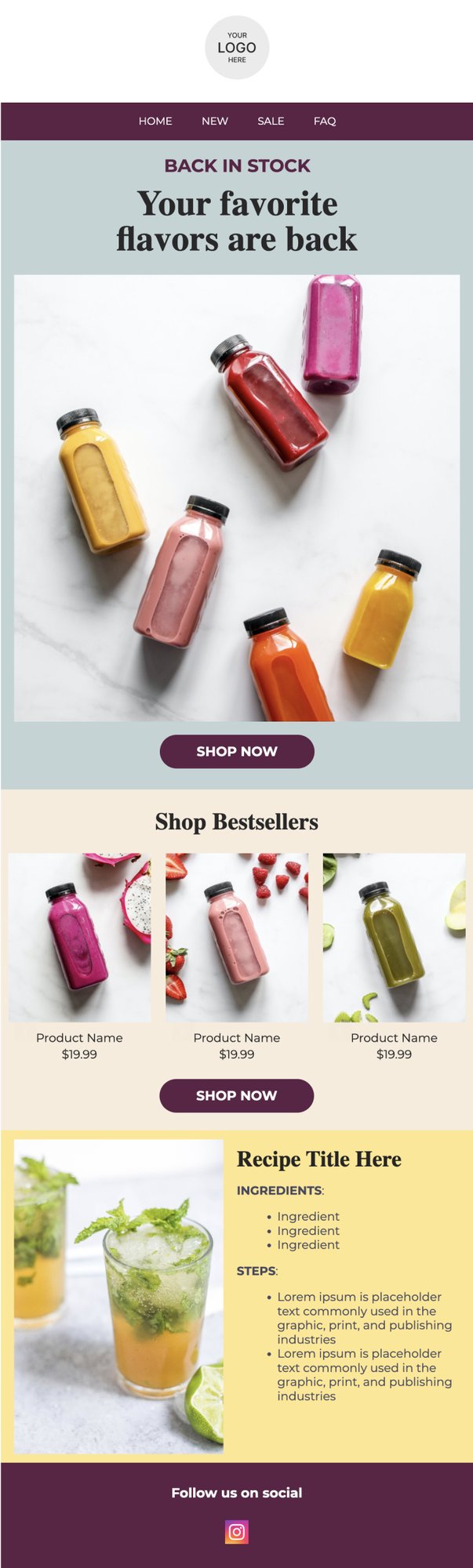

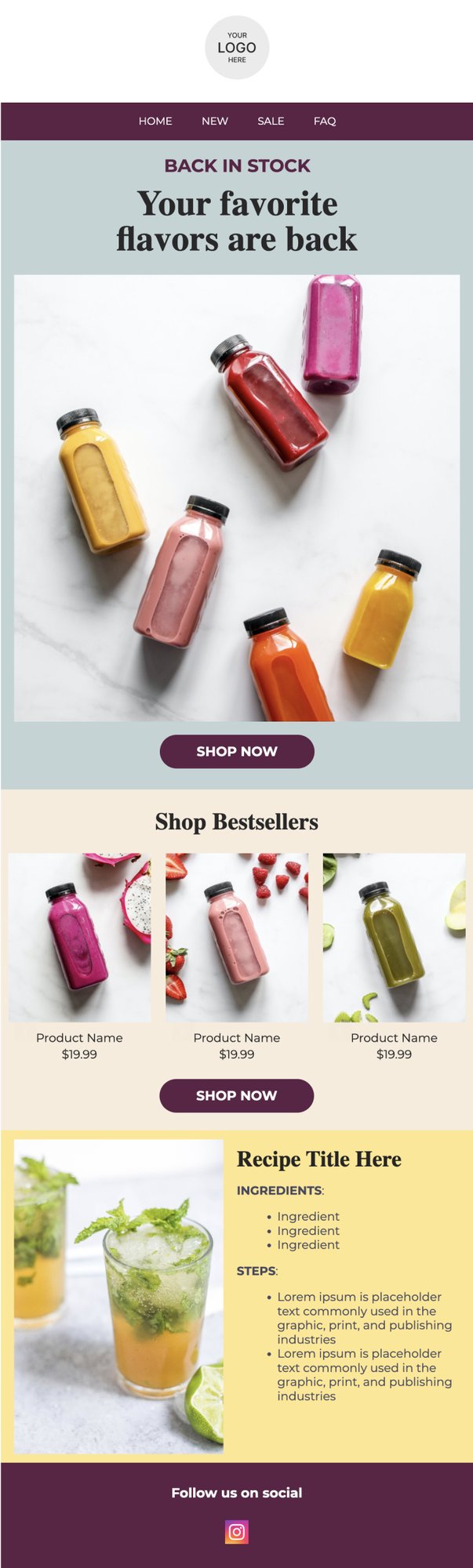

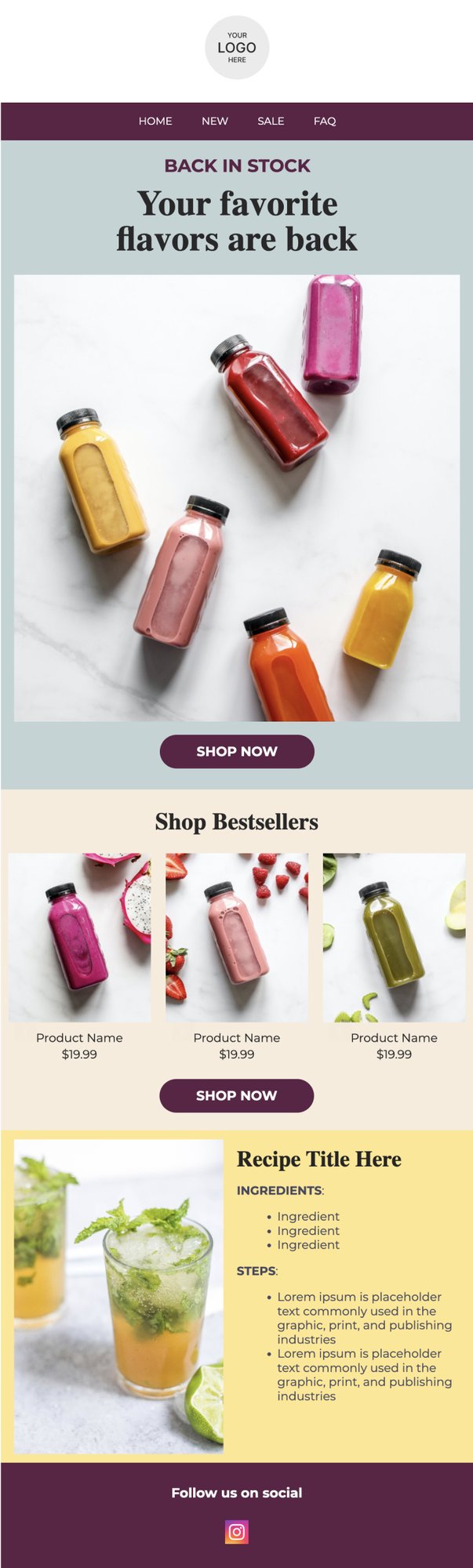

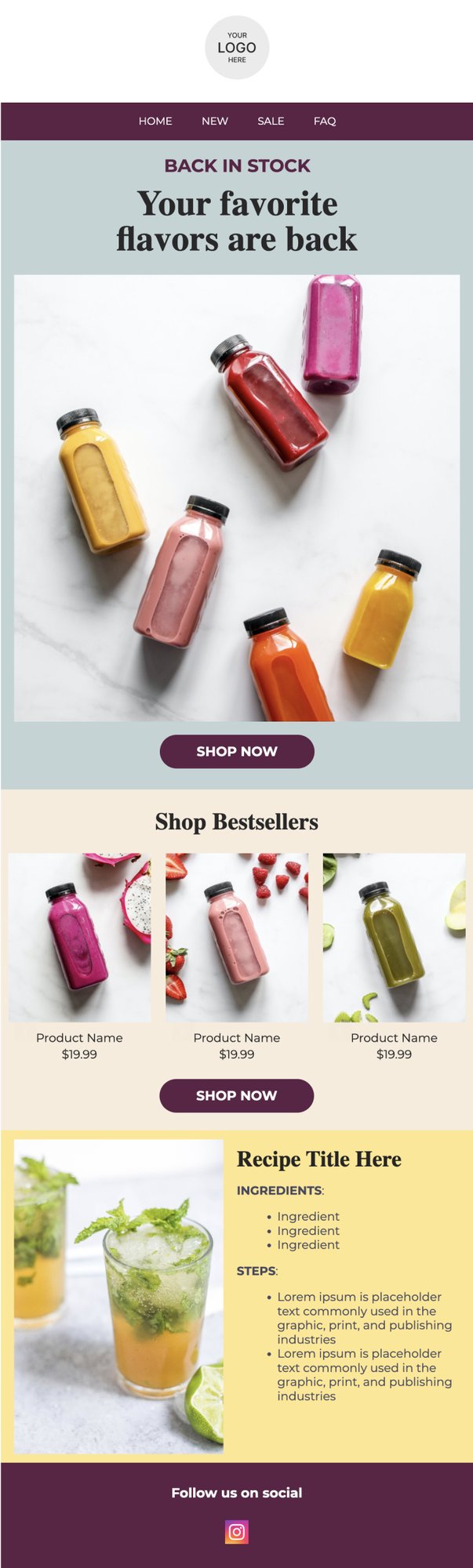

PRIVY EMAIL TEMPLATE

Bestsellers + Featured Recipe

Showcase some of your bestselling products, and give customers a taste of what they're missing by featuring a delicious seasonal recipe using your products.

Try this template

Privy’s trusted platform can help your brand grow.

And we have the receipts to prove it.

17,000+ brands choose Privy for their ecommerce marketing.

$9M+ in revenue driven for Privy email customers each month.

100M+ monthly email sends by Privy customers.

342M+ total signups collected by Privy customers.

Made to work seamlessly with your Shopify store

Privy’s ecommerce marketing platform has a powerful and direct integration with Shopify to help your brand sell more online, no additional apps needed.

Choose right from your product catalog

Insert products from your Shopify store into an email in just two clicks.

Easy-to-understand revenue reporting

See exactly how your email & text marketing campaigns are paying off.

Automated abandoned cart emails & texts

Automatically email or text shoppers who left items in their cart, even if they haven’t started the checkout process.

Create unique or master coupon codes

Control who gets your coupons and how they are shared. Send recipients their own unique coupon code that automatically syncs with your store.

Case Studies

Read stories from real brands who have had real success using Privy

Get coaching & support from ecommerce experts, no matter where your Privy journey begins

Try Privy Free for 15 Days

Grow your email or SMS list with our pre-built templates and start sending money-making emails for the next 15 days. Automatically syncs with Shopify.

Start my trial

No credit card required.

How do our merchants use Privy to grow their brands?

Within six days we'd recovered the cost of Privy for the year. We've grown our list over 200% in the first three months. And also through the use of the opt-in and conversions, we've tracked our email marketing and we've seen an uplift in sales of around 300% since we've started using Privy. So it's been overall fantastic for us. It's paid for itself many times over already.

"Intuitive, full of valuable automation to maximize conversions and upsells, while making every visitor and customer feel seen."

I love working with Privy. The experience with automations and emails is so similar to Klaviyo, but the value is so much better. With Klaviyo, I felt like I was wasting money. My emails are performing well in Privy and I love that I can review my revenue performance.

"We've been using Privy for almost a year to boost our email opt-ins, manage email automations, and provide a better overall user-experience. Having a single tool to manage so many elements all-in-one has been a game-changer. Highly recommend this platform for any growing business."

It's wonderful to see how Privy strives to improve on a regular basis. Their openness to feedback and suggestions about their products is admirable, and I eagerly anticipate what new products they will introduce in the future. Privy employs individuals who are really concerned about you and your business. Isn't that what we all want when we use a tool to help us grow our businesses?

"This app is fantastic and has already paid for itself a hundred times over. A key component for any ecommerce business owner looking to automate newsletters and email marketing, to offering discounts, to saving abandoned carts."

The one-on-one coaching with Privy has not only made me feel confident, but I can talk to other retailers about and I can tell them what I’m trying and what I’m doing. Sometimes they say to me, “Oh my goodness, I can’t believe you’re even doing all that.” And it’s easy when you have the support I do with Privy.

Still have questions?

How much does Privy cost?

Privy has a Free Plan, Starter Plan, and Growth Plan. The cost of each plan depends on your number of mailable and/or textable contacts. You can also add SMS marketing or list growth separately. For a more detailed breakdown of what Privy will cost, use our Pricing & Plan Calculator.

How is Privy's customer service & support?

All Privy customers get live chat or email support from a human – 7 days a week with an average wait time of 5 minutes.

At Privy, you’ll always get support from a real person no matter how many contacts you have. Plus, if you have 10,000+ contacts, you’re eligible for 1:1 ongoing support with a customer success manager.

Can I schedule a demo with your team?

Does Privy limit the number of emails I can send each month?

If you're on the Starter Plan or the Growth Plan, you won't have a sending limit: Privy email customers on these plans can send an unlimited number of emails, regardless of how much the plan costs. During the free 15-day trial, sending is limited to 100 emails per day. Once you upgrade to a paid plan after the trial, that limit is removed.

Does Privy limit the number of texts I can send per month?

Yes. The number of texts you can send per month is limited to 6 times the number of textable contacts you have. For example, if you have 500 textable contacts, you’ll be able to send up to 3,000 texts per month.

Can I import contacts from other email services to Privy?

Yes. Privy integrates with most other email service providers and allows you to import contacts directly into your Privy account. You can also upload a CSV file of your contacts to Privy.

Can I send text messages to contacts that I collected with something other than Privy?

No. Your textable contacts must provide their phone number to you via a Privy popup or onsite display in order for you to text them with Privy Text.
